Huachuan Wang

Temporal Streaming Batch Principal Component Analysis for Time Series Classification

Oct 28, 2024

Abstract: In multivariate time series classification, current sequence analysis models classify well but show significant shortcomings on long-sequence multivariate data, such as prolonged training times and decreased accuracy. This paper focuses on optimizing model performance for long-sequence multivariate data by mitigating the impact of extended time series and multiple variables on the model. We propose a principal component analysis (PCA)-based temporal streaming compression and dimensionality reduction algorithm for time series data (temporal streaming batch PCA, TSBPCA), which continuously updates a compact representation of the entire sequence through streaming PCA time estimation with time block updates, enhancing the data representation capability of a range of sequence analysis models. We evaluated this method with various models on five real datasets, and the experimental results show that it performs well in terms of both classification accuracy and time efficiency. Notably, the method becomes more effective as sequence length grows: on the two longest-sequence datasets, accuracy improved by about 7.2% and execution time decreased by 49.5%.
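The abstract does not spell out the TSBPCA update rule, so the following is only a generic sketch of block-wise streaming PCA, not the authors' algorithm. It assumes NumPy is available, treats each time block as rows of observations over the variables, and merges per-block means and scatter matrices so that the principal directions can be refreshed after every block without revisiting earlier data. The class name `BlockStreamingPCA` and its methods are hypothetical, and the sketch reduces only the variable dimension, not the sequence length.

```python
import numpy as np


class BlockStreamingPCA:
    """Hypothetical illustration: PCA whose statistics are updated one time block at a time."""

    def __init__(self, n_components):
        self.k = n_components
        self.n = 0           # number of time steps seen so far
        self.mean = None     # running mean over variables
        self.scatter = None  # running sum of outer products of centered rows

    def partial_fit(self, block):
        """Merge one block of shape (time_steps, variables) into the running statistics."""
        block = np.asarray(block, dtype=float)
        m = block.shape[0]
        b_mean = block.mean(axis=0)
        centered = block - b_mean
        b_scatter = centered.T @ centered
        if self.n == 0:
            self.mean, self.scatter = b_mean, b_scatter
        else:
            # Pairwise merge of two (mean, scatter) summaries.
            delta = b_mean - self.mean
            total = self.n + m
            self.scatter = (self.scatter + b_scatter
                            + np.outer(delta, delta) * self.n * m / total)
            self.mean = self.mean + delta * m / total
        self.n += m
        return self

    def components(self):
        """Return the top-k principal directions as rows."""
        cov = self.scatter / max(self.n - 1, 1)
        vals, vecs = np.linalg.eigh(cov)          # eigenvalues in ascending order
        order = np.argsort(vals)[::-1][:self.k]   # indices of the k largest
        return vecs[:, order].T

    def transform(self, block):
        """Project a block onto the current principal directions."""
        centered = np.asarray(block, dtype=float) - self.mean
        return centered @ self.components().T
```

After every block has been passed to `partial_fit`, the accumulated covariance matches the one computed on the full sequence at once, which is what lets the compact representation be maintained incrementally.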

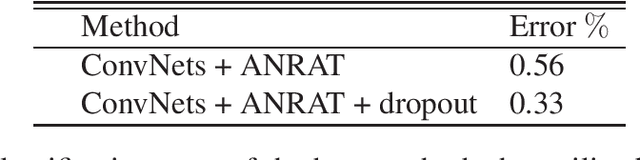

Adaptively Solving the Local-Minimum Problem for Deep Neural Networks

Dec 25, 2020

Abstract: This paper aims to overcome a fundamental problem in the theory and application of deep neural networks (DNNs). We propose a method that directly addresses the local-minimum problem in training DNNs. Our method convexifies the cross-entropy loss criterion by transforming it into a risk-averting error (RAE) criterion. To alleviate numerical difficulties, a normalized RAE (NRAE) is employed. The convexity region of the cross-entropy loss expands as its risk sensitivity index (RSI) increases. Making the best use of the convexity region, our method starts training with a large RSI, gradually reduces it, and switches to the RAE as soon as the RAE is numerically feasible. After training converges, the resultant deep learning machine is expected to be inside the attraction basin of a global minimum of the cross-entropy loss. Numerical results are provided to show the effectiveness of the proposed method.
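The abstract does not give the NRAE formula. As a minimal sketch, assume the form commonly used in the risk-averting error literature, NRAE = (1/λ) ln((1/K) Σ exp(λ e_k)), where e_k is the per-sample loss and λ is the RSI. The function name `nrae` and its arguments are hypothetical. The log-sum-exp rearrangement below illustrates the kind of numerical difficulty the normalization is meant to avoid, since exp(λ e_k) overflows quickly for a large RSI.

```python
import math


def nrae(errors, rsi):
    """Assumed NRAE form: (1/rsi) * ln(mean(exp(rsi * e_k))), computed stably."""
    if rsi <= 0:
        raise ValueError("rsi must be positive")
    # Factor out the largest error so every exponent is <= 0 and cannot overflow.
    largest = max(errors)
    total = sum(math.exp(rsi * (e - largest)) for e in errors)
    return largest + math.log(total / len(errors)) / rsi
```

Under this assumed form, a small RSI gives a value close to the ordinary mean loss, while a large RSI pushes the value toward the worst per-sample loss, which is consistent with a schedule that starts with a large RSI and gradually reduces it.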

Low-Order Model of Biological Neural Networks

Dec 12, 2020

Abstract: A biologically plausible low-order model (LOM) of biological neural networks is a recurrent hierarchical network of dendritic nodes/trees, spiking/nonspiking neurons, unsupervised/supervised covariance/accumulative learning mechanisms, feedback connections, and a scheme for maximal generalization. These component models are motivated and necessitated by the requirement that LOM learn and retrieve easily, without differentiation, optimization, or iteration, and that it cluster, detect, and recognize multiple/hierarchical corrupted, distorted, and occluded temporal and spatial patterns.

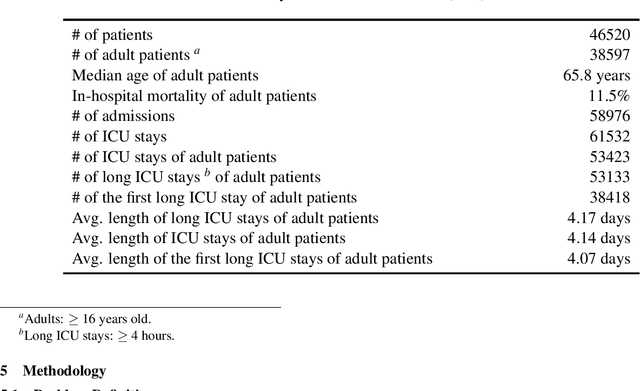

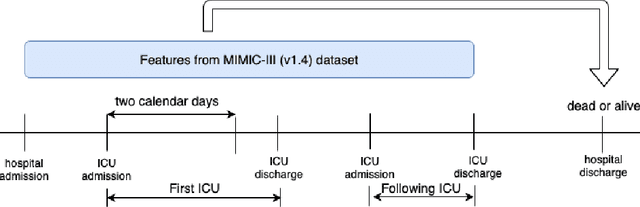

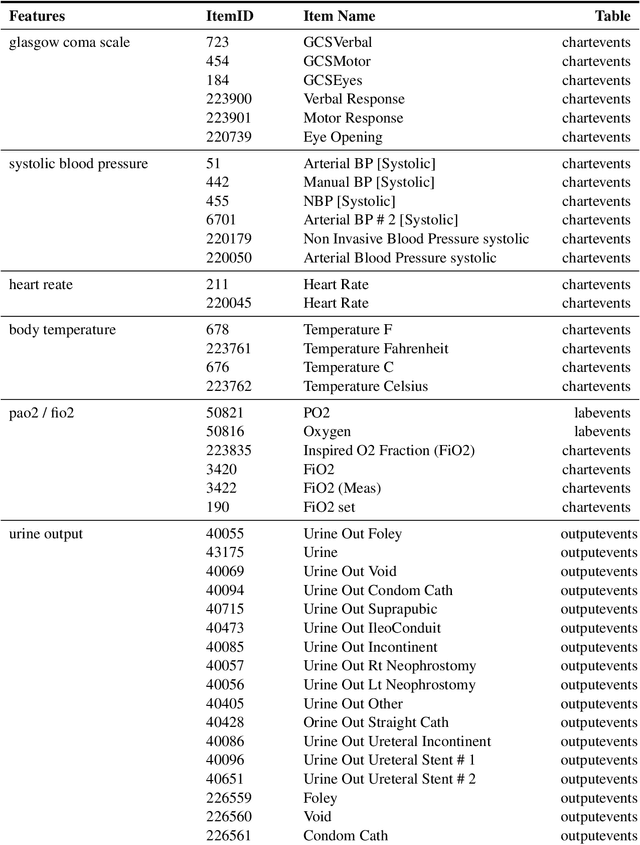

Building Deep Learning Models to Predict Mortality in ICU Patients

Dec 11, 2020

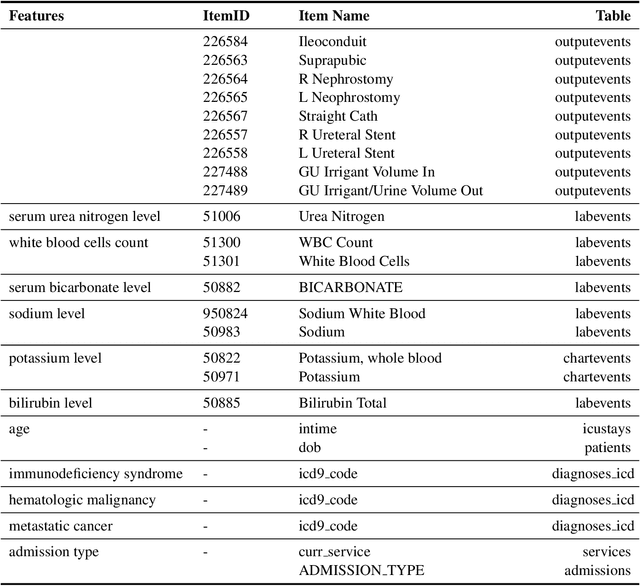

Abstract: Mortality prediction in intensive care units is considered one of the critical steps for efficiently treating patients in serious condition. As a result, various prediction models have been developed to address this problem based on modern electronic healthcare records. However, it becomes increasingly challenging to model such tasks as time series variables because some laboratory test results such as heart rate and blood pressure are sampled with inconsistent time frequencies. In this paper, we propose several deep learning models using the same features as the SAPS II score. To derive insight into the proposed models' performance, several experiments have been conducted on the well-known clinical dataset Medical Information Mart for Intensive Care III. The prediction results demonstrate the proposed models' capability in terms of precision, recall, F1 score, and area under the receiver operating characteristic curve.
