Hozaifa Kassab

Two-Stage Quranic QA via Ensemble Retrieval and Instruction-Tuned Answer Extraction

Aug 09, 2025

Abstract:Quranic Question Answering presents unique challenges due to the linguistic complexity of Classical Arabic and the semantic richness of religious texts. In this paper, we propose a novel two-stage framework that addresses both passage retrieval and answer extraction. For passage retrieval, we ensemble fine-tuned Arabic language models to achieve superior ranking performance. For answer extraction, we employ instruction-tuned large language models with few-shot prompting to overcome the limitations of fine-tuning on small datasets. Our approach achieves state-of-the-art results on the Quran QA 2023 Shared Task, with a MAP@10 of 0.3128 and MRR@10 of 0.5763 for retrieval, and a pAP@10 of 0.669 for extraction, substantially outperforming previous methods. These results demonstrate that combining model ensembling and instruction-tuned language models effectively addresses the challenges of low-resource question answering in specialized domains.
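The retrieval metrics quoted above (MAP@10 and MRR@10) can be illustrated with a minimal sketch. This is not the shared task's official scorer: the function names and passage IDs are hypothetical, and the normalization used for average precision (dividing by the smaller of the number of relevant passages and k) is one common convention that the official evaluation may not share.

```python
def reciprocal_rank_at_k(ranked, relevant, k=10):
    """Return 1/rank of the first relevant passage in the top k, else 0."""
    for rank, passage_id in enumerate(ranked[:k], start=1):
        if passage_id in relevant:
            return 1.0 / rank
    return 0.0


def average_precision_at_k(ranked, relevant, k=10):
    """Average the precision values at each rank where a relevant passage appears.

    Assumption: normalize by min(len(relevant), k); other conventions exist.
    """
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, passage_id in enumerate(ranked[:k], start=1):
        if passage_id in relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / min(len(relevant), k)


def mean_over_queries(metric, runs, k=10):
    """Average a per-query metric over (ranked, relevant) pairs to get MRR@k or MAP@k."""
    if not runs:
        return 0.0
    return sum(metric(ranked, relevant, k) for ranked, relevant in runs) / len(runs)
```

Passing `reciprocal_rank_at_k` to `mean_over_queries` gives MRR@10, and passing `average_precision_at_k` gives MAP@10 under the convention stated above.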

RIRO: Reshaping Inputs, Refining Outputs Unlocking the Potential of Large Language Models in Data-Scarce Contexts

Dec 15, 2024

Abstract:Large language models (LLMs) have significantly advanced natural language processing, excelling in areas like text generation, summarization, and question-answering. Despite their capabilities, these models face challenges when fine-tuned on small, domain-specific datasets, often struggling to generalize and deliver accurate results with unfamiliar inputs. To tackle this issue, we introduce RIRO, a novel two-layer architecture designed to improve performance in data-scarce environments. The first layer leverages advanced prompt engineering to reformulate inputs, ensuring better alignment with training data, while the second layer focuses on refining outputs to minimize inconsistencies. We fine-tune models like Phi-2, Falcon 7B, and Falcon 1B, with Phi-2 outperforming the others. Additionally, we introduce a benchmark using evaluation metrics such as cosine similarity, Levenshtein distance, BLEU score, ROUGE-1, ROUGE-2, and ROUGE-L. While these advancements improve performance, challenges like computational demands and overfitting persist, limiting the potential of LLMs in data-scarce, high-stakes environments such as healthcare, legal documentation, and software testing.
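Two of the evaluation metrics named above, Levenshtein distance and cosine similarity, are simple enough to sketch directly. The code below is a generic illustration, not the RIRO benchmark implementation: the function names are hypothetical, and how the paper tokenizes or embeds text before computing cosine similarity is not stated in the abstract.

```python
import math


def levenshtein(a, b):
    """Edit distance between two strings (insertions, deletions, substitutions)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            substitution_cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,                       # delete char_a
                current[j - 1] + 1,                    # insert char_b
                previous[j - 1] + substitution_cost,   # substitute or match
            ))
        previous = current
    return previous[-1]


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # assumption: treat a zero vector as having no similarity
    return dot / (norm_u * norm_v)
```

A lower Levenshtein distance and a higher cosine similarity both indicate that a generated output is closer to its reference.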

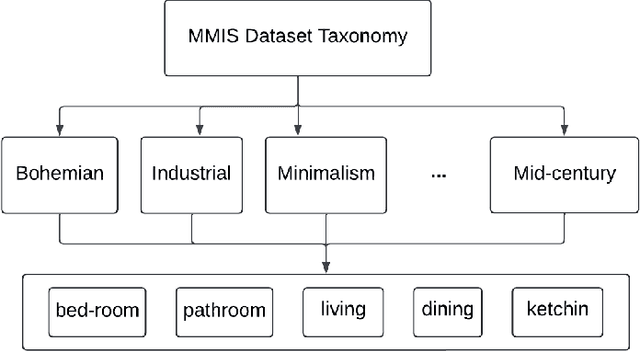

MMIS: Multimodal Dataset for Interior Scene Visual Generation and Recognition

Jul 08, 2024

Abstract:We introduce MMIS, a novel dataset designed to advance MultiModal Interior Scene generation and recognition. MMIS consists of nearly 160,000 images. Each image within the dataset is accompanied by its corresponding textual description and an audio recording of that description, providing rich and diverse sources of information for scene generation and recognition. MMIS encompasses a wide range of interior spaces, capturing various styles, layouts, and furnishings. To construct this dataset, we employed careful processes involving the collection of images, the generation of textual descriptions, and corresponding speech annotations. The presented dataset contributes to research in multi-modal representation learning tasks such as image generation, retrieval, captioning, and classification.

GCF: Graph Convolutional Networks for Facial Expression Recognition

Jul 02, 2024

Abstract:Facial Expression Recognition (FER) is vital for understanding interpersonal communication. However, existing classification methods often face challenges such as vulnerability to noise, imbalanced datasets, overfitting, and generalization issues. In this paper, we propose GCF, a novel approach that utilizes Graph Convolutional Networks for FER. GCF integrates Convolutional Neural Networks (CNNs) for feature extraction, using either custom architectures or pretrained models. The extracted visual features are then represented on a graph, enhancing local CNN features with global features via a Graph Convolutional Neural Network layer. We evaluate GCF on benchmark datasets including CK+, JAFFE, and FERG. The results show that GCF significantly improves performance over state-of-the-art methods. For example, GCF enhances the accuracy of ResNet18 from 92% to 98% on CK+, from 66% to 89% on JAFFE, and from 94% to 100% on FERG. Similarly, GCF improves the accuracy of VGG16 from 89% to 97% on CK+, from 72% to 92% on JAFFE, and from 96% to 99.49% on FERG. We provide a comprehensive analysis of our approach, demonstrating its effectiveness in capturing nuanced facial expressions. By integrating graph convolutions with CNNs, GCF significantly advances FER, offering improved accuracy and robustness in real-world applications.
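To make the idea of refining CNN features with a graph convolution more concrete, here is a minimal sketch of a single graph convolutional layer using the widely used symmetric-normalization propagation rule, ReLU(D^-1/2 (A + I) D^-1/2 H W). This is an assumption for illustration only: the abstract does not specify how GCF builds its graph or which propagation rule it uses, and the toy adjacency matrix, features, and weights in the usage note are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_layer(adjacency, features, weight):
    """Apply one graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adjacency)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    degree = [sum(row) for row in a_hat]
    # Symmetric normalization by the square roots of the node degrees.
    normalized = [[a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
                  for i in range(n)]
    aggregated = matmul(normalized, features)   # mix features across neighbors
    projected = matmul(aggregated, weight)      # learned linear projection
    return [[max(0.0, value) for value in row] for row in projected]
```

For example, with two connected nodes (`adjacency = [[0.0, 1.0], [1.0, 0.0]]`), one-hot features, and an identity weight matrix, each node's output is the average of both nodes' features, which shows how the layer blends local features with information from neighbors.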
