Hojjat Rakhshani

On the performance of deep learning for numerical optimization: an application to protein structure prediction

Dec 17, 2020

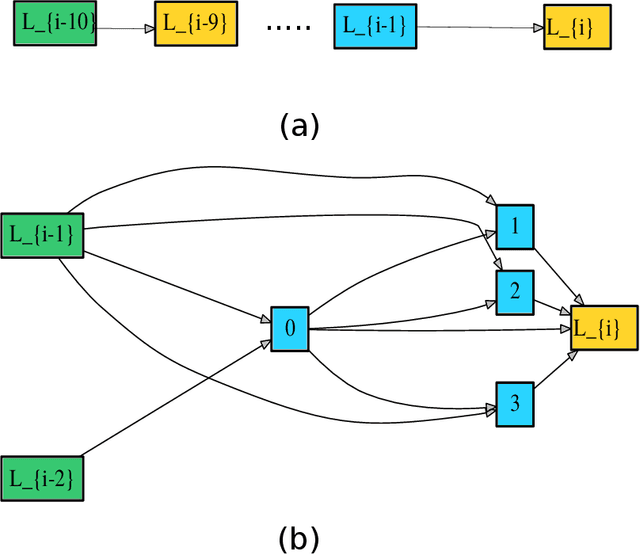

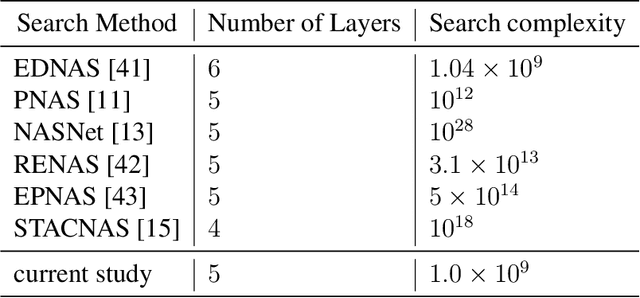

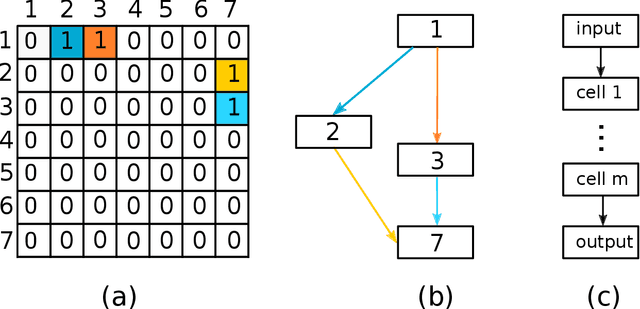

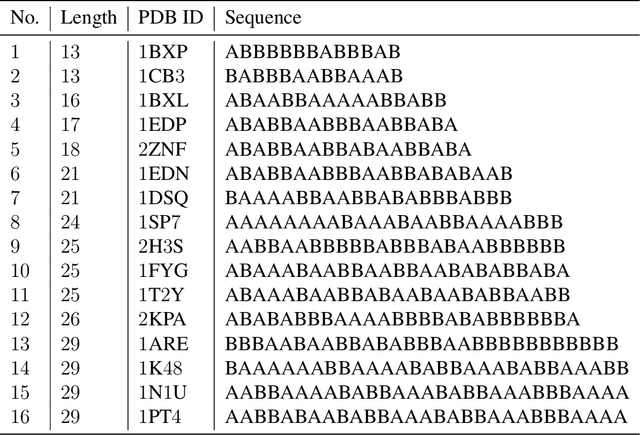

Abstract: Deep neural networks have recently drawn considerable attention as a means of building and evaluating artificial learning models for perceptual tasks. Here, we present a study on the performance of deep learning models on global optimization problems. The proposed approach adopts the idea of neural architecture search (NAS) to generate efficient neural networks for solving the problem at hand. The space of network architectures is represented as a directed acyclic graph, and the goal is to find the architecture that best optimizes the objective function of a new, previously unseen task. Rather than proposing very large networks that impose a heavy GPU computational burden and long training times, we focus on searching for lightweight implementations. The performance of NAS is first analyzed through empirical experiments on the CEC 2017 benchmark suite. Thereafter, it is applied to a set of protein structure prediction (PSP) problems. The experiments reveal that the generated learning models can achieve competitive results compared to hand-designed algorithms, given a sufficient computational budget.
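To make the idea of a DAG-shaped architecture space more concrete, here is a minimal sketch in Python. It assumes an ENAS-style encoding in which each node reads from one earlier node and applies one operation; the operation names in `OPS`, the helper names, and the use of plain random search are all hypothetical stand-ins, not the search strategy or operation set used in the paper. The `toy_cost` score is only a placeholder that favours lightweight graphs; a real run would score each architecture by how well it optimizes the target objective.

```python
import random
from typing import Callable, List, Optional, Tuple

# Hypothetical candidate operations; the paper's actual operation set is not given here.
OPS = ["linear", "relu", "tanh", "identity"]

# Node 0 is the input. Entry i of the list describes node i + 1 as
# (index of the earlier node it reads from, operation it applies).
Architecture = List[Tuple[int, str]]


def sample_architecture(num_nodes: int, rng: random.Random) -> Architecture:
    """Sample a DAG: each new node may only read from the input or an earlier node."""
    arch: Architecture = []
    for i in range(1, num_nodes + 1):
        src = rng.randrange(0, i)  # edges only point forward, so the graph stays acyclic
        arch.append((src, rng.choice(OPS)))
    return arch


def is_acyclic(arch: Architecture) -> bool:
    """Check that node i + 1 always reads from a node with a smaller index."""
    return all(src < i + 1 for i, (src, _) in enumerate(arch))


def random_search(score: Callable[[Architecture], float], num_nodes: int,
                  trials: int, seed: int = 0) -> Tuple[Optional[Architecture], float]:
    """Stand-in search strategy: sample `trials` architectures, keep the lowest score."""
    rng = random.Random(seed)
    best_arch: Optional[Architecture] = None
    best_score = float("inf")
    for _ in range(trials):
        arch = sample_architecture(num_nodes, rng)
        s = score(arch)
        if s < best_score:
            best_arch, best_score = arch, s
    return best_arch, best_score


def toy_cost(arch: Architecture) -> float:
    """Placeholder score: number of parameterised ("linear") nodes, favouring small graphs."""
    return float(sum(op == "linear" for _, op in arch))


best, cost = random_search(toy_cost, num_nodes=5, trials=200)
```

Restricting each node's input to an earlier index is what keeps every sampled graph acyclic without a separate cycle check; `is_acyclic` just makes that invariant explicit.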

From feature selection to continuous optimization

Nov 01, 2019

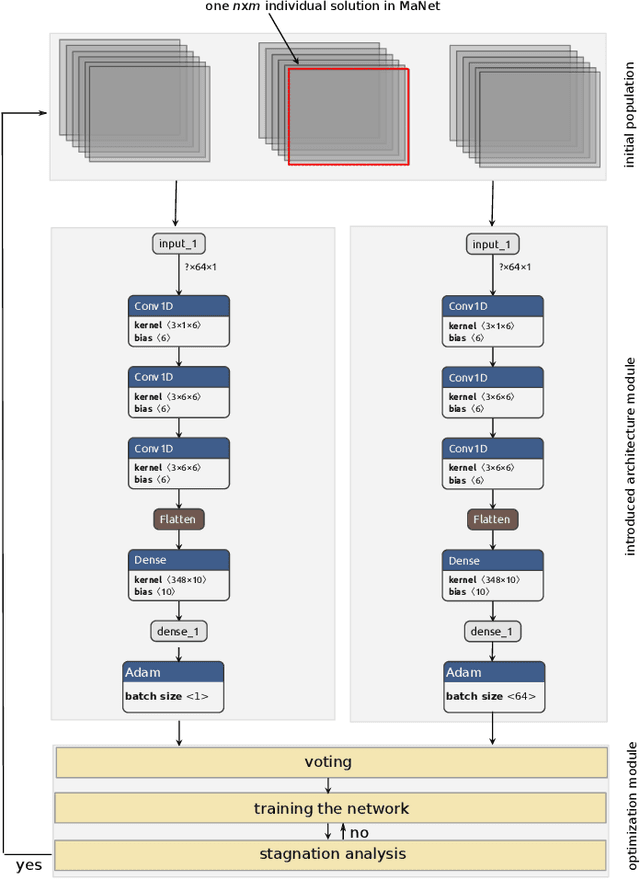

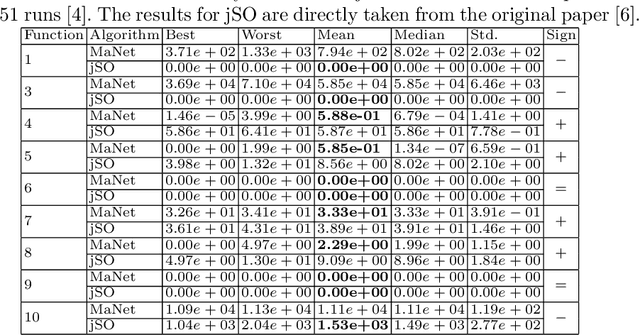

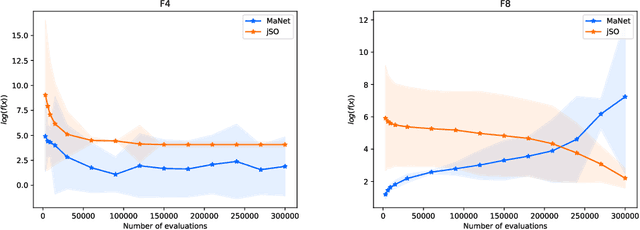

Abstract: Metaheuristic algorithms (MAs) have seen unprecedented growth thanks to their successful applications in fields including engineering and health sciences. In this work, we investigate the use of a deep learning (DL) model as an alternative to MAs for continuous optimization. The proposed method, called MaNet, is motivated by the fact that most DL models must themselves solve massive, difficult optimization problems involving millions of parameters. Feature selection is the main concept adopted in MaNet: it helps the algorithm skip irrelevant or partially relevant evolutionary information and use the information that contributes most to overall performance. The introduced model is applied to several unimodal and multimodal continuous problems. The experiments indicate that MaNet yields results competitive with one of the best hand-designed algorithms for these problems in terms of solution accuracy and scalability.
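As a rough illustration of what "feature selection over evolutionary information" can mean, the sketch below applies a generic filter method: it treats the decision variables of a population as features and keeps the `k` variables whose values correlate most strongly (in absolute Pearson correlation) with fitness. Treating decision variables as the features, and using correlation as the relevance score, are assumptions made for this example only; the abstract does not specify MaNet's actual selection mechanism, and all names here are hypothetical.

```python
import random
from typing import List, Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5


def select_features(population: List[List[float]], fitness: List[float], k: int) -> List[int]:
    """Rank decision variables by |correlation| with fitness; return the top-k indices, sorted."""
    dims = len(population[0])
    scores = []
    for j in range(dims):
        column = [ind[j] for ind in population]  # values of variable j across the population
        scores.append((abs(pearson(column, fitness)), j))
    scores.sort(reverse=True)  # most relevant variables first
    return sorted(j for _, j in scores[:k])


# Hypothetical population: 200 individuals in 10 dimensions, where fitness
# depends only on variables 0 and 3; the remaining 8 variables are irrelevant.
rng = random.Random(1)
pop = [[rng.uniform(-5.0, 5.0) for _ in range(10)] for _ in range(200)]
fit = [5.0 * ind[0] - 4.0 * ind[3] for ind in pop]
selected = select_features(pop, fit, k=2)
```

A filter method like this is cheap because it scores each variable independently; it cannot detect variables that matter only through interactions, which is one reason learned selection schemes are attractive.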

Automatic hyperparameter selection in Autodock

Dec 02, 2018

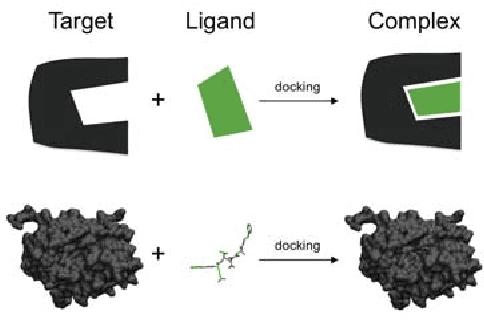

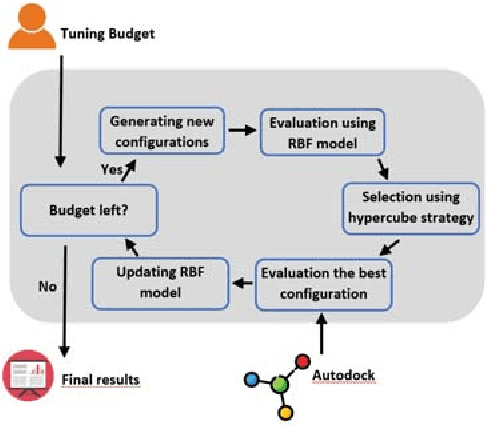

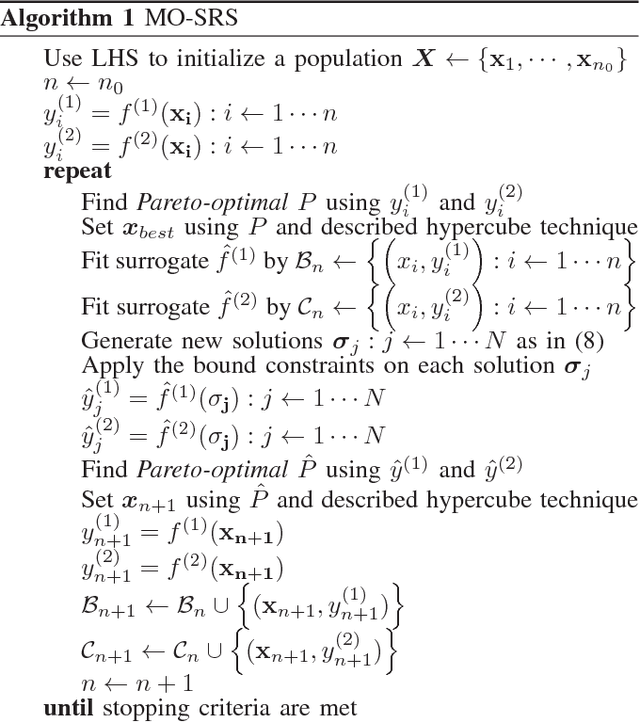

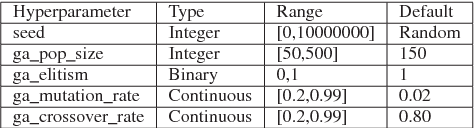

Abstract: Autodock is a widely used molecular modeling tool that predicts how small molecules bind to a receptor of known 3D structure. The current version of AutoDock uses metaheuristic algorithms in combination with local search methods to perform the conformational search. Appropriate hyperparameter settings for these algorithms are important, particularly for novice users, who often find it hard to identify the best configuration. In this work, we design a surrogate-based multi-objective algorithm that helps such users by automatically tuning hyperparameter settings. The proposed method iteratively uses a radial basis function model and non-dominated sorting to evaluate the sampled configurations during the search phase. Our experimental results with Autodock show that the introduced component is practical and effective.
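The two building blocks named in this abstract, a radial basis function (RBF) surrogate and non-dominated sorting, can be sketched in plain Python as follows. This is a minimal illustration assuming a Gaussian kernel with a fixed shape parameter `eps`, one interpolating surrogate per objective, and minimisation of every objective; the class and function names, the kernel choice, and the toy configuration data are hypothetical and not taken from the paper or from AutoDock.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def gaussian(r: float, eps: float = 1.0) -> float:
    """Gaussian radial basis function phi(r) = exp(-(eps * r)^2)."""
    return math.exp(-(eps * r) ** 2)


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting (inputs are copied)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


class RBFSurrogate:
    """Interpolating model s(x) = sum_i w_i * phi(||x - x_i||), fitted to sampled points."""

    def __init__(self, xs: List[Point], ys: List[float], eps: float = 1.0):
        self.xs, self.eps = xs, eps
        gram = [[gaussian(math.dist(p, q), eps) for q in xs] for p in xs]
        self.w = solve(gram, ys)  # weights make s(x_i) == y_i at every sample

    def predict(self, x: Point) -> float:
        return sum(w * gaussian(math.dist(x, p), self.eps) for w, p in zip(self.w, self.xs))


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b in every objective and strictly better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_sort(objs: List[Point]) -> List[List[int]]:
    """Peel off successive Pareto fronts; returns index lists, best front first."""
    remaining = set(range(len(objs)))
    fronts = []
    while remaining:
        front = [i for i in remaining
                 if not any(dominates(objs[j], objs[i]) for j in remaining if j != i)]
        fronts.append(sorted(front))
        remaining -= set(front)
    return fronts


# Hypothetical data: 1-D hyperparameter settings, each with two measured objectives.
configs = [[0.0], [1.0], [2.0], [3.0]]
obj_a = [3.0, 1.0, 2.0, 4.0]
obj_b = [1.0, 2.0, 0.5, 3.0]
models = [RBFSurrogate(configs, obj_a), RBFSurrogate(configs, obj_b)]
candidates = [[0.5], [1.5], [2.5]]
predicted = [[m.predict(c) for m in models] for c in candidates]
fronts = non_dominated_sort(predicted)  # rank unseen candidates by predicted objectives
```

In a loop of this kind, the surrogates stand in for expensive docking runs: candidates are ranked on predicted objectives, only the most promising ones are evaluated for real, and the new results are added to the training set before refitting.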
