Hiroya Makino

Zero-Shot Visual Concept Blending Without Text Guidance

Mar 27, 2025

Abstract: We propose a novel, zero-shot image generation technique called "Visual Concept Blending" that provides fine-grained control over which features from multiple reference images are transferred to a source image. If only a single reference image is available, it is difficult to isolate which specific elements should be transferred. However, using multiple reference images, the proposed approach distinguishes between common and unique features by selectively incorporating them into a generated output. By operating within a partially disentangled Contrastive Language-Image Pre-training (CLIP) embedding space (from IP-Adapter), our method enables the flexible transfer of texture, shape, motion, style, and more abstract conceptual transformations without requiring additional training or text prompts. We demonstrate its effectiveness across a diverse range of tasks, including style transfer, form metamorphosis, and conceptual transformations, showing how subtle or abstract attributes (e.g., brushstroke style, aerodynamic lines, and dynamism) can be seamlessly combined into a new image. In a user study, participants accurately recognized which features were intended to be transferred. Its simplicity, flexibility, and high-level control make Visual Concept Blending valuable for creative fields such as art, design, and content creation, where combining specific visual qualities from multiple inspirations is crucial.
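The idea of separating common from unique features across several reference embeddings can be illustrated with plain vector arithmetic. The sketch below is a toy under stated assumptions, not the paper's procedure: the abstract does not specify the operations used, so the mean-based split, the additive blend, and the names `split_common_unique` and `blend` are all hypothetical, and small NumPy vectors stand in for real CLIP embeddings.

```python
import numpy as np


def split_common_unique(reference_embeddings):
    """Split reference embeddings into a shared part and per-image residuals.

    Assumption: the "common" features are approximated by the mean of the
    reference embeddings, and the "unique" features of each reference by its
    difference from that mean. This is an illustrative choice only.
    """
    refs = np.asarray(reference_embeddings, dtype=float)
    common = refs.mean(axis=0)
    unique = refs - common
    return common, unique


def blend(source_embedding, component, weight=1.0):
    """Add a weighted feature component to the source embedding (hypothetical)."""
    return np.asarray(source_embedding, dtype=float) + weight * component


# Toy 3-dimensional "embeddings" for two reference images and one source image.
references = [[1.0, 0.0, 2.0], [1.0, 2.0, 0.0]]
source = [0.0, 0.0, 0.0]

common, unique = split_common_unique(references)
with_common = blend(source, common, weight=0.5)      # transfer what the references share
with_unique_0 = blend(source, unique[0], weight=1.0)  # transfer what only reference 0 has
```

In a real pipeline the blended vector would be passed to an image-conditioned generator; that step is omitted here because it depends on model details the abstract does not give.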

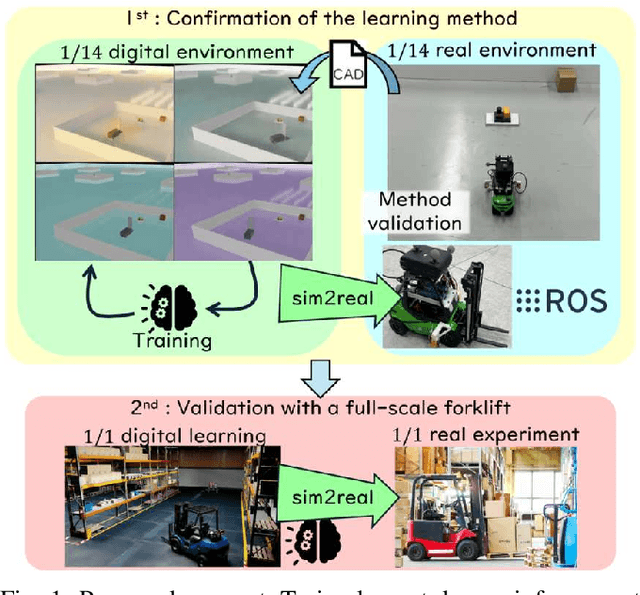

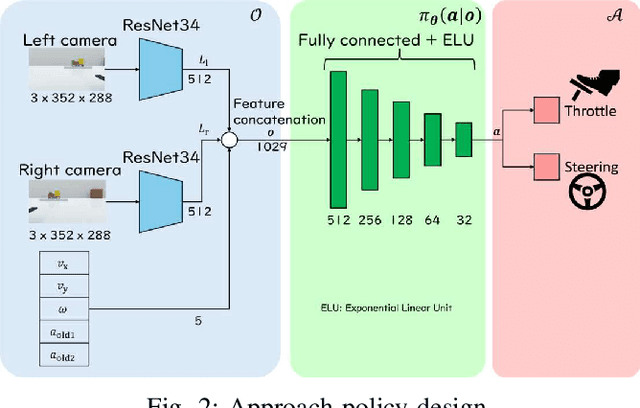

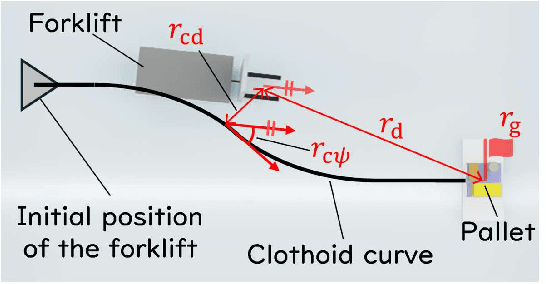

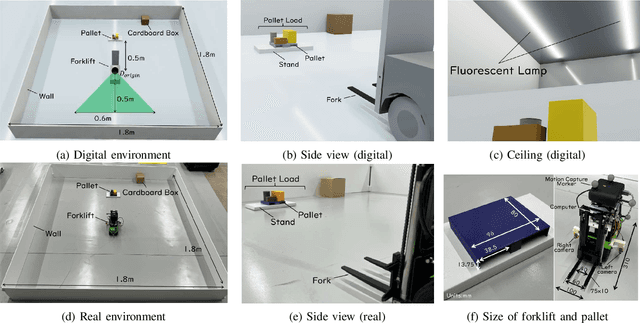

Visual-Based Forklift Learning System Enabling Zero-Shot Sim2Real Without Real-World Data

Dec 16, 2024

Abstract: Forklifts are used extensively in various industrial settings and are in high demand for automation. In particular, counterbalance forklifts are highly versatile and employed in diverse scenarios. However, efforts to automate these processes are lacking, primarily owing to the absence of a safe and performance-verifiable development environment. This study proposes a learning system that combines a photorealistic digital learning environment with a 1/14-scale robotic forklift environment to address this challenge. Inspired by the training-based learning approach adopted by forklift operators, we employ an end-to-end vision-based deep reinforcement learning approach. The learning is conducted in a digitalized environment created from CAD data, making it safe and eliminating the need for real-world data. In addition, we safely validate the method in a physical setting utilizing a 1/14-scale robotic forklift with a configuration similar to that of a real forklift. We achieved a 60% success rate in pallet loading tasks in real experiments using a robotic forklift. Our approach demonstrates zero-shot sim2real with a simple method that does not require heuristic additions. This learning-based approach is considered a first step towards the automation of counterbalance forklifts.

Online Multi-Agent Pickup and Delivery with Task Deadlines

Mar 19, 2024

Abstract: Managing delivery deadlines in automated warehouses and factories is crucial for maintaining customer satisfaction and ensuring seamless production. This study introduces the problem of online multi-agent pickup and delivery with task deadlines (MAPD-D), which is an advanced variant of the online MAPD problem incorporating delivery deadlines. MAPD-D presents a dynamic deadline-driven approach that includes task deadlines, with tasks being added at any time (online), thus challenging conventional MAPD frameworks. To tackle MAPD-D, we propose a novel algorithm named deadline-aware token passing (D-TP). The D-TP algorithm is designed to calculate pickup deadlines and assign tasks while balancing execution cost and deadline proximity. Additionally, we introduce the D-TP with task swaps (D-TPTS) method to further reduce task tardiness, enhancing flexibility and efficiency via task-swapping strategies. Numerical experiments were conducted in simulated warehouse environments to showcase the effectiveness of the proposed methods. Both D-TP and D-TPTS demonstrate significant reductions in task tardiness compared to existing methods, thereby contributing to efficient operations in automated warehouses and factories with delivery deadlines.
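One way to picture "balancing execution cost and deadline proximity" is a weighted score over candidate tasks. The following is a minimal sketch under assumptions, not the D-TP algorithm itself: the abstract gives no formulas, so the Manhattan travel time, the derivation of the pickup deadline as delivery deadline minus pickup-to-delivery travel time, the weight `alpha`, and every name in the code are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    pickup: tuple[int, int]
    delivery: tuple[int, int]
    deadline: int  # delivery deadline, in timesteps


def manhattan(a, b):
    """Travel time on an obstacle-free grid (an assumption for this sketch)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def pickup_deadline(task):
    """Latest pickup time that still allows on-time delivery (assumed formula)."""
    return task.deadline - manhattan(task.pickup, task.delivery)


def choose_task(agent_pos, now, tasks, alpha=0.5):
    """Pick the task with the lowest weighted sum of travel cost and slack.

    alpha = 1.0 considers only the cost of reaching the pickup location;
    alpha = 0.0 considers only how close the pickup deadline is.
    """
    def score(task):
        cost = manhattan(agent_pos, task.pickup)
        slack = pickup_deadline(task) - now
        return alpha * cost + (1 - alpha) * slack

    return min(tasks, key=score)


tasks = [
    Task("A", pickup=(0, 1), delivery=(0, 5), deadline=50),  # near, relaxed deadline
    Task("B", pickup=(3, 0), delivery=(3, 2), deadline=8),   # farther, urgent deadline
]
balanced = choose_task((0, 0), now=0, tasks=tasks, alpha=0.5)
cost_only = choose_task((0, 0), now=0, tasks=tasks, alpha=1.0)
```

With equal weighting the urgent task B is chosen despite being farther away, while a purely cost-driven choice picks the nearby task A, which is the kind of trade-off the abstract describes.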
