Hiroshi Mamiya

Exploring Bias and Prediction Metrics to Characterise the Fairness of Machine Learning for Equity-Centered Public Health Decision-Making: A Narrative Review

Aug 23, 2024

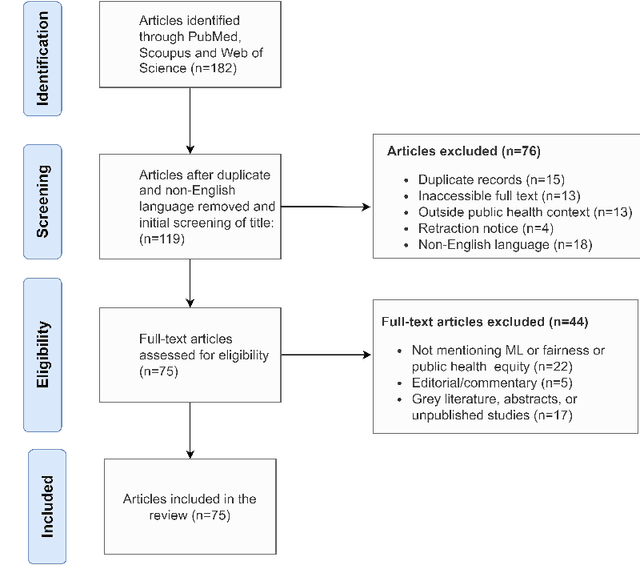

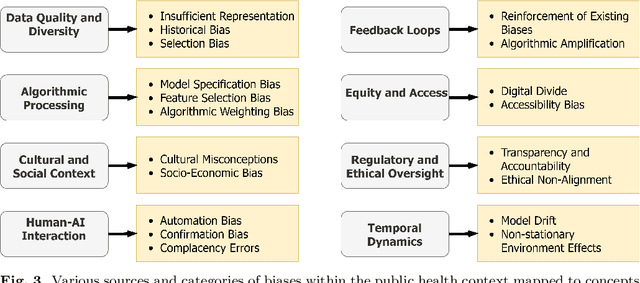

Abstract: Background: The rapid advancement of Machine Learning (ML) presents novel opportunities to enhance public health research, surveillance, and decision-making. However, there is no comprehensive understanding of algorithmic bias (systematic errors in predicted population health outcomes) resulting from the public health application of ML. The objective of this narrative review is to explore the types of bias generated by ML and the quantitative metrics used to assess these biases. Methods: We searched PubMed, MEDLINE, IEEE (Institute of Electrical and Electronics Engineers), ACM (Association for Computing Machinery) Digital Library, Science Direct, and Springer Nature. We used keywords to identify studies, published in English between 2008 and 2023 inclusive, that describe types of bias and metrics to measure them in the domain of ML and public and population health. Results: A total of 72 articles met the inclusion criteria. Our review identified the commonly described types of bias and the quantitative metrics used to assess them from an equity perspective. Conclusion: The review will help formalize the evaluation framework for ML in public health from an equity perspective.
