Hiromi Narimatsu

Empirical Analysis of Training Strategies of Transformer-based Japanese Chit-chat Systems

Sep 11, 2021

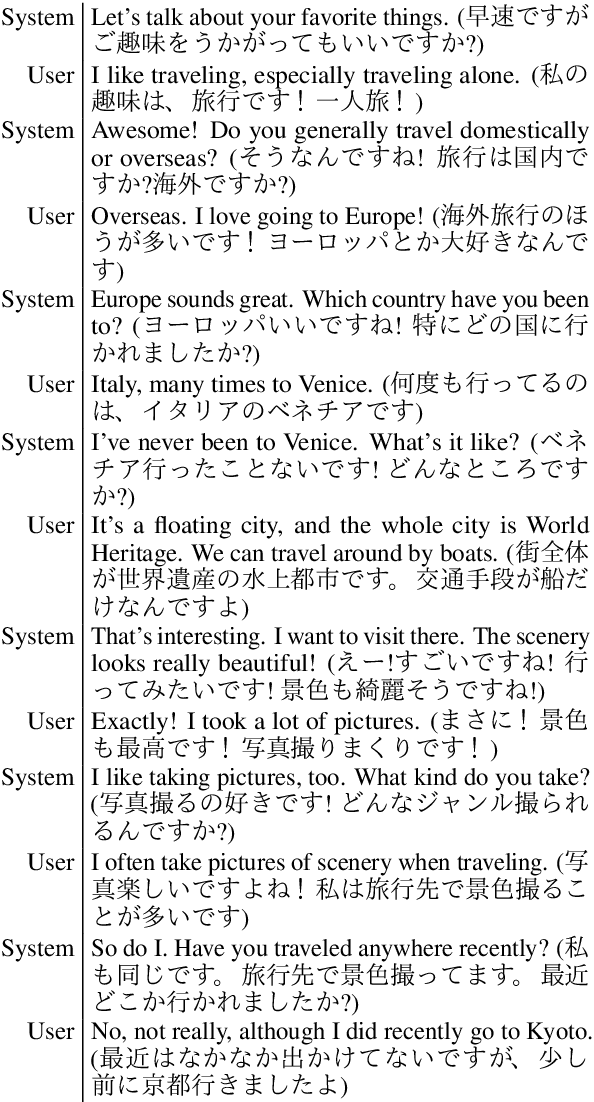

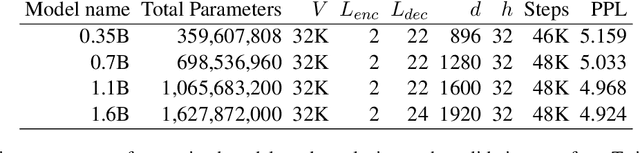

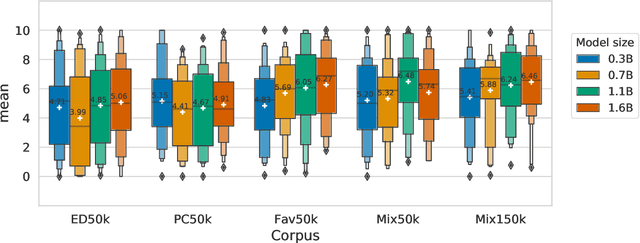

Abstract: In recent years, several high-performance conversational systems have been proposed based on the Transformer encoder-decoder model. Although previous studies analyzed the effects of the model parameters and the decoding method on subjective dialogue evaluations using overall metrics, they did not analyze how differences in fine-tuning datasets affect users' detailed impressions. In addition, the Transformer-based approach has only been verified for English, not for languages such as Japanese that have a large inter-language distance from English. In this study, we develop large-scale Transformer-based Japanese dialogue models and Japanese chit-chat datasets to examine the effectiveness of the Transformer-based approach for building chit-chat dialogue systems. We evaluated and analyzed human impressions of the dialogues across different fine-tuning datasets, model parameters, and the use of additional information.

State Duration and Interval Modeling in Hidden Semi-Markov Model for Sequential Data Analysis

Aug 24, 2016

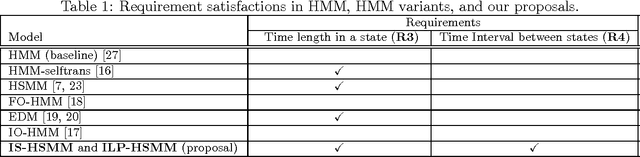

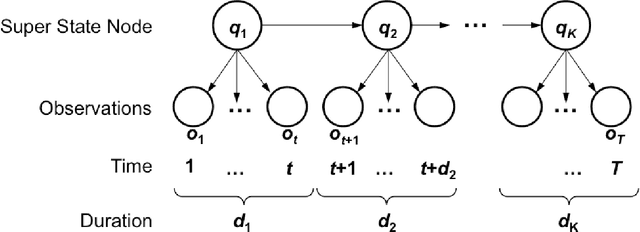

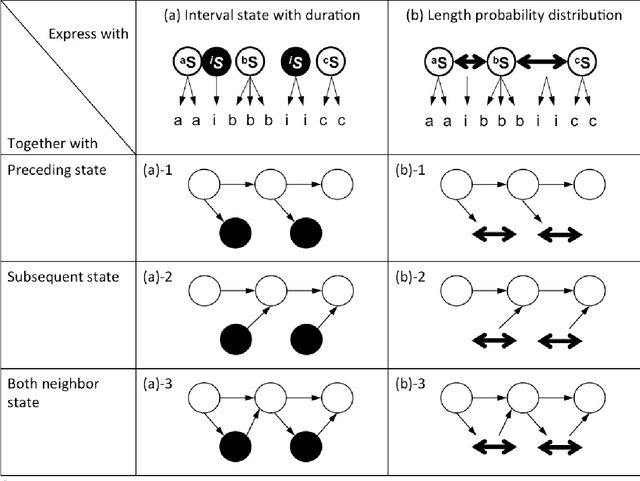

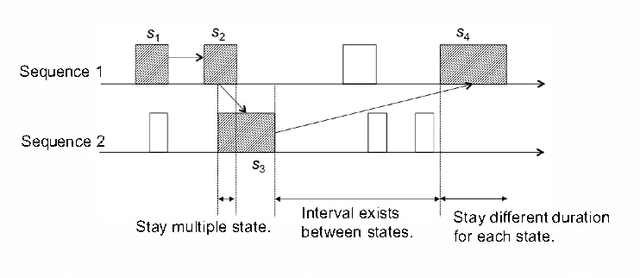

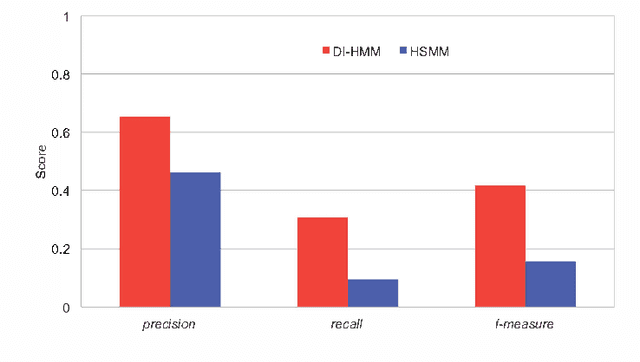

Abstract: Sequential data modeling and analysis have become indispensable tools for analyzing sequential data such as time-series data, as ever larger amounts of sensed event data have become available. These methods capture the sequential structure of the data of interest, such as input-output relationships and correlations among datasets. However, since most studies in this area are specialized for, or limited to, their respective applications, a rigorous requirement analysis of such a model has not been carried out from a general point of view. Hence, we examine the structure of sequential data in particular, and identify the necessity of both "state duration" and "state interval" of events for an efficient and rich representation of sequential data. Focusing on the hidden semi-Markov model (HSMM), which represents state duration inside the model, we add the capability to represent the state interval of events to the HSMM. To this end, we propose two extended models. One is the interval state hidden semi-Markov model (IS-HSMM), which expresses the length of a state interval with a special state node designated as the "interval state node". The other is the interval length probability hidden semi-Markov model (ILP-HSMM), which represents the length of a state interval with a new probabilistic parameter, the "interval length probability." Exhaustive simulations show that the proposed models outperform the HSMM. To the best of our knowledge, our proposed models are the first extensions of the HMM to support state interval representation as well as state duration representation.
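To make the roles of "state duration" and "interval length probability" more concrete, here is a minimal Python sketch of how one fully labelled segmentation might be scored under an ILP-HSMM-style model. It is not the authors' formulation. It assumes discrete observations, categorical duration and interval-length distributions, and an interval length that depends only on the state that just ended. It also assumes that symbols are emitted only while an event state is active, so each interval contributes only its length probability. The names `segmentation_log_prob`, `duration`, and `interval` are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical ILP-HSMM-style sketch; not the authors' parameterization.
# A segment is (state, symbols emitted while the state is active,
# interval length before the next segment). The last segment's interval is ignored.
Segment = Tuple[int, Sequence[int], int]


def _log(p: float) -> float:
    """Natural log that maps zero probability to -inf instead of raising."""
    return math.log(p) if p > 0.0 else -math.inf


def segmentation_log_prob(
    segments: List[Segment],
    init: Sequence[float],                 # init[k]: P(first state is k)
    trans: Sequence[Sequence[float]],      # trans[i][j]: P(next state j | state i)
    duration: Sequence[Dict[int, float]],  # duration[k][d]: P(state k lasts d steps)
    interval: Sequence[Dict[int, float]],  # interval[k][g]: P(gap of g steps after state k), assumed
    emit: Sequence[Sequence[float]],       # emit[k][o]: P(symbol o | state k)
) -> float:
    """Joint log-probability of a fully labelled segmentation."""
    if not segments:
        raise ValueError("at least one segment is required")
    logp = _log(init[segments[0][0]])
    for idx, (state, obs, gap) in enumerate(segments):
        # Explicit state duration, as in an HSMM: the segment length is scored directly.
        logp += _log(duration[state].get(len(obs), 0.0))
        logp += sum(_log(emit[state][o]) for o in obs)
        if idx + 1 < len(segments):
            next_state = segments[idx + 1][0]
            # Assumed interval-length term: the gap before the next event.
            logp += _log(interval[state].get(gap, 0.0))
            logp += _log(trans[state][next_state])
    return logp


if __name__ == "__main__":
    init = [1.0, 0.0]
    trans = [[0.0, 1.0], [1.0, 0.0]]
    duration = [{1: 0.5, 2: 0.5}, {1: 1.0}]
    interval = [{0: 0.5, 3: 0.5}, {0: 1.0}]
    emit = [[0.5, 0.5], [0.25, 0.75]]
    segments = [(0, [0, 1], 3), (1, [1], 0)]
    lp = segmentation_log_prob(segments, init, trans, duration, interval, emit)
    print(math.exp(lp))  # approximately 0.046875, i.e. 0.75 / 16
```

Scoring one known segmentation is only a building block. Inferring a hidden segmentation would require summing or maximizing such terms over all candidate durations and intervals, for example with a forward or Viterbi-style recursion. That step is not shown here.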

Duration and Interval Hidden Markov Model for Sequential Data Analysis

Aug 20, 2015

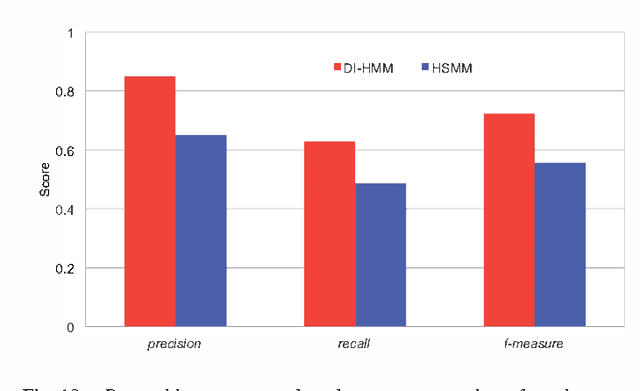

Abstract: Analysis of sequential event data has been recognized as one of the essential tools in the data modeling and analysis field. In this paper, after examining its technical requirements and the issues involved in modeling complex but practical situations, we propose a new sequential data model, dubbed the Duration and Interval Hidden Markov Model (DI-HMM), that efficiently represents the "state duration" and "state interval" of data events. This capability plays an important role in representing practical time-series data and, in turn, enables efficient and flexible sequential data retrieval. Numerical experiments on synthetic and real data demonstrate the efficiency and accuracy of the proposed DI-HMM.
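DI-HMM is built around two quantities per event: state duration and state interval. The abstract does not describe how these are obtained from raw data. The following Python sketch therefore illustrates only the quantities themselves, not the DI-HMM model or its training procedure. It assumes events arrive as labelled, non-overlapping (start, end) time spans. `Event` and `durations_and_intervals` are hypothetical names.

```python
from typing import List, NamedTuple, Tuple


class Event(NamedTuple):
    """A labelled event occupying the time span [start, end] (hypothetical input format)."""
    label: str
    start: float
    end: float


def durations_and_intervals(events: List[Event]) -> Tuple[List[float], List[float]]:
    """Return each event's duration and the gap between consecutive events.

    Events are sorted by start time; overlapping events are rejected because
    they would produce negative intervals.
    """
    ordered = sorted(events, key=lambda e: e.start)
    durations = []
    for e in ordered:
        if e.end < e.start:
            raise ValueError(f"event {e.label!r} ends before it starts")
        durations.append(e.end - e.start)  # state duration
    intervals = []
    for cur, nxt in zip(ordered, ordered[1:]):
        gap = nxt.start - cur.end  # state interval between consecutive events
        if gap < 0:
            raise ValueError(f"events {cur.label!r} and {nxt.label!r} overlap")
        intervals.append(gap)
    return durations, intervals


if __name__ == "__main__":
    events = [Event("walk", 0.0, 5.0), Event("rest", 8.0, 10.0), Event("walk", 10.0, 14.0)]
    print(durations_and_intervals(events))  # ([5.0, 2.0, 4.0], [3.0, 0.0])
```

These duration and interval values are the kind of information a duration- and interval-aware model is meant to capture. A plain HMM fitted only to the label sequence `walk, rest, walk` would discard both.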
