Hiroaki Inaba

Interpretable collaborative data analysis on distributed data

Nov 09, 2020

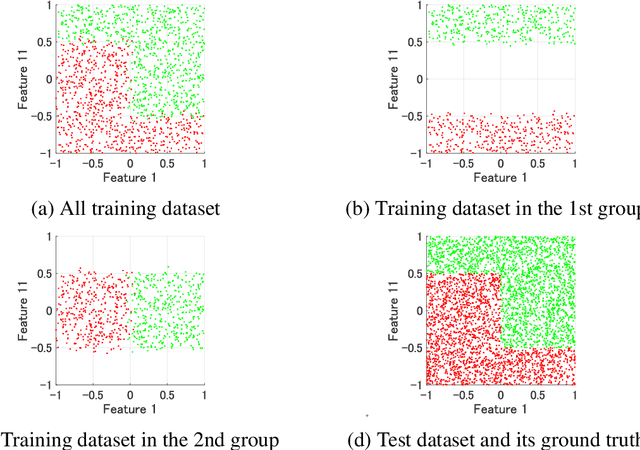

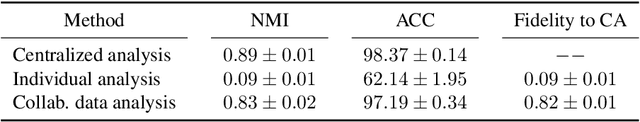

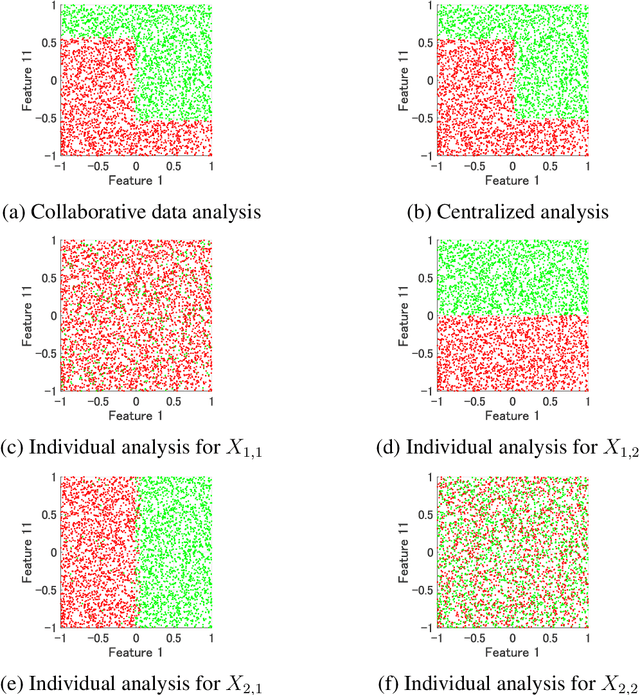

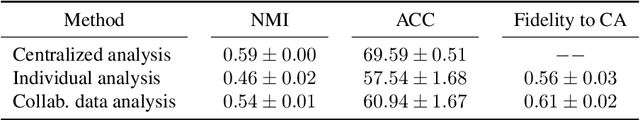

Abstract: This paper proposes an interpretable, non-model-sharing collaborative data analysis method as a form of federated learning, an emerging technology for analyzing distributed data. Analyzing distributed data is essential in many applications, such as medical, financial, and manufacturing data analysis, due to privacy and confidentiality concerns. In addition, the interpretability of the obtained model plays an important role in practical applications of federated learning systems. By centralizing intermediate representations, which each party constructs individually, the proposed method obtains an interpretable model and achieves collaborative analysis without revealing the individual data or the learning models distributed over the local parties. Numerical experiments indicate that the proposed method achieves better recognition performance than individual analysis on artificial and real-world problems.
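The idea of centralizing intermediate representations can be illustrated with a minimal sketch. It is not the paper's algorithm: the abstract does not specify how representations are built or combined, so the PCA-like private maps, the shared `anchor` dataset, the least-squares alignment, and all function and variable names below are assumptions made for illustration. The sketch also does not address the interpretability component.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_party_map(X_local, dim):
    """Private linear map for one party (assumed: a PCA-like projection)."""
    mean = X_local.mean(axis=0)
    _, _, vt = np.linalg.svd(X_local - mean, full_matrices=False)
    return mean, vt[:dim].T  # shapes: (features,), (features, dim)

def apply_map(X, party_map):
    """Project data into a party's private intermediate representation."""
    mean, proj = party_map
    return (X - mean) @ proj

# Synthetic setup: two parties holding different samples of the same features.
n_features, dim = 10, 4
w_true = rng.normal(size=n_features)
X1 = rng.normal(size=(60, n_features))
X2 = rng.normal(size=(60, n_features))
y1 = (X1 @ w_true > 0).astype(float)
y2 = (X2 @ w_true > 0).astype(float)

# Assumption: a shareable dummy dataset that every party can project.
anchor = rng.normal(size=(200, n_features))

# Each party keeps its raw data and its map private, and sends only
# the intermediate representations of its data and of the anchor.
maps = [make_party_map(X1, dim), make_party_map(X2, dim)]
reps = [apply_map(X, m) for X, m in zip((X1, X2), maps)]
anchor_reps = [apply_map(anchor, m) for m in maps]

# Central side: align every party's representation to party 0's
# anchor representation with a least-squares linear transform.
target = anchor_reps[0]
aligned = []
for rep, a_rep in zip(reps, anchor_reps):
    G, *_ = np.linalg.lstsq(a_rep, target, rcond=None)
    aligned.append(rep @ G)

# Train one simple linear classifier on the combined representation.
Z = np.vstack(aligned)
y = np.concatenate([y1, y2])
Zb = np.hstack([Z, np.ones((len(Z), 1))])  # add a bias column
coef, *_ = np.linalg.lstsq(Zb, y, rcond=None)
train_acc = np.mean((Zb @ coef > 0.5) == y)
print(f"training accuracy on combined representation: {train_acc:.2f}")
```

In this sketch the central side only ever sees `reps` and `anchor_reps`, never `X1`, `X2`, or the private maps, which is the sense in which neither raw data nor local models are shared.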
