Hilel Hagai Diamandi

RevRIR: Joint Reverberant Speech and Room Impulse Response Embedding using Contrastive Learning with Application to Room Shape Classification

Jun 05, 2024

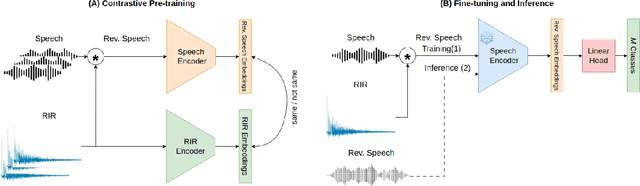

Abstract: This paper focuses on room fingerprinting, a task that involves analyzing an audio recording to determine the specific volume and shape of the room in which it was captured. While it is relatively straightforward to determine the basic room parameters from the room impulse response (RIR), doing so from a speech signal is a cumbersome task. To address this challenge, we introduce a dual-encoder architecture that enables the estimation of room parameters directly from speech utterances. During pre-training, one encoder receives the RIR while the other processes the reverberant speech signal. A contrastive loss function is employed to embed the speech and the acoustic response jointly. In the fine-tuning stage, the model is trained for the specific classification task. In the test phase, only the reverberant utterance is available, and its embedding is used for room shape classification. The proposed scheme is extensively evaluated using simulated acoustic environments.
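The abstract does not specify the exact form of the contrastive loss. The sketch below assumes a CLIP-style symmetric InfoNCE loss, where row i of a batch holds a matching (reverberant speech, RIR) pair and every other row in the batch acts as a negative. The function names, the cosine similarity, and the temperature of 0.07 are illustrative assumptions, not the paper's stated method. The two encoders are omitted, and their outputs are given directly as plain lists of floats.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit norm, so a dot product equals cosine similarity."""
    norm = max(math.sqrt(sum(x * x for x in v)), 1e-12)  # guard against zero vectors
    return [x / norm for x in v]


def log_softmax_at(logits: Vector, index: int) -> float:
    """Numerically stable log-softmax of `logits`, evaluated at one index."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return logits[index] - log_sum


def symmetric_info_nce(speech_emb: List[Vector], rir_emb: List[Vector],
                       temperature: float = 0.07) -> float:
    """Hypothetical CLIP-style loss between speech and RIR embeddings.

    speech_emb[i] and rir_emb[i] are assumed to come from the same room;
    all off-diagonal pairs in the batch are treated as negatives.
    """
    assert len(speech_emb) == len(rir_emb) > 0
    s = [l2_normalize(v) for v in speech_emb]
    r = [l2_normalize(v) for v in rir_emb]
    n = len(s)
    # logits[i][j] = cosine(speech_i, rir_j) / temperature
    logits = [[sum(a * b for a, b in zip(s[i], r[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Speech -> RIR: each speech embedding should select its own RIR (rows).
    loss_s2r = -sum(log_softmax_at(logits[i], i) for i in range(n)) / n
    # RIR -> speech: each RIR embedding should select its own utterance (columns).
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_r2s = -sum(log_softmax_at(columns[j], j) for j in range(n)) / n
    return 0.5 * (loss_s2r + loss_r2s)


if __name__ == "__main__":
    speech = [[1.0, 0.1], [0.0, 1.0]]
    rirs_matched = [[0.9, 0.0], [0.1, 1.0]]
    rirs_swapped = [rirs_matched[1], rirs_matched[0]]
    # Correctly paired embeddings should yield a lower loss than mismatched ones.
    print(symmetric_info_nce(speech, rirs_matched) < symmetric_info_nce(speech, rirs_swapped))
```

Minimizing such a loss pulls each utterance embedding toward the embedding of its own RIR. This is consistent with the test phase described above: only the speech branch would be evaluated, and its embedding would be passed to the room-shape classifier learned during fine-tuning.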
