Hideki Oki

Triplet Loss for Knowledge Distillation

Apr 17, 2020

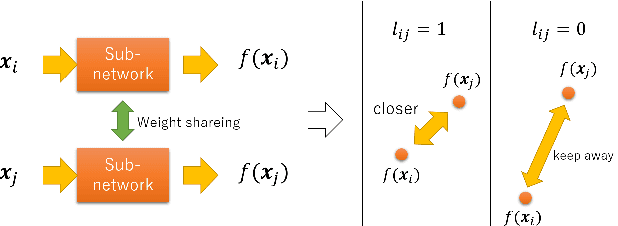

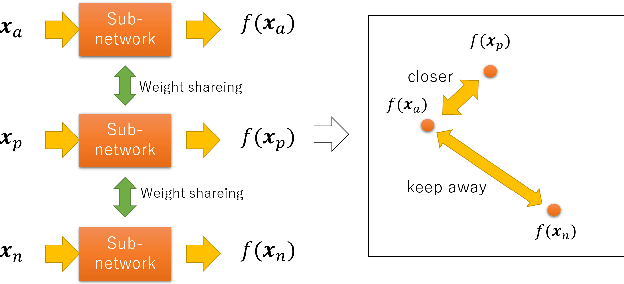

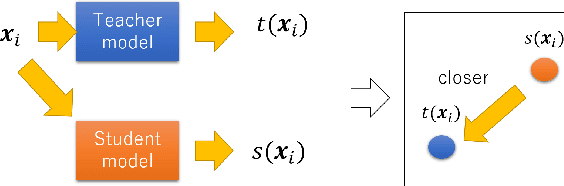

Abstract: In recent years, deep learning has spread rapidly, and deeper, larger models have been proposed. However, the computational cost grows enormously as models become larger. Various model compression techniques have been proposed to improve performance while reducing computational cost. One such technique is knowledge distillation (KD), which transfers the knowledge of deep or ensemble models with many parameters (the teacher model) to smaller, shallower models (the student model). Since the purpose of knowledge distillation is to increase the similarity between the teacher model and the student model, we propose to introduce the concept of metric learning into knowledge distillation, making the student model closer to the teacher model using pairs or triplets of training samples. Metric learning develops methods for building models that increase the similarity of outputs for similar samples: it aims to reduce the distance between similar samples and increase the distance between dissimilar ones. This ability to reduce the differences between similar outputs can be used in knowledge distillation to reduce the differences between the outputs of the teacher model and the student model. Since the outputs of the teacher model for different objects are usually different, the student model needs to distinguish them. We expect that metric learning can clarify the differences between these outputs and thereby improve the performance of the student model. We have performed experiments to compare the proposed method with state-of-the-art knowledge distillation methods.
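To make the idea in the abstract concrete, here is a minimal NumPy sketch of one possible reading of a triplet loss for distillation. The abstract does not specify how triplets are formed, so the choices below are assumptions: the student output for a sample is the anchor, the teacher output for the same sample is the positive, and the teacher output for a different sample in the batch is the negative. The function name `triplet_distillation_loss`, the squared Euclidean distance, and the `margin` default are all hypothetical, not taken from the paper.

```python
import numpy as np

def triplet_distillation_loss(student_out, teacher_out, margin=1.0):
    """Hinge-style triplet loss between student and teacher outputs.

    Assumed triplet construction (not confirmed by the abstract):
      anchor   = student output for sample i
      positive = teacher output for the same sample i
      negative = teacher output for a different sample (i - 1, wrapping around)

    Both inputs have shape (batch, dim); the batch needs at least 2 samples,
    otherwise the negative coincides with the positive.
    """
    student_out = np.asarray(student_out, dtype=float)
    teacher_out = np.asarray(teacher_out, dtype=float)

    # Shift the teacher batch by one so each row is paired with another sample.
    negatives = np.roll(teacher_out, shift=1, axis=0)

    # Squared Euclidean distances, one value per sample.
    d_pos = np.sum((student_out - teacher_out) ** 2, axis=1)
    d_neg = np.sum((student_out - negatives) ** 2, axis=1)

    # Penalize triplets where the positive is not closer than the negative
    # by at least `margin`.
    return float(np.mean(np.maximum(0.0, d_pos - d_neg + margin)))
```

Under these assumptions the loss is zero when each student output sits much closer to its own teacher output than to the teacher output of another sample, and it grows when the student confuses the two. In practice such a term would likely be combined with a standard classification or distillation loss, which this sketch leaves out.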

Mixup of Feature Maps in a Hidden Layer for Training of Convolutional Neural Network

Jun 24, 2019

Abstract: The deep Convolutional Neural Network (CNN) has become very popular as a fundamental technique for image classification and object recognition. To improve recognition accuracy on more complex tasks, deeper networks have been introduced. However, the recognition accuracy of a trained deep CNN decreases drastically for samples drawn from outside the region covered by the training samples. To improve the generalization ability for such samples, Krizhevsky et al. proposed generating additional samples by transforming the existing samples, making the training set richer. This method is known as data augmentation. Hongyi Zhang et al. introduced a data augmentation method called mixup, which achieves state-of-the-art performance on various datasets. Mixup generates new samples by mixing two different training samples. Mixing of the two images is implemented with simple image morphing. In this paper, we propose to apply mixup to the feature maps in a hidden layer. To implement mixup in the hidden layer, we use the Siamese network or the triplet network architecture to mix feature maps. Our experimental comparison shows that mixup of the feature maps obtained from the first convolution layer is more effective than the original image mixup.

* 11 pages, 5 figures
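The mixing step described in the abstract can be sketched as follows. Standard mixup forms a convex combination of two samples and their labels with a weight drawn from a Beta distribution; the same function can be applied to feature maps instead of raw images. The layer below, `toy_first_layer`, is a hypothetical stand-in (a 1x1 convolution followed by ReLU) used only to produce feature maps for illustration. It is not the architecture from the paper, and the Siamese or triplet weight sharing is represented here simply by passing both inputs through the same weights.

```python
import numpy as np

def mixup(x_a, x_b, y_a, y_b, lam):
    """Convex combination of two samples and of their (one-hot) labels."""
    x_mix = lam * x_a + (1.0 - lam) * x_b
    y_mix = lam * y_a + (1.0 - lam) * y_b
    return x_mix, y_mix

def sample_lambda(alpha, rng):
    """Draw the mixing weight from Beta(alpha, alpha), as in standard mixup."""
    return rng.beta(alpha, alpha)

def toy_first_layer(images, weights):
    """Hypothetical first layer: 1x1 convolution plus ReLU.

    images:  (batch, in_channels, height, width)
    weights: (out_channels, in_channels)
    """
    features = np.einsum("oc,nchw->nohw", weights, images)
    return np.maximum(0.0, features)

rng = np.random.default_rng(0)
images_a = rng.random((4, 3, 8, 8))
images_b = rng.random((4, 3, 8, 8))
labels_a = np.eye(10)[rng.integers(0, 10, size=4)]
labels_b = np.eye(10)[rng.integers(0, 10, size=4)]
weights = rng.standard_normal((16, 3))
lam = sample_lambda(1.0, rng)

# Input-level mixup: mix the raw images, then run the first layer.
mixed_images, mixed_labels = mixup(images_a, images_b, labels_a, labels_b, lam)
features_from_mixed_input = toy_first_layer(mixed_images, weights)

# Hidden-layer mixup: run both inputs through the same (shared) layer,
# then mix the resulting feature maps.
mixed_features, mixed_labels_hidden = mixup(
    toy_first_layer(images_a, weights),
    toy_first_layer(images_b, weights),
    labels_a, labels_b, lam,
)
```

Because the layer includes a nonlinearity, mixing after the layer generally gives different feature maps than mixing the inputs first, which is why the two variants can behave differently during training. The mixed labels are the same in both cases.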
