Henrique Weber

Editable Indoor Lighting Estimation

Nov 09, 2022

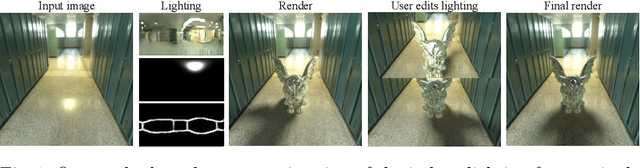

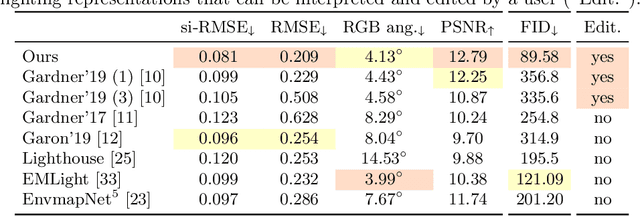

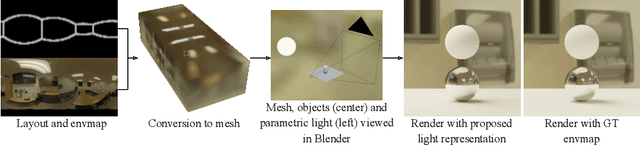

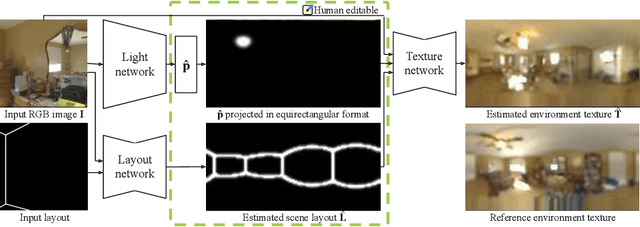

Abstract: We present a method for estimating lighting from a single perspective image of an indoor scene. Previous methods for predicting indoor illumination usually focus either on simple, parametric lighting that lacks realism, or on richer representations that are difficult or even impossible to understand or modify after prediction. We propose a pipeline that estimates a parametric light that is easy to edit and allows renderings with strong shadows, alongside a non-parametric texture carrying the high-frequency information necessary for realistic rendering of specular objects. Once estimated, the predictions obtained with our model are interpretable and can easily be modified by an artist/user with a few mouse clicks. Quantitative and qualitative results show that our approach makes indoor lighting estimation easier to handle by a casual user, while still producing competitive results.

Learning to Estimate Indoor Lighting from 3D Objects

Aug 13, 2018

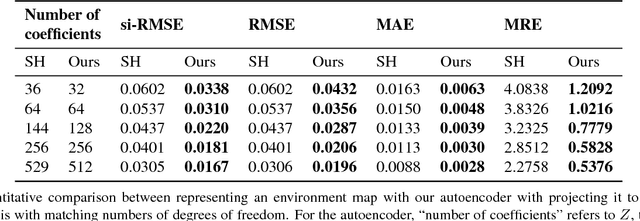

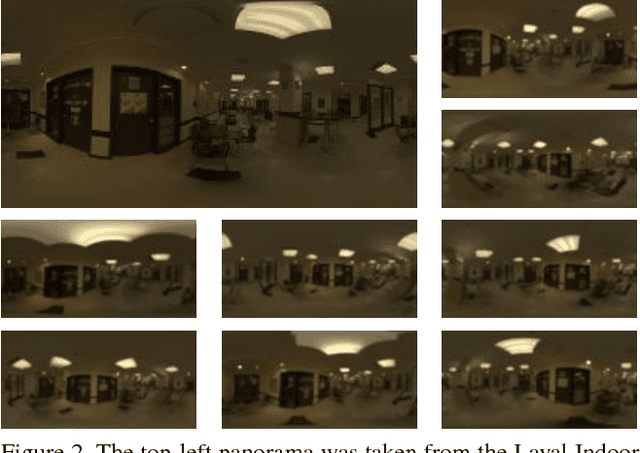

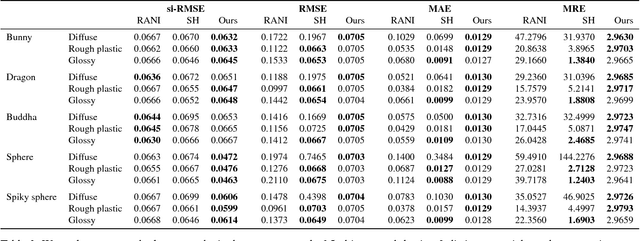

Abstract: In this work, we propose a step towards more accurate prediction of the environment light given a single picture of a known object. To achieve this, we developed a deep learning method that encodes the latent space of indoor lighting using few parameters and is trained on a database of environment maps. This latent space is then used to generate predictions of the light that are both more realistic and more accurate than those of previous methods. Our first contribution is a deep autoencoder capable of learning a feature space that compactly models lighting. Our second contribution is a convolutional neural network that predicts the light from a single image of a known object. To train these networks, our third contribution is a novel dataset that contains 21,000 HDR indoor environment maps. The results indicate that the predictor can generate plausible lighting estimations even from diffuse objects.
