Hemanth Sai Ram Reddy

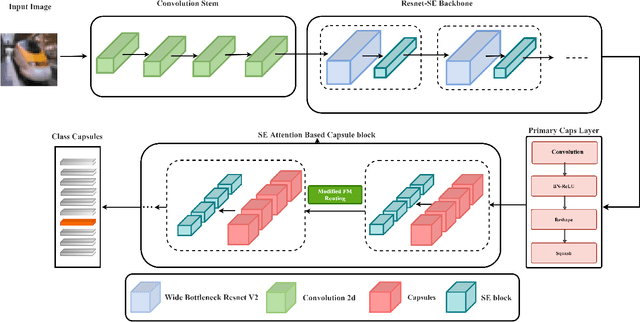

WideCaps: A Wide Attention based Capsule Network for Image Classification

Aug 14, 2021

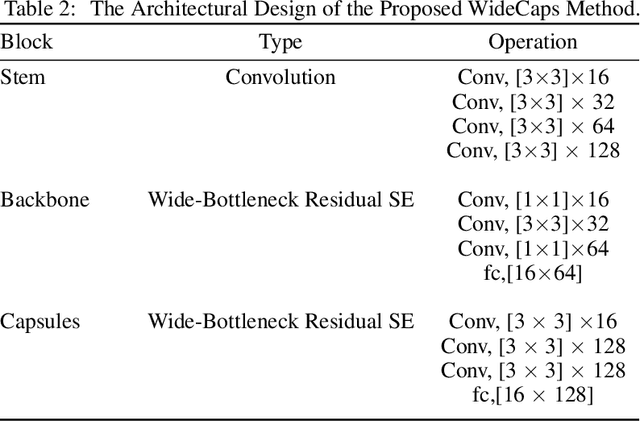

Abstract: The capsule network is a distinct and promising member of the neural network family that has drawn attention for its ability to maintain equivariance by preserving the spatial relationships among features. The capsule network has attained unprecedented success on image classification tasks with datasets such as MNIST and affNIST by encoding characteristic features into capsules and building a parse-tree structure. However, on datasets with complex foreground and background regions, such as CIFAR-10, its performance is sub-optimal because of its naive data routing policy and its limited ability to extract complex features. This paper proposes a new design strategy for capsule network architecture that deals efficiently with complex images. The proposed method incorporates wide bottleneck residual modules and Squeeze and Excitation attention blocks, supported by a modified FM routing algorithm, to address this problem. A wide bottleneck residual module extracts complex features, and the squeeze and excitation attention block that follows it applies channel-wise attention by suppressing trivial features. This setup models channel inter-dependencies at almost no computational cost, thereby enhancing the representation ability of capsules on complex images. We extensively evaluate the proposed model on three publicly available datasets, namely CIFAR-10, Fashion MNIST, and SVHN. The model outperforms the top-5 performance on CIFAR-10 and Fashion MNIST and achieves highly competitive performance on SVHN.
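To make the channel-wise attention idea concrete, the following is a minimal sketch of a generic squeeze and excitation block in plain Python. It is not the paper's implementation: the function names (`squeeze`, `excite`, `se_block`), the bias-free two-layer gate, and the weight shapes are assumptions chosen for illustration, and the abstract does not state the reduction ratio or where the block sits in the network.

```python
import math

def squeeze(feature_map):
    """Global average pooling: reduce each H x W channel to one scalar."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]

def matvec(weights, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]

def excite(z, w1, w2):
    """Two-layer gate: ReLU bottleneck, then a sigmoid giving one weight per channel.

    Assumed shapes: w1 is (C/r x C), w2 is (C x C/r). Biases are omitted for brevity.
    """
    hidden = [max(0.0, h) for h in matvec(w1, z)]
    return [1.0 / (1.0 + math.exp(-g)) for g in matvec(w2, hidden)]

def se_block(feature_map, w1, w2):
    """Rescale every channel by its gate, shrinking channels with low gate values."""
    gates = excite(squeeze(feature_map), w1, w2)
    return [
        [[gate * x for x in row] for row in channel]
        for channel, gate in zip(feature_map, gates)
    ]

# Toy input: 2 channels of size 2 x 2, with hand-picked (hypothetical) weights.
features = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[2.0, 2.0], [2.0, 2.0]],
]
w1 = [[1.0, 0.0]]        # bottleneck: 2 channels -> 1 hidden unit
w2 = [[0.0], [100.0]]    # expand: 1 hidden unit -> 2 channel gates
scaled = se_block(features, w1, w2)
```

In this toy case the first channel receives a gate of 0.5 and is halved, while the second channel's gate saturates near 1 and the channel passes through almost unchanged. The extra cost is one pooled vector and two small matrix-vector products per block, which is consistent with the abstract's description of the attention as nearly free.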
