Heet Shah

Multilingual and Multimodal Abuse Detection

Apr 03, 2022

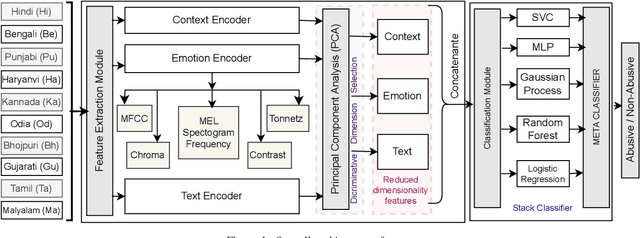

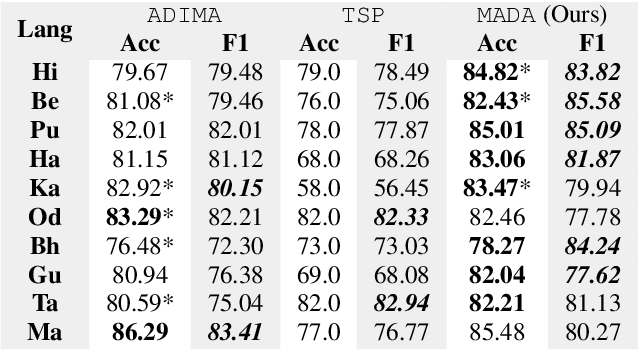

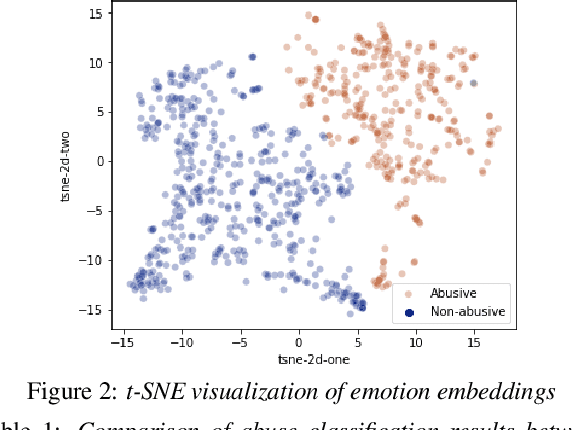

Abstract:The presence of abusive content on social media platforms is undesirable as it severely impedes healthy and safe social media interactions. While automatic abuse detection has been widely explored in textual domain, audio abuse detection still remains unexplored. In this paper, we attempt abuse detection in conversational audio from a multimodal perspective in a multilingual social media setting. Our key hypothesis is that along with the modelling of audio, incorporating discriminative information from other modalities can be highly beneficial for this task. Our proposed method, MADA, explicitly focuses on two modalities other than the audio itself, namely, the underlying emotions expressed in the abusive audio and the semantic information encapsulated in the corresponding textual form. Observations prove that MADA demonstrates gains over audio-only approaches on the ADIMA dataset. We test the proposed approach on 10 different languages and observe consistent gains in the range 0.6%-5.2% by leveraging multiple modalities. We also perform extensive ablation experiments for studying the contributions of every modality and observe the best results while leveraging all the modalities together. Additionally, we perform experiments to empirically confirm that there is a strong correlation between underlying emotions and abusive behaviour.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge