He Cheng

BadSAD: Clean-Label Backdoor Attacks against Deep Semi-Supervised Anomaly Detection

Dec 17, 2024

Abstract: Image anomaly detection (IAD) is essential in applications such as industrial inspection, medical imaging, and security. Despite the progress achieved with deep learning models like Deep Semi-Supervised Anomaly Detection (DeepSAD), these models remain susceptible to backdoor attacks, presenting significant security challenges. In this paper, we introduce BadSAD, a novel backdoor attack framework specifically designed to target DeepSAD models. Our approach involves two key phases: trigger injection, where subtle triggers are embedded into normal images, and latent space manipulation, which positions and clusters the poisoned images near normal images to make the triggers appear benign. Extensive experiments on benchmark datasets validate the effectiveness of our attack strategy, highlighting the severe risks that backdoor attacks pose to deep learning-based anomaly detection systems.
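To make the "latent space manipulation" idea concrete, the sketch below shows the general shape of a DeepSAD-style per-sample objective: embeddings of unlabeled and labeled-normal samples are pulled toward a center `c`, while labeled anomalies are pushed away. This is an illustrative assumption about the victim model's training loss, not the BadSAD attack loss itself; the function names, the `eta` weight, and the `eps` stabilizer are hypothetical choices made for this example.

```python
def sq_dist(z, c):
    """Squared Euclidean distance between an embedding z and the center c."""
    return sum((zi - ci) ** 2 for zi, ci in zip(z, c))


def deepsad_style_loss(z, c, label, eta=1.0, eps=1e-6):
    """Per-sample loss in the style of DeepSAD (assumed form, for illustration).

    label ==  0: unlabeled sample, loss is the squared distance to c
    label == +1: labeled normal, loss is eta * (distance + eps)
    label == -1: labeled anomaly, loss is eta / (distance + eps)
    """
    d = sq_dist(z, c)
    if label == 0:
        return d
    return eta * (d + eps) ** label


center = [0.0, 0.0]
# An unlabeled sample is penalized for sitting far from the center.
print(deepsad_style_loss([1.0, 0.0], center, label=0))
# A labeled anomaly is penalized for sitting close to the center.
print(deepsad_style_loss([0.1, 0.0], center, label=-1))
```

Under an objective of this shape, any poisoned image that the training procedure treats as normal is drawn toward `c` along with the clean normal data, which is consistent with the abstract's description of clustering poisoned images near normal ones.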

Backdoor Attack against One-Class Sequential Anomaly Detection Models

Feb 15, 2024

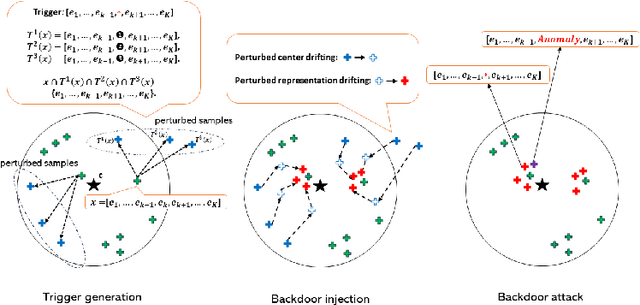

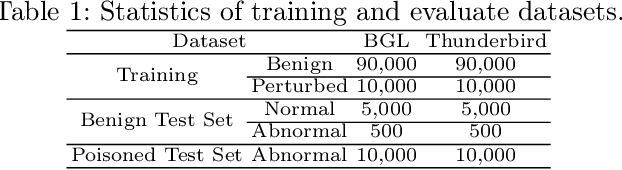

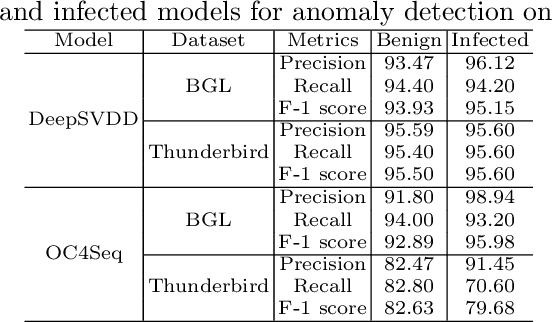

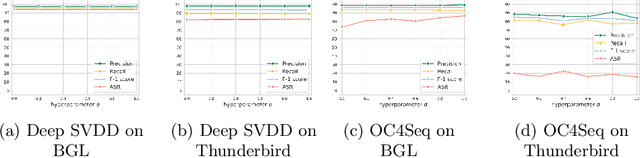

Abstract: Deep anomaly detection on sequential data has garnered significant attention due to its wide range of applications. However, deep learning-based models face a critical security threat: their vulnerability to backdoor attacks. In this paper, we explore compromising deep sequential anomaly detection models by proposing a novel backdoor attack strategy. The attack comprises two primary steps: trigger generation and backdoor injection. Trigger generation derives imperceptible triggers by crafting perturbed samples from benign normal data such that the perturbed samples remain normal. Backdoor injection then injects the triggers so that the model is compromised only for samples that contain them. The experimental results demonstrate the effectiveness of our proposed attack strategy by injecting backdoors into two well-established one-class anomaly detection models.

Fine-grained Anomaly Detection in Sequential Data via Counterfactual Explanations

Oct 09, 2022

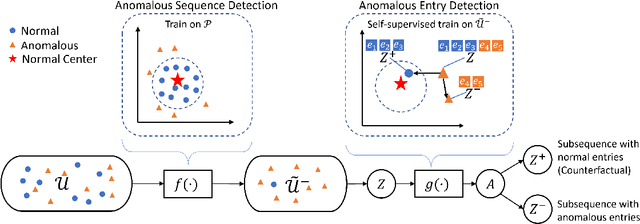

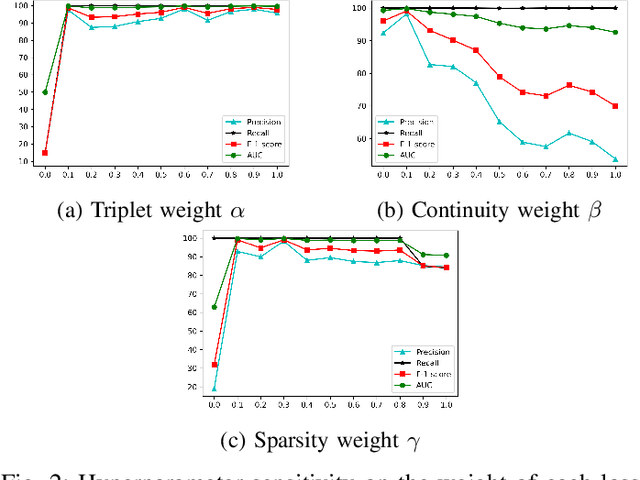

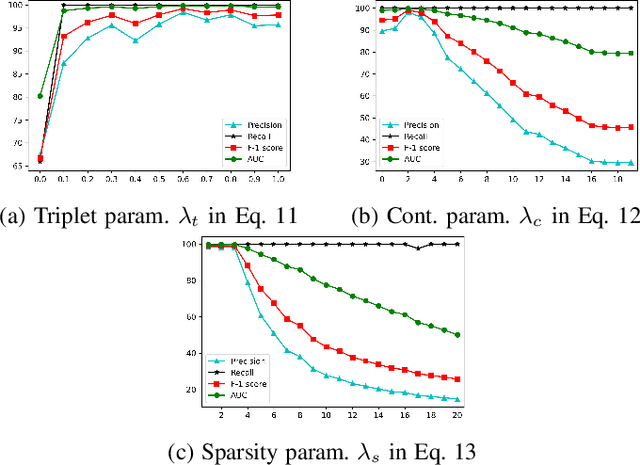

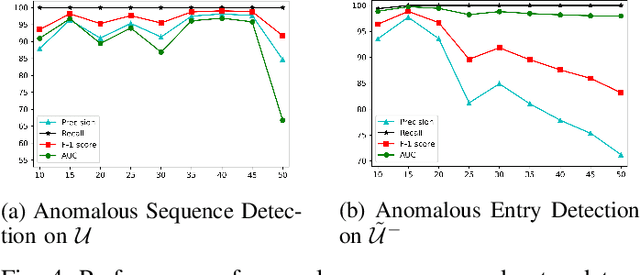

Abstract: Anomaly detection in sequential data has been studied for a long time because of its potential in various applications, such as detecting abnormal system behaviors from log data. Although many approaches achieve good performance on anomalous sequence detection, identifying the anomalous entries within a sequence remains challenging due to a lack of information at the entry level. In this work, we propose a novel framework called CFDet for fine-grained anomalous entry detection. CFDet leverages the idea of interpretable machine learning. Given a sequence that is detected as anomalous, we can treat anomalous entry detection as an interpretable machine learning task, because identifying the anomalous entries in the sequence amounts to providing an interpretation of the detection result. We use the deep support vector data description (Deep SVDD) approach to detect anomalous sequences and propose a novel counterfactual interpretation-based approach to identify anomalous entries in the sequences. Experimental results on three datasets show that CFDet can correctly detect anomalous entries.
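The two ingredients named in the abstract, a Deep SVDD-style sequence score and a counterfactual question about individual entries, can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the mean-pooled `embed_sequence` stands in for a trained encoder, the embedding table and center are made up, and the deletion-based counterfactual is a simple substitute, not the CFDet procedure.

```python
def embed_sequence(seq, table):
    """Toy encoder (hypothetical): mean-pool fixed per-entry embeddings."""
    dim = len(next(iter(table.values())))
    total = [0.0] * dim
    for entry in seq:
        for i, value in enumerate(table[entry]):
            total[i] += value
    return [t / len(seq) for t in total]


def anomaly_score(seq, table, center):
    """Deep SVDD-style score: squared distance of the embedding to the center."""
    z = embed_sequence(seq, table)
    return sum((a - b) ** 2 for a, b in zip(z, center))


def most_suspicious_entry(seq, table, center):
    """Return the index whose deletion lowers the anomaly score the most.

    Each deletion is a simple counterfactual: "what would the score be
    if this entry had not occurred?" Returns None if no deletion helps.
    """
    base = anomaly_score(seq, table, center)
    best_idx, best_drop = None, 0.0
    for i in range(len(seq)):
        counterfactual = seq[:i] + seq[i + 1:]
        if not counterfactual:
            continue
        drop = base - anomaly_score(counterfactual, table, center)
        if drop > best_drop:
            best_idx, best_drop = i, drop
    return best_idx


# Made-up log-event embeddings; "rm" lies far from the normal center.
table = {"open": [0.0, 0.0], "read": [0.1, 0.0],
         "close": [0.0, 0.1], "rm": [5.0, 5.0]}
center = [0.0, 0.0]
print(most_suspicious_entry(["open", "read", "rm", "close"], table, center))
```

In this toy setup, removing the `rm` entry moves the pooled embedding back toward the center, so that entry is flagged; a learned encoder and a more careful counterfactual search would replace both simplifications in practice.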
