Hassan Takabi

Effectiveness of Adversarial Examples and Defenses for Malware Classification

Sep 10, 2019

Abstract: Artificial neural networks have been successfully used for many different classification tasks, including malware detection and distinguishing between malicious and non-malicious programs. Although artificial neural networks perform very well on these tasks, they are also vulnerable to adversarial examples. An adversarial example is a sample that has had minor modifications made to it so that the neural network misclassifies it. Many techniques have been proposed, both for crafting adversarial examples and for hardening neural networks against them. Most previous work has been done in the image domain. Some of the attacks have been adapted to work in the malware domain, which typically deals with binary feature vectors. To better understand the space of adversarial examples in malware classification, we study different approaches to crafting adversarial examples and defense techniques in the malware domain and compare their effectiveness on multiple datasets.
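To make the idea of an adversarial example on binary feature vectors concrete, here is a minimal sketch. It is not the method evaluated in the paper: it assumes a hypothetical linear classifier (weights `w`, bias `b`) as a stand-in for a neural network, and it assumes the attacker may only add features (flip 0 to 1), a restriction often used so that the program's functionality is preserved. The function name `craft_adversarial` and all values are illustrative.

```python
import numpy as np

def craft_adversarial(x, w, b, max_flips=10):
    """Greedily flip 0 -> 1 bits until a linear model scores the sample as benign.

    Assumptions (illustrative, not from the paper): a score > 0 means
    "malicious", and only feature additions are allowed.
    """
    x_adv = x.copy()
    for _ in range(max_flips):
        if x_adv @ w + b <= 0:  # already classified as benign
            break
        # Candidate bits: currently absent features with a negative weight.
        candidates = np.where((x_adv == 0) & (w < 0))[0]
        if candidates.size == 0:
            break  # no remaining addition can lower the score
        # Add the feature that lowers the score the most.
        j = candidates[np.argmin(w[candidates])]
        x_adv[j] = 1
    return x_adv

# Hypothetical model and sample.
w = np.array([2.0, 1.5, -1.0, -2.5, 0.5, -0.8])
b = -0.6
x = np.array([1, 1, 0, 0, 1, 0])

x_adv = craft_adversarial(x, w, b)
flips = int(np.sum(x_adv != x))
```

With these toy values, the original sample scores above zero and the modified sample scores below zero after a small number of added features. Attacks on real neural networks typically use gradient information rather than fixed weights to choose which bits to flip.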

CryptoDL: Deep Neural Networks over Encrypted Data

Nov 14, 2017

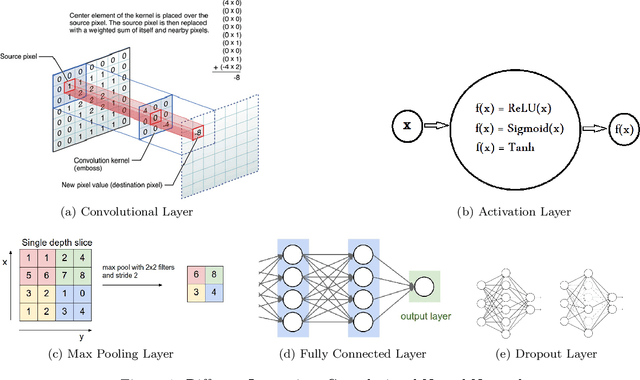

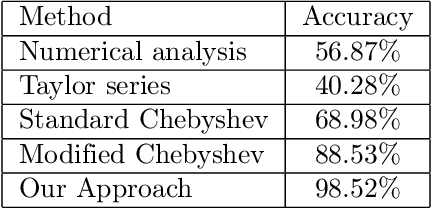

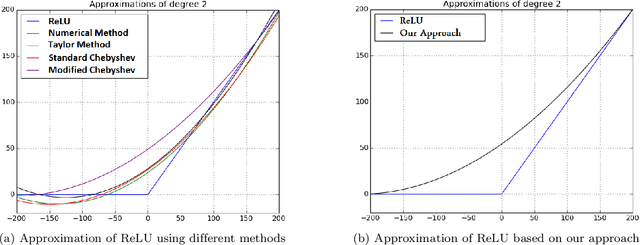

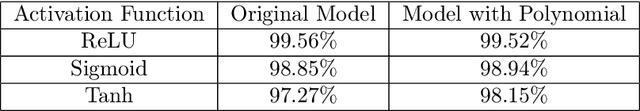

Abstract: Machine learning algorithms based on deep neural networks have achieved remarkable results and are being extensively used in different domains. However, machine learning algorithms require access to raw data, which is often privacy-sensitive. To address this issue, we develop new techniques for running deep neural networks over encrypted data. In this paper, we adapt deep neural networks to the practical limitations of current homomorphic encryption schemes. More specifically, we focus on classification with the well-known convolutional neural networks (CNNs). First, we design methods for approximating the activation functions commonly used in CNNs (i.e., ReLU, Sigmoid, and Tanh) with low-degree polynomials, which is essential for efficient homomorphic encryption schemes. Then, we train convolutional neural networks with the approximation polynomials instead of the original activation functions and analyze the performance of the models. Finally, we implement convolutional neural networks over encrypted data and measure the performance of the models. Our experimental results validate the soundness of our approach with several convolutional neural networks with varying numbers of layers and structures. When applied to the MNIST optical character recognition task, our approach achieves 99.52% accuracy, which significantly outperforms the state-of-the-art solutions and is very close to the accuracy of the best non-private version, 99.77%. It can also make close to 164,000 predictions per hour. We also applied our approach to CIFAR-10, which is much more complex than MNIST, and achieved 91.5% accuracy with approximation polynomials used as activation functions. These results show that CryptoDL provides efficient, accurate, and scalable privacy-preserving predictions.
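The polynomial-approximation step can be illustrated with a short sketch. This is not the approximation method from the paper: it assumes a plain least-squares fit over a fixed interval, and the degree (3), the interval ([-4, 4]), and the helper name `fit_poly_activation` are all illustrative choices. The point is only that a low-degree polynomial uses additions and multiplications alone, which are the operations homomorphic encryption schemes support.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def fit_poly_activation(fn, degree=3, lo=-4.0, hi=4.0, n=2001):
    """Least-squares polynomial fit of an activation function on [lo, hi].

    Illustrative only: the interval and degree are assumptions, and the
    fit is only meaningful for inputs that stay inside [lo, hi].
    """
    xs = np.linspace(lo, hi, n)
    coeffs = np.polyfit(xs, fn(xs), degree)
    return np.poly1d(coeffs)

# Fit a degree-3 polynomial stand-in for the sigmoid.
poly_sigmoid = fit_poly_activation(sigmoid, degree=3)

# Measure the worst-case gap on the fitting interval.
grid = np.linspace(-4.0, 4.0, 401)
max_err = float(np.max(np.abs(poly_sigmoid(grid) - sigmoid(grid))))
```

Outside the fitting interval the polynomial diverges from the true activation, so a network trained with such a replacement needs its pre-activation values to stay within the chosen range.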
