Hasan AlMarzouqi

Cascaded Deep Hybrid Models for Multistep Household Energy Consumption Forecasting

Jul 06, 2022

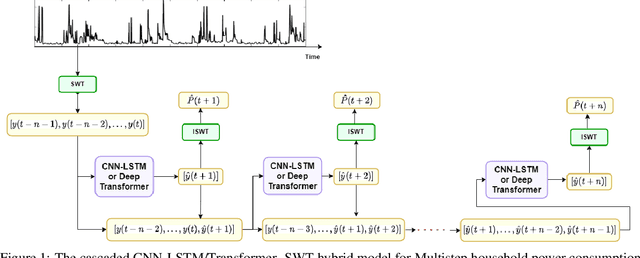

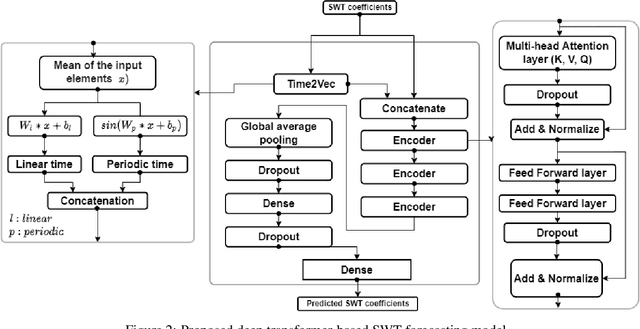

Abstract: Sustainability requires increased energy efficiency with minimal waste. Future power systems should thus provide high levels of flexibility in controlling energy consumption. Precise projections of future energy demand/load, both at the aggregate level and at the level of individual sites, are of great importance for decision makers and professionals in the energy industry. Forecasting energy loads has become more advantageous for energy providers and customers, allowing them to establish an efficient production strategy to satisfy demand. This study introduces two hybrid cascaded models for forecasting multistep household power consumption at different resolutions. The first model integrates the Stationary Wavelet Transform (SWT), as an efficient signal preprocessing technique, with Convolutional Neural Networks and Long Short-Term Memory (LSTM). The second hybrid model combines SWT with a self-attention-based neural network architecture known as the transformer. The major constraint of using time-frequency analysis methods such as SWT in multistep energy forecasting problems is that they require sequential signals, making signal reconstruction problematic in multistep forecasting applications. The cascaded models can efficiently address this problem by using recursive outputs. Experimental results show that the proposed hybrid models achieve superior prediction performance compared to existing multistep power consumption prediction methods. The results will pave the way for more accurate and reliable forecasting of household power consumption.
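The idea of feeding recursive outputs back into a wavelet-based forecaster can be illustrated with a minimal sketch. It is not the authors' implementation: the one-level Haar transform is hand-rolled with periodic wrap-around, `naive_band_model` is a hypothetical placeholder for the learned CNN-LSTM or transformer predictor, and `window` is an assumed parameter.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_swt(x):
    """One-level undecimated Haar transform with periodic wrap-around."""
    n = len(x)
    approx = [(x[i] + x[(i + 1) % n]) / SQRT2 for i in range(n)]
    detail = [(x[i] - x[(i + 1) % n]) / SQRT2 for i in range(n)]
    return approx, detail


def haar_iswt(approx, detail):
    """Invert haar_swt: approx[i] + detail[i] equals sqrt(2) * x[i]."""
    return [(a + d) / SQRT2 for a, d in zip(approx, detail)]


def naive_band_model(band, k=3):
    """Hypothetical stand-in for a learned one-step predictor:
    the mean of the last k coefficients of a sub-band."""
    tail = band[-k:]
    return sum(tail) / len(tail)


def recursive_forecast(history, horizon, window=8):
    """Forecast `horizon` steps, appending each prediction to the series
    so the next decomposition sees a contiguous (sequential) signal."""
    series = list(history)
    preds = []
    for _ in range(horizon):
        recent = series[-window:]
        approx, detail = haar_swt(recent)
        next_a = naive_band_model(approx)
        next_d = naive_band_model(detail)
        # Recombine the sub-band forecasts with the same rule as haar_iswt.
        y = (next_a + next_d) / SQRT2
        preds.append(y)
        series.append(y)  # recursive output becomes input for the next step
    return preds
```

Because every prediction is appended before the next decomposition, the transform always operates on an unbroken sequence, which is the property the abstract says multistep wavelet forecasting otherwise lacks.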

Semantic Labeling of High Resolution Images Using EfficientUNets and Transformers

Jun 22, 2022

Abstract: Semantic segmentation necessitates approaches that learn high-level characteristics while dealing with enormous amounts of data. Convolutional neural networks (CNNs) can learn unique and adaptive features to achieve this aim. However, due to the large size and high spatial resolution of remote sensing images, these networks cannot analyze an entire scene efficiently. Recently, deep transformers have proven their capability to capture global interactions between different objects in the image. In this paper, we propose a new segmentation model that combines convolutional neural networks with transformers, and show that this mixture of local and global feature extraction techniques provides significant advantages in remote sensing segmentation. In addition, the proposed model includes two fusion layers that are designed to efficiently represent the multi-modal inputs and outputs of the network. The input fusion layer extracts feature maps summarizing the relationship between image content and elevation maps (DSM). The output fusion layer uses a novel multi-task segmentation strategy where class labels are identified using class-specific feature extraction layers and loss functions. Finally, a fast-marching method is used to convert all unidentified class labels to their closest known neighbors. Our results demonstrate that the proposed methodology improves segmentation accuracy compared to state-of-the-art techniques.
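The final step, assigning unidentified pixels the label of their closest known neighbor, can be sketched as follows. This is an illustration only: it swaps the fast-marching method for a simpler multi-source breadth-first search on a 4-connected grid, and the `UNKNOWN` sentinel and the function name are assumptions rather than anything taken from the paper.

```python
from collections import deque

UNKNOWN = -1  # assumed sentinel for pixels without a class label


def fill_unknown_labels(grid):
    """Return a copy of `grid` in which each UNKNOWN cell takes the label
    of the nearest labeled cell, measured in 4-connected grid steps."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    # Seed the search with every labeled cell at once (multi-source BFS).
    queue = deque(
        (r, c)
        for r in range(rows)
        for c in range(cols)
        if out[r][c] != UNKNOWN
    )
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and out[nr][nc] == UNKNOWN:
                out[nr][nc] = out[r][c]  # propagate the label outward
                queue.append((nr, nc))
    return out
```

Breadth-first search treats every grid step as unit cost, so it only approximates the continuous distance front that fast marching computes; ties between equally distant labels go to whichever seed is dequeued first.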
