Hartej Soin

Generative flow induced neural architecture search: Towards discovering optimal architecture in wavelet neural operator

May 11, 2024

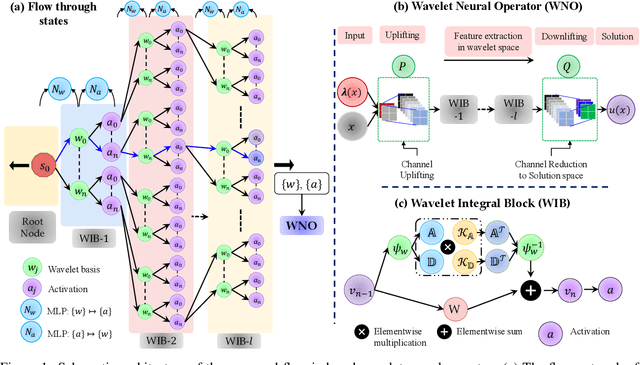

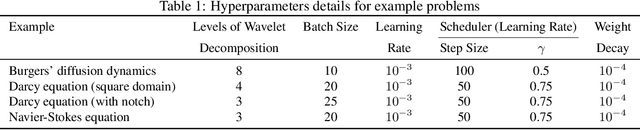

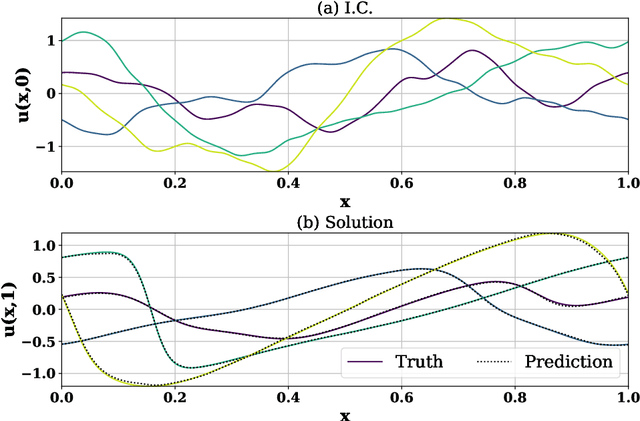

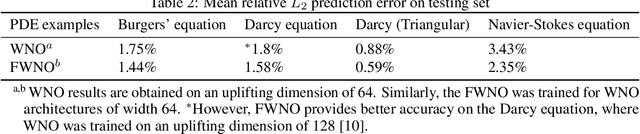

Abstract: We propose a generative flow-induced neural architecture search algorithm. The proposed approach devises simple feed-forward neural networks that learn stochastic policies for generating sequences of architecture hyperparameters, such that the generated states are sampled in proportion to the reward from the terminal state. We demonstrate the efficacy of the proposed search algorithm on the wavelet neural operator (WNO), where we learn a policy that generates a sequence of hyperparameters, such as the wavelet basis and the activation operators for the wavelet integral blocks. The trajectory of the generated wavelet basis and activation sequence is cast as a flow, and the policy is learned by minimizing the flow violation across the states in the trajectory while maximizing the reward from the terminal state. At the terminal state, we train the WNO simultaneously to guide the search. We propose to use the exponential of the negative WNO loss on the validation dataset as the reward function. Whereas grid search-based neural architecture generation algorithms evaluate every combination, the proposed framework generates the most probable sequence based on the positive reward from the terminal state, thereby reducing exploration time. Compared to reinforcement learning schemes, where complete episodic training is required to obtain the reward, the proposed algorithm generates the hyperparameter trajectory sequentially. Through four fluid mechanics-oriented problems, we illustrate that the learned policies can sample the best-performing architecture of the neural operator, thereby improving on the performance of the vanilla wavelet neural operator.
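The reward described in the abstract, the exponential of the negative validation loss, and the idea of sampling in proportion to that reward can be illustrated with a minimal sketch. This is not the paper's learned policy: the candidate (wavelet basis, activation) pairs, the loss values, and the function names below are hypothetical placeholders, and the target distribution is computed directly rather than learned by a flow-based policy.

```python
import math
import random


def reward_from_validation_loss(val_loss: float) -> float:
    """Reward as stated in the abstract: exp(-validation loss)."""
    return math.exp(-val_loss)


# Hypothetical candidate architectures: (wavelet basis, activation).
candidates = [("db4", "gelu"), ("db6", "relu"), ("sym4", "tanh")]

# Made-up validation losses, one per candidate (illustration only).
val_losses = [0.10, 0.50, 2.00]

# Lower loss gives a reward closer to 1; the reward is always positive.
rewards = [reward_from_validation_loss(loss) for loss in val_losses]

# Target distribution: each candidate's probability is proportional
# to its reward. A trained policy would aim to match this distribution.
total = sum(rewards)
target_probs = [r / total for r in rewards]

# Draw one architecture in proportion to its reward.
rng = random.Random(0)
sample = rng.choices(candidates, weights=target_probs, k=1)[0]

for cand, r, p in zip(candidates, rewards, target_probs):
    print(f"{cand}: reward={r:.3f}, target probability={p:.3f}")
print("sampled:", sample)
```

Because the reward is strictly positive for any finite loss, every candidate keeps a nonzero sampling probability, while low-loss architectures are drawn most often.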
