Haoyuan Xu

Integrated Drill Boom Hole-Seeking Control via Reinforcement Learning

Dec 04, 2023

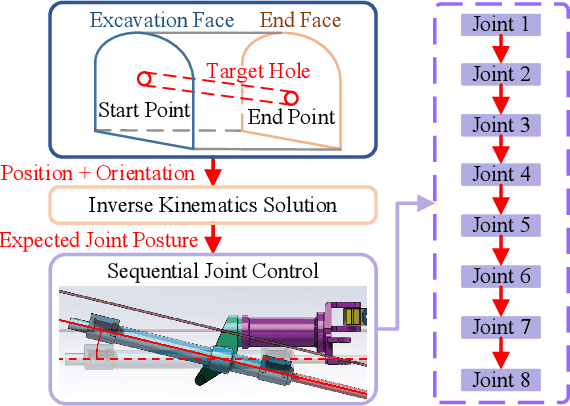

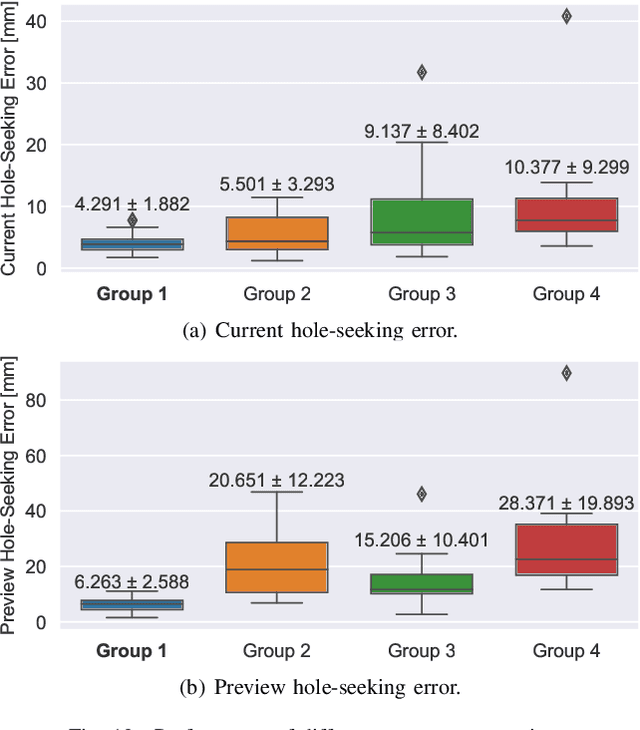

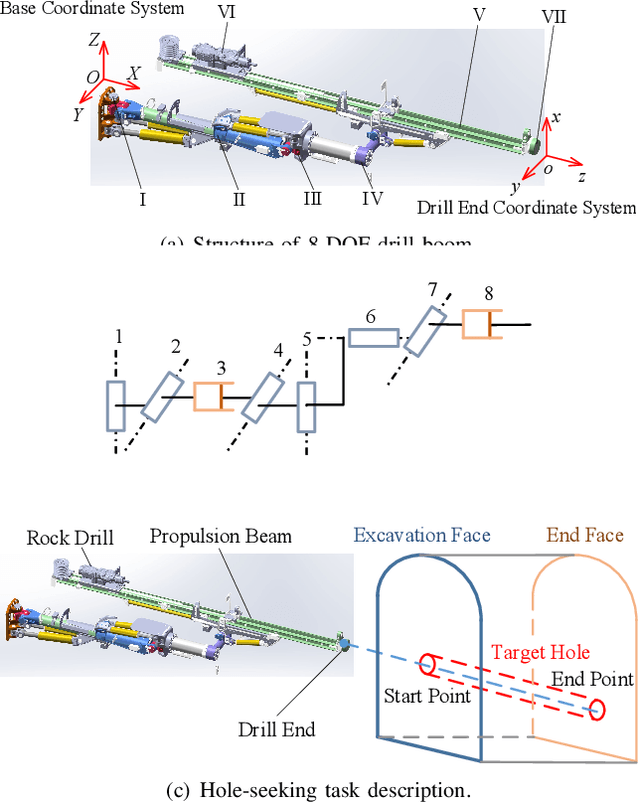

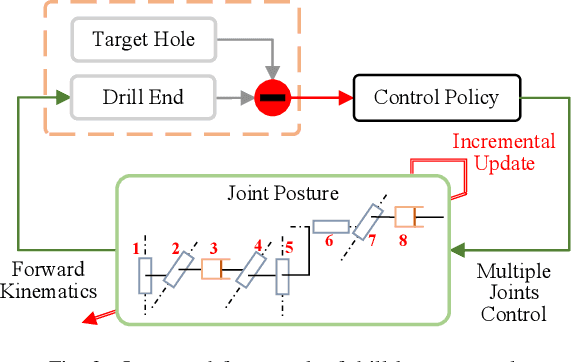

Abstract: Intelligent drill boom hole-seeking is a promising technology for improving drilling efficiency, mitigating safety hazards, and reducing the workload of human operators. Most existing intelligent drill boom control methods rely on a hierarchical control framework based on inverse kinematics. However, these methods are generally slow, both because inverse kinematics is computationally expensive and because the joints are actuated sequentially rather than together. To address these challenges, this study proposes an integrated drill boom control method based on Reinforcement Learning (RL). We develop an integrated control framework in which a parameterized policy directly generates control inputs for all joints at each time step from joint posture and target hole information. Because the hole-seeking task is formulated as a Markov decision process, mainstream RL algorithms can be applied directly to learn a hole-seeking policy, which removes the need for inverse kinematics solutions and promotes cooperative multi-joint control. To improve accuracy throughout the entire drilling process, we devise a state representation that combines Denavit-Hartenberg joint information with preview hole-seeking discrepancy data. Simulation results show that the proposed method significantly outperforms traditional methods in both hole-seeking accuracy and time efficiency.
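The integrated control loop described in the abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the boom is reduced to a hypothetical planar three-link arm, the "discrepancy" part of the state is simplified to the current tip-to-target offset, the policy is a plain linear map with `tanh` squashing, and the reward is the negative tip-to-target distance. The names `LINK_LENGTHS`, `DT`, `tip_position`, `make_state`, `policy`, `step`, and `rollout` are all hypothetical.

```python
import math

# Hypothetical planar boom: three revolute joints with these link lengths (m).
LINK_LENGTHS = (1.0, 0.8, 0.5)
DT = 0.05  # assumed control period in seconds


def tip_position(joints):
    """Forward kinematics of the toy planar boom (no inverse kinematics needed)."""
    x = y = angle = 0.0
    for q, length in zip(joints, LINK_LENGTHS):
        angle += q
        x += length * math.cos(angle)
        y += length * math.sin(angle)
    return x, y


def make_state(joints, target):
    """State = joint angles plus the tip-to-target offset (assumed simplification)."""
    tx, ty = tip_position(joints)
    return list(joints) + [target[0] - tx, target[1] - ty]


def policy(state, weights):
    """Linear policy: one bounded velocity command per joint, all emitted together."""
    return [math.tanh(sum(w * s for w, s in zip(row, state))) for row in weights]


def step(joints, action, target):
    """Apply all joint commands simultaneously; reward is negative distance to the hole."""
    next_joints = [q + DT * a for q, a in zip(joints, action)]
    tx, ty = tip_position(next_joints)
    reward = -math.hypot(target[0] - tx, target[1] - ty)
    return next_joints, reward


def rollout(weights, target, steps=50):
    """Run one episode from the zero posture and return final joints and return."""
    joints = [0.0, 0.0, 0.0]
    total = 0.0
    for _ in range(steps):
        state = make_state(joints, target)
        action = policy(state, weights)
        joints, reward = step(joints, action, target)
        total += reward
    return joints, total
```

An RL algorithm would adjust `weights` (or the parameters of a neural network in their place) to maximize the return from `rollout`. The point of the sketch is only the structure: the state carries posture and target information, and a single policy call produces commands for every joint at each time step.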
