Hannu Sillanpää

Cost Sensitive Optimization of Deepfake Detector

Dec 08, 2020

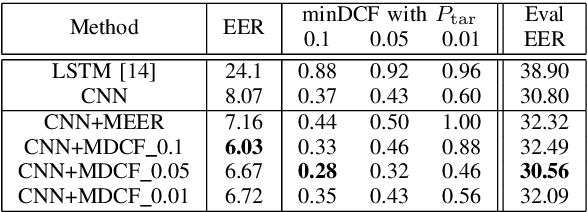

Abstract: Manipulated videos have existed since the invention of cinema, but generating manipulated videos that can fool the viewer has long been a time-consuming endeavor. With the dramatic improvements in deep generative modeling, generating believable fake videos has become a reality. In the present work, we concentrate on so-called deepfake videos, where the source face is swapped with the target's. We argue that the deepfake detection task should be viewed as a screening task, where the user, such as a video streaming platform, screens a large number of videos daily. Because only a small fraction of the uploaded videos are deepfakes, detection performance needs to be measured in a cost-sensitive way. Preferably, the model parameters should also be estimated in the same way. This is precisely what we propose here.
