Hangda Liu

A Nonlinear Hash-based Optimization Method for SpMV on GPUs

Apr 11, 2025

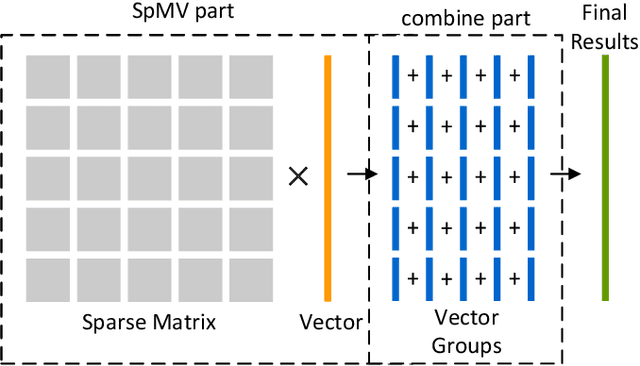

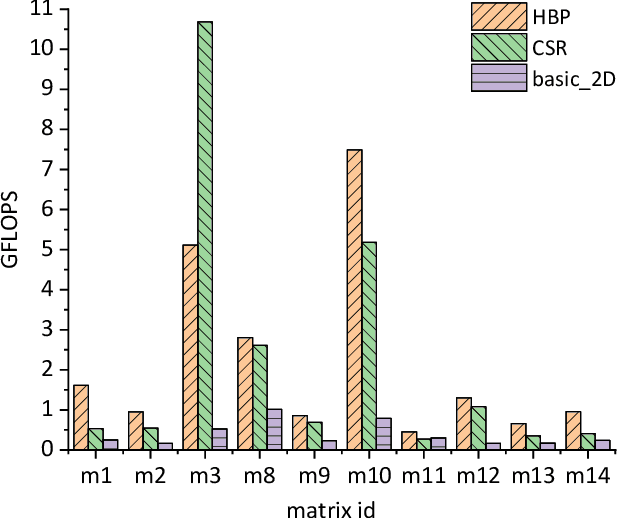

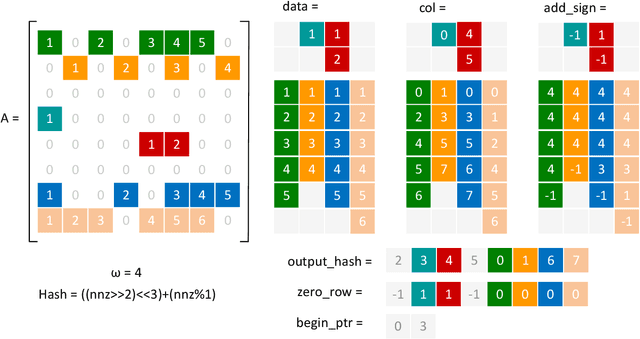

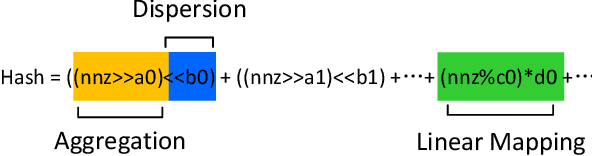

Abstract: Sparse matrix-vector multiplication (SpMV) is a fundamental operation with a wide range of applications in scientific computing and artificial intelligence. However, the large scale and sparsity of sparse matrices often make it a performance bottleneck. In this paper, we highlight the effectiveness of hash-based techniques for optimizing sparse matrix reordering and introduce the Hash-based Partition (HBP) format, a lightweight SpMV approach. HBP retains the performance benefits of the 2D-partitioning method while leveraging the ability of a hash transformation to group similar elements, thereby accelerating the pre-processing phase of sparse matrix reordering. Additionally, we achieve parallel load balancing across matrix blocks through a competitive method. Our experiments, conducted on both the Nvidia Jetson AGX Orin and the Nvidia RTX 4090, show that in the pre-processing step our method achieves an average speedup of 3.53× over the sorting approach and 3.67× over the dynamic programming method used in Regu2D. Furthermore, for SpMV, our method achieves maximum speedups of 3.32× on Orin and 3.01× on the RTX 4090 over the CSR format on sparse matrices from the University of Florida Sparse Matrix Collection.
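To make the two ingredients named in the abstract concrete, here is a minimal sketch in plain Python: a CSR SpMV (the baseline format the results are compared against) and a hash-based row grouping that places rows with the same column-block signature next to each other. This is not the HBP format, its 2D partitioning, or its competitive load-balancing scheme; the grouping key and the `block_width` parameter are hypothetical choices made only for illustration.

```python
from collections import defaultdict


def csr_spmv(indptr, indices, data, x):
    """Compute y = A @ x for A stored in CSR form (indptr, indices, data)."""
    n_rows = len(indptr) - 1
    y = [0.0] * n_rows
    for row in range(n_rows):
        acc = 0.0
        # Nonzeros of this row live in data[indptr[row]:indptr[row + 1]].
        for k in range(indptr[row], indptr[row + 1]):
            acc += data[k] * x[indices[k]]
        y[row] = acc
    return y


def hash_row_order(indptr, indices, block_width=4):
    """Hypothetical reordering: bucket rows by a hashable signature of the
    column blocks they touch, then concatenate the buckets.

    Rows with identical signatures end up adjacent, which is the kind of
    grouping a hash-based reordering aims for. Buckets appear in order of
    first occurrence (dicts preserve insertion order in Python 3.7+).
    """
    buckets = defaultdict(list)
    for row in range(len(indptr) - 1):
        signature = frozenset(
            indices[k] // block_width for k in range(indptr[row], indptr[row + 1])
        )
        buckets[signature].append(row)  # dict lookup hashes the signature
    return [row for rows in buckets.values() for row in rows]


# Usage: a 4x4 matrix whose rows 0/2 and 1/3 share sparsity patterns.
indptr, indices, data = [0, 2, 3, 5, 6], [0, 2, 1, 0, 2, 1], [1, 2, 3, 4, 5, 6]
y = csr_spmv(indptr, indices, data, [1, 1, 1, 1])          # [3.0, 3.0, 9.0, 6.0]
order = hash_row_order(indptr, indices, block_width=2)     # [0, 2, 1, 3]
```

Grouping by an exact signature is a single linear pass with dictionary lookups, which suggests why hashing can be cheaper than a sort-based or dynamic-programming reordering; the actual HBP grouping criterion may differ.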

Efficient Continual Learning through Frequency Decomposition and Integration

Mar 28, 2025

Abstract: Continual learning (CL) aims to learn new tasks while retaining past knowledge, addressing the problem of forgetting during task adaptation. Rehearsal-based methods, which replay previous samples, effectively mitigate forgetting. However, research on improving the efficiency of these methods, especially in resource-constrained environments, remains limited, which hinders their use in real-world systems with dynamic data streams. The human perceptual system processes visual scenes through complementary frequency channels: low-frequency signals capture holistic cues, while high-frequency components convey the structural details vital for fine-grained discrimination. Inspired by this, we propose the Frequency Decomposition and Integration Network (FDINet), a novel framework that decomposes and integrates information across frequencies. FDINet uses two lightweight networks to process the low- and high-frequency components of images independently. When combined with rehearsal-based methods, this frequency-aware design enhances cross-task generalization through low-frequency information, preserves class-specific details through high-frequency information, and enables efficient training thanks to its lightweight architecture. Experiments show that FDINet reduces backbone parameters by 78%, improves accuracy by up to 7.49% over state-of-the-art (SOTA) methods, and reduces peak memory usage by up to 80%. Additionally, on edge devices, FDINet accelerates training by up to 5×.
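The abstract does not say how FDINet separates an image into low- and high-frequency components. As a rough illustration of the idea only, the sketch below assumes a simple box-filter low-pass (mean over a small window, edges clamped) and takes the residual as the high-frequency part, so the two components sum back to the original image. The function names and the `radius` parameter are hypothetical.

```python
def box_blur(img, radius=1):
    """Low-pass filter: mean over a (2r+1)x(2r+1) window, edges clamped."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total, count = 0.0, 0
            for di in range(-radius, radius + 1):
                for dj in range(-radius, radius + 1):
                    # Clamp coordinates so border pixels reuse their neighbors.
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    total += img[ii][jj]
                    count += 1
            out[i][j] = total / count
    return out


def frequency_split(img, radius=1):
    """Return (low, high): a blurred copy holding coarse, holistic structure
    and the residual holding edges and fine detail. low + high reproduces
    img up to floating-point rounding."""
    low = box_blur(img, radius)
    high = [[p - q for p, q in zip(row, low_row)] for row, low_row in zip(img, low)]
    return low, high


# Usage: a vertical edge. The edge energy shows up in `high`.
img = [[0, 0, 9], [0, 0, 9], [0, 0, 9]]
low, high = frequency_split(img)
```

In a two-branch design like the one described, each component would feed its own lightweight network; a learned or wavelet/Fourier-based split would be equally plausible choices.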
