Hamid Reza Boveiri

Medical image registration using unsupervised deep neural network: A scoping literature review

Aug 03, 2022

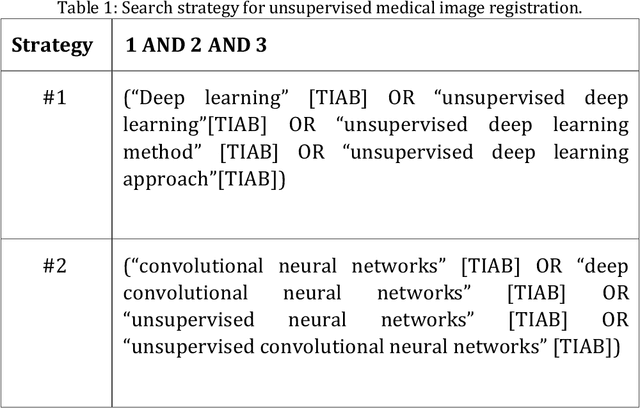

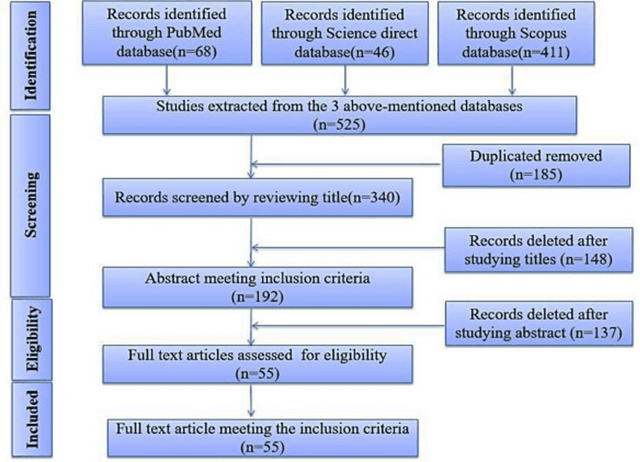

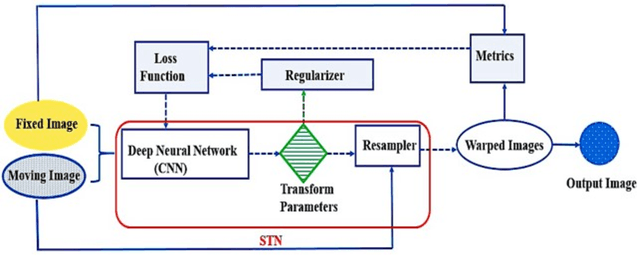

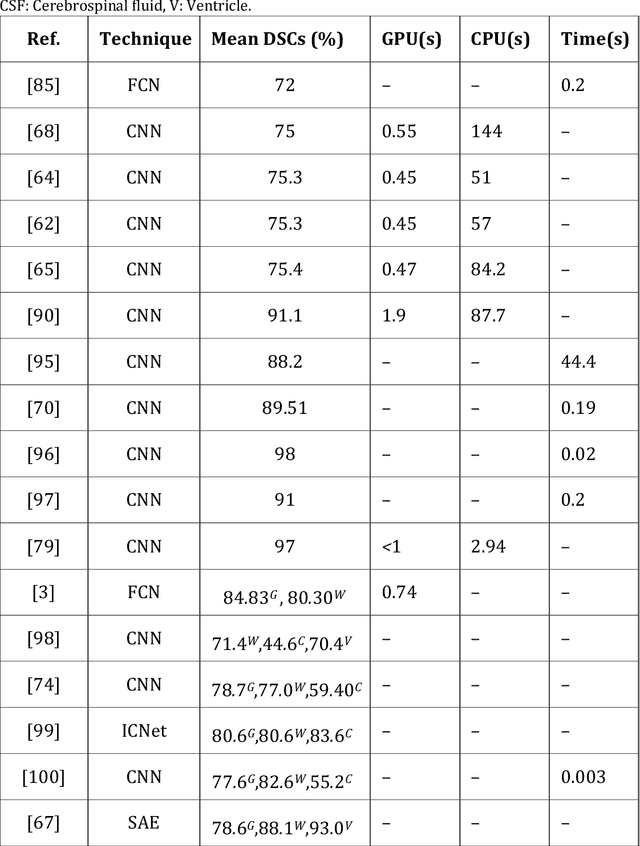

Abstract: In medicine, image registration is vital in image-guided interventions and other clinical applications. However, it is a difficult problem to address. With the advent of machine learning, considerable progress in algorithmic performance has recently been achieved for medical image registration. The implementation of deep neural networks provides an opportunity for some medical applications, such as conducting image registration in less time with high accuracy, which plays a key role in countering tumors during an operation. The current study presents a comprehensive scoping review of the state-of-the-art literature on medical image registration based on unsupervised deep neural networks, encompassing all the related studies published in this field to date. Here, we summarize the latest developments and applications of unsupervised deep learning-based registration methods in the medical field. Fundamental concepts, techniques, statistical analysis from different viewpoints, novelties, and future directions are discussed in detail. In addition, this review aims to help readers active in this field gain deep insight into it.

Medical Image Registration Using Deep Neural Networks: A Comprehensive Review

Feb 09, 2020

Abstract: Image-guided interventions are saving the lives of a large number of patients, and image registration should be considered the most complex issue to be tackled in them. Meanwhile, the recent huge progress in machine learning, made possible by implementing deep neural networks on contemporary many-core GPUs, has opened up a promising window for tackling many medical applications, and registration is no exception. In this paper, a comprehensive review of the state-of-the-art literature on medical image registration using deep neural networks is presented. The review is systematic and encompasses all the related works previously published in the field. Key concepts, statistical analysis from different points of view, challenges, novelties and main contributions, key enabling techniques, future directions, and prospective trends are all discussed and surveyed in detail. This review offers deep understanding and insight to readers active in the field who are investigating the state of the art and seeking to contribute to the future literature.

On the Performance of Metaheuristics: A Different Perspective

Jan 24, 2020

Abstract: Nowadays, we are immersed in tens of newly proposed evolutionary and swarm-intelligence metaheuristics, which makes it very difficult to choose a proper one for a specific optimization problem at hand. Moreover, most of these metaheuristics are nothing but slightly modified variants of the basic metaheuristics. For example, Differential Evolution (DE) and Shuffled Frog Leaping (SFL) are just Genetic Algorithms (GA) with a specialized operator or an extra local search, respectively. The question that arises is therefore whether the behavior of such newly proposed metaheuristics can be investigated by studying the specifications and characteristics of their ancestors. In this paper, a comprehensive evaluation study of some basic metaheuristics, i.e. Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Artificial Bee Colony (ABC), Teaching-Learning-Based Optimization (TLBO), and Cuckoo Optimization Algorithm (COA), is conducted. It gives us a deeper insight into their performance, so that we can better estimate the performance and applicability of all other variations originating from them. A large number of experiments have been conducted on 20 different combinatorial optimization benchmark functions with different characteristics. The results reveal some fundamental conclusions, along with the following ranking order among these metaheuristics: {ABC, PSO, TLBO, GA, COA}, i.e. ABC and COA are the best and the worst methods from the performance point of view, respectively. In addition, from the convergence perspective, PSO and ABC have significantly better convergence for unimodal and multimodal functions, respectively, while GA and COA converge prematurely to local optima in many cases and need alternative mutation mechanisms to enhance diversification and global search.
