Haley N. Green

Using Physiological Measures, Gaze, and Facial Expressions to Model Human Trust in a Robot Partner

Apr 07, 2025

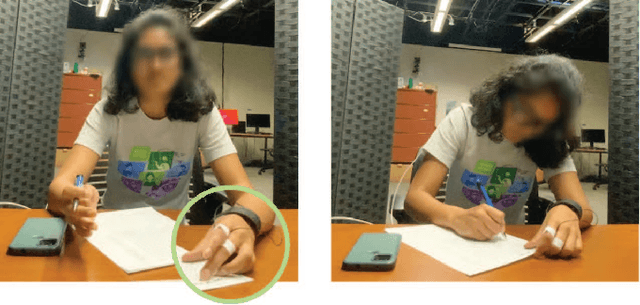

Abstract: With robots becoming increasingly prevalent in various domains, it has become crucial to equip them with tools to achieve greater fluency in interactions with humans. One of the promising areas for further exploration lies in human trust. A real-time, objective model of human trust could be used to maximize productivity, preserve safety, and mitigate failure. In this work, we attempt to use physiological measures, gaze, and facial expressions to model human trust in a robot partner. We are the first to design an in-person, human-robot supervisory interaction study to create a dedicated trust dataset. Using this dataset, we train machine learning algorithms to identify the objective measures that are most indicative of trust in a robot partner, advancing trust prediction in human-robot interactions. Our findings indicate that a combination of sensor modalities (blood volume pulse, electrodermal activity, skin temperature, and gaze) can enhance the accuracy of detecting human trust in a robot partner. Furthermore, the Extra Trees, Random Forest, and Decision Tree classifiers exhibit consistently better performance in measuring the person's trust in the robot partner. These results lay the groundwork for constructing a real-time trust model for human-robot interaction, which could foster more efficient interactions between humans and robots.

* Accepted at the IEEE International Conference on Robotics and Automation (ICRA), 2025

What Am I? Evaluating the Effect of Language Fluency and Task Competency on the Perception of a Social Robot

Oct 14, 2024

Abstract: Recent advancements in robot capabilities have enabled them to interact with people in various human-social environments (HSEs). In many of these environments, the perception of the robot often depends on its capabilities, e.g., task competency, language fluency, etc. To enable fluent human-robot interaction (HRI) in HSEs, it is crucial to understand the impact of these capabilities on the perception of the robot. Although many works have investigated the effects of various robot capabilities on the robot's perception separately, in this paper, we present a large-scale HRI study (n = 60) to investigate the combined impact of both language fluency and task competency on the perception of a robot. The results suggest that while language fluency may play a more significant role than task competency in the perception of the verbal competency of a robot, both language fluency and task competency contribute to the perception of the intelligence and reliability of the robot. The results also indicate that task competency may play a more significant role than language fluency in the perception of meeting expectations and being a good teammate. The findings of this study highlight the relationship between language fluency and task competency in the context of social HRI and will enable the development of more intelligent robots in the future.

* Accepted at the IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2024

CoHRT: A Collaboration System for Human-Robot Teamwork

Oct 11, 2024

Abstract: Collaborative robots are increasingly deployed alongside humans in factories, hospitals, schools, and other domains to enhance teamwork and efficiency. Systems that seamlessly integrate humans and robots into cohesive teams for coordinated and efficient task execution are needed, enabling studies on how robot collaboration policies affect team performance and teammates' perceived fairness, trust, and safety. Such a system can also be utilized to study the impact of a robot's normative behavior on team collaboration. Additionally, it allows for investigation into how the legibility and predictability of robot actions affect human-robot teamwork and perceived safety and trust. Existing systems are limited, typically involving one human and one robot, and thus require more insight into broader team dynamics. Many rely on games or virtual simulations, neglecting the impact of a robot's physical presence. Most tasks are turn-based, hindering simultaneous execution and affecting efficiency. This paper introduces CoHRT (Collaboration System for Human-Robot Teamwork), which facilitates multi-human-robot teamwork through seamless collaboration, coordination, and communication. CoHRT utilizes a server-client-based architecture, a vision-based system to track task environments, and a simple interface for team action coordination. It allows for the design of tasks considering the human teammates' physical and mental workload and varied skill labels across the team members. We used CoHRT to design a collaborative block manipulation and jigsaw puzzle-solving task in a team of one Franka Emika Panda robot and two humans. The system enables recording multi-modal collaboration data to develop adaptive collaboration policies for robots. To further utilize CoHRT, we outline potential research directions in diverse human-robot collaborative tasks.