Hakan Ali Cirpan

Deep Semantic Segmentation for Multi-Source Localization Using Angle of Arrival Measurements

Jun 11, 2025

Abstract:This paper presents a solution for multi-source localization using only angle of arrival measurements. The receiver platform is in motion, while the sources are assumed to be stationary. Although numerous methods exist for single-source localization, many relying on pseudo-linear formulations or non-convex optimization techniques, there remains a significant gap in research addressing multi-source localization in dynamic environments. To bridge this gap, we propose a deep learning-based framework that leverages semantic segmentation models for multi-source localization. Specifically, we employ UNet and UNetPP as backbone models, processing input images that encode the platform's positions along with the corresponding direction finding lines at each position. By analyzing the intersections of these lines, the models effectively identify and localize multiple sources. Through simulations, we evaluate both single- and multi-source localization scenarios. Our results demonstrate that while the proposed approach performs comparably to traditional methods in single-source localization, it achieves accurate source localization even in challenging conditions with high noise levels and an increased number of sources.
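For context, the pseudo-linear formulation mentioned above as a classical single-source baseline can be sketched in a few lines. This is not the paper's segmentation method; it is a minimal illustration that assumes a 2-D geometry, bearings measured counter-clockwise from the x-axis, and no measurement noise. The function name `pseudo_linear_aoa_fix` and the example track are hypothetical. Each bearing line through platform position (x_i, y_i) gives one linear equation sin(θ_i)·s_x − cos(θ_i)·s_y = sin(θ_i)·x_i − cos(θ_i)·y_i in the unknown source position s, and the stacked equations are solved in the least-squares sense.

```python
import math


def pseudo_linear_aoa_fix(positions, bearings):
    """Least-squares intersection of bearing lines (single stationary source).

    positions: iterable of (x, y) platform positions.
    bearings:  iterable of angles in radians, measured from the x-axis.
    Returns the estimated source position (s_x, s_y).
    """
    # Accumulate the 2x2 normal equations (A^T A) s = A^T b,
    # where row i of A is [sin(theta_i), -cos(theta_i)] and b_i = A_i . p_i.
    s11 = s12 = s22 = t1 = t2 = 0.0
    for (x, y), theta in zip(positions, bearings):
        a1, a2 = math.sin(theta), -math.cos(theta)
        b = a1 * x + a2 * y
        s11 += a1 * a1
        s12 += a1 * a2
        s22 += a2 * a2
        t1 += a1 * b
        t2 += a2 * b

    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        # All bearing lines are (nearly) parallel, so no unique intersection.
        raise ValueError("bearing lines are parallel; geometry is degenerate")

    # Closed-form inverse of the symmetric 2x2 matrix.
    s_x = (s22 * t1 - s12 * t2) / det
    s_y = (s11 * t2 - s12 * t1) / det
    return s_x, s_y


# Hypothetical example: a moving platform observes one stationary source.
source = (40.0, 25.0)
track = [(0.0, 0.0), (10.0, 0.0), (20.0, 5.0), (30.0, -5.0)]
bearings = [math.atan2(source[1] - y, source[0] - x) for x, y in track]
estimate = pseudo_linear_aoa_fix(track, bearings)
```

With noisy bearings the same estimator is known to be biased, and with several sources the association between bearings and sources is unknown, which is the gap the abstract describes.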

Quasisynchronous LoRa for LEO Nanosatellite Communications

Aug 01, 2023

Abstract:Perfect synchronization in LoRa communications between Low Earth Orbit (LEO) satellites and ground base stations remains challenging, despite the potential use of high-precision atomic clocks in LEO satellites. Even when atomic clocks are incorporated in LEO satellites and their inherent precision is leveraged to enhance the overall synchronization process, perfect synchronization is infeasible due to a combination of factors such as signal propagation delay, Doppler effects, clock drift, and atmospheric effects. These challenges require the development of advanced synchronization techniques and algorithms to mitigate their effects and ensure reliable communication to and from LEO satellites. However, by maintaining acceptable levels of synchronization rather than striving for perfection, quasisynchronous (QS) communication can be adopted, which maintains communication reliability, improves resource utilization, reduces power consumption, and ensures scalability as more devices join the communication. Overall, QS communication offers a practical, adaptive, and robust solution that enables LEO satellite communications to support the growing demands of IoT applications and global connectivity. In our investigation, we explore different chip waveforms such as rectangular and raised cosine. Furthermore, for the first time, we study the Symbol Error Rate (SER) performance of QS LoRa communication, for different spreading factors (SF), over Additive White Gaussian Noise (AWGN) channels.

Time-Frequency Warped Waveforms for Well-Contained Massive Machine Type Communications

May 01, 2023

Abstract:This paper proposes a novel time-frequency warped waveform for short symbols, massive machine-type communication (mMTC), and internet of things (IoT) applications. The waveform is composed of asymmetric raised cosine (RC) pulses to increase the signal containment in time and frequency domains. The waveform has low power tails in the time domain, hence better performance in the presence of delay spread and time offsets. The time-axis warping unitary transform is applied to control the waveform occupancy in time-frequency space and to compensate for the usage of high roll-off factor pulses at the symbol edges. The paper explains a step-by-step analysis for determining the roll-off factors profile and the warping functions. Gains are presented over the conventional Zero-tail Discrete Fourier Transform-spread-Orthogonal Frequency Division Multiplexing (ZT-DFT-s-OFDM), and Cyclic prefix (CP) DFT-s-OFDM schemes in the simulations section.

Offloading Deep Learning Powered Vision Tasks from UAV to 5G Edge Server with Denoising

Feb 03, 2023

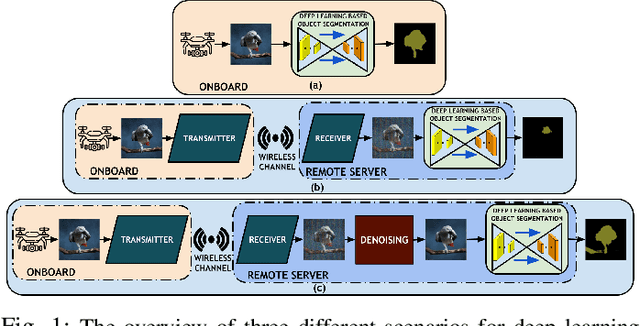

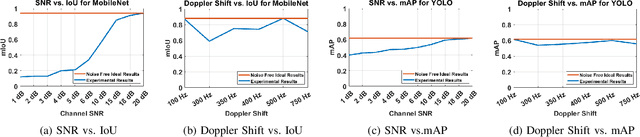

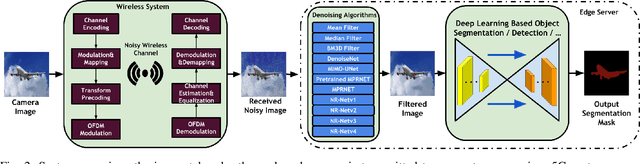

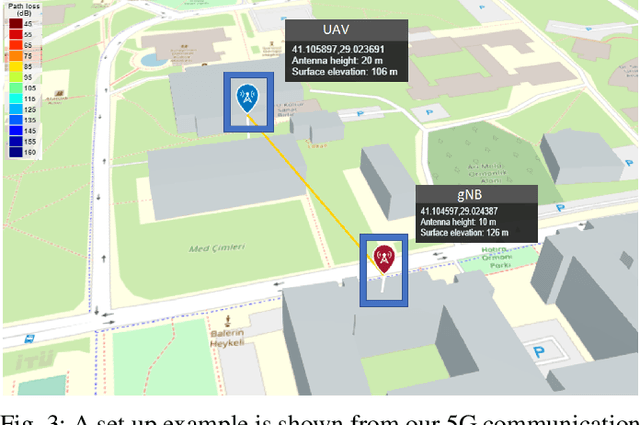

Abstract:Offloading computationally heavy tasks from an unmanned aerial vehicle (UAV) to a remote server helps improve battery life and can help reduce resource requirements. Deep learning based state-of-the-art computer vision tasks, such as object segmentation and object detection, are computationally heavy algorithms, requiring large memory and computing power. Many UAVs use (pretrained) off-the-shelf versions of such algorithms. Offloading such power-hungry algorithms to a remote server could help UAVs save power significantly. However, deep learning based algorithms are susceptible to noise, and a wireless communication system, by its nature, introduces noise to the original signal. When the signal represents an image, noise affects the image. There has not been much work studying the effect of the noise introduced by the communication system on pretrained deep networks. In this work, we first analyze how reliable it is to offload deep learning based computer vision tasks (including both object segmentation and detection) by focusing on the effect of various parameters of a 5G wireless communication system on the transmitted image, and we demonstrate how the noise introduced by the 5G wireless communication system reduces the performance of the offloaded deep learning task. Solutions are then introduced to eliminate (or reduce) the negative effect of the noise. The proposed framework first introduces several classical techniques as alternative solutions, and then introduces a novel deep learning based solution to denoise the given noisy input image. The performance of various denoising algorithms on offloading both object segmentation and object detection tasks is compared. Our proposed deep transformer-based denoiser algorithm (NR-Net) yields state-of-the-art results in reducing the negative effect of the noise in our experiments.

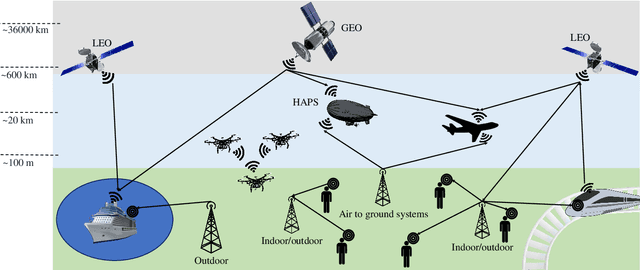

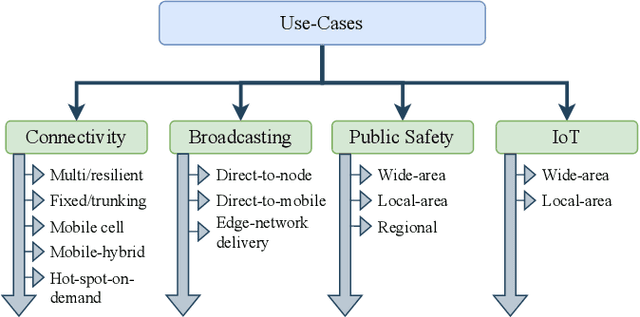

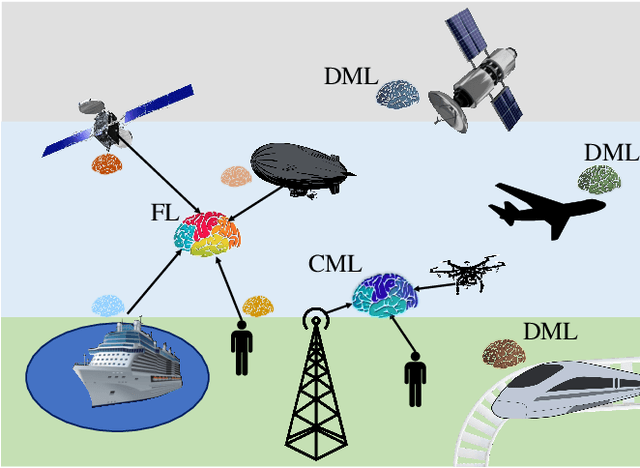

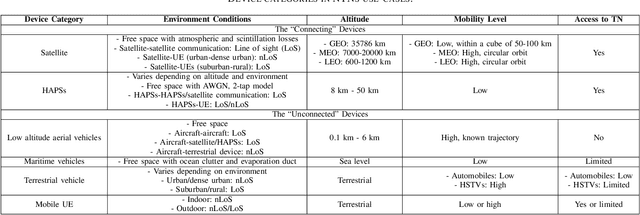

Centralized and Decentralized ML-Enabled Integrated Terrestrial and Non-Terrestrial Networks

Jul 22, 2022

Abstract:Non-terrestrial networks (NTNs) are a critical enabler of the persistent connectivity vision of sixth-generation networks, as they can service areas where terrestrial infrastructure falls short. However, the integration of these networks with the terrestrial network is laden with obstacles. The dynamic nature of NTN communication scenarios and numerous variables render conventional model-based solutions computationally costly and impracticable for resource allocation, parameter optimization, and other problems. Machine learning (ML)-based solutions, thus, can perform a pivotal role due to their inherent ability to uncover the hidden patterns in time-varying, multi-dimensional data with superior performance and less complexity. Centralized ML (CML) and decentralized ML (DML), named so based on the distribution of the data and computational load, are two classes of ML that are being studied as solutions for the various complications of terrestrial and non-terrestrial networks (TNTN) integration. Both have their benefits and drawbacks under different circumstances, and it is integral to choose the appropriate ML approach for each TNTN integration issue. To this end, this paper goes over the TNTN integration architectures as given in the 3rd generation partnership project standard releases, proposing possible scenarios. Then, the capabilities and challenges of CML and DML are explored from the vantage point of these scenarios.

Identification of Distorted RF Components via Deep Multi-Task Learning

Jul 04, 2022

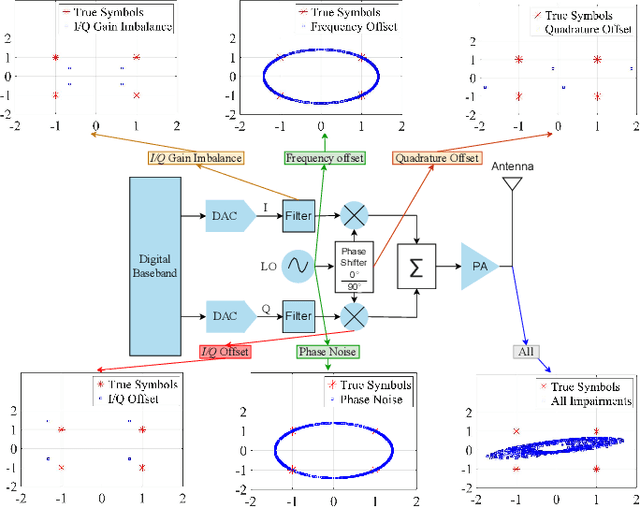

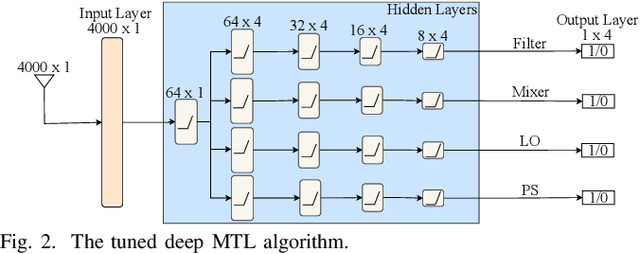

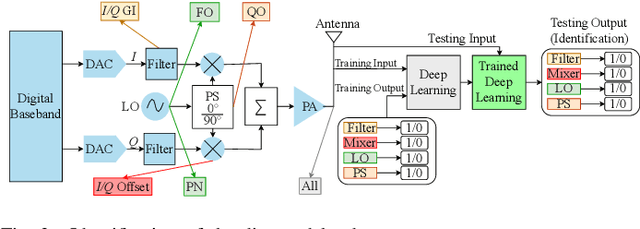

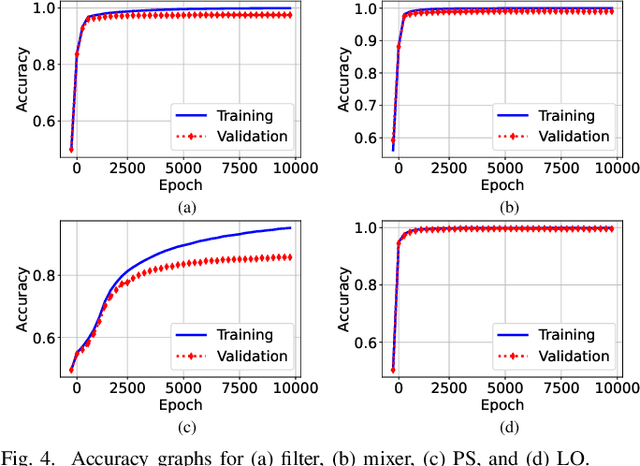

Abstract:High-quality radio frequency (RF) components are imperative for efficient wireless communication. However, these components can degrade over time and need to be identified so that either they can be replaced or their effects can be compensated. The identification of these components can be done through observation and analysis of constellation diagrams. However, in the presence of multiple distortions, it is very challenging to isolate and identify the RF components responsible for the degradation. This paper highlights the difficulties of distorted RF components' identification and their importance. Furthermore, a deep multi-task learning algorithm is proposed to identify the distorted components in the challenging scenario. Extensive simulations show that the proposed algorithm can automatically detect multiple distorted RF components with high accuracy in different scenarios.

Deep Learning-Aided Spatial Multiplexing with Index Modulation

Feb 06, 2022

Abstract:In this paper, deep learning (DL)-aided data detection of spatial multiplexing (SMX) multiple-input multiple-output (MIMO) transmission with index modulation (IM) (Deep-SMX-IM) is proposed. Deep-SMX-IM is constructed by combining a zero-forcing (ZF) detector with a DL technique. The proposed method uses the significant advantages of DL techniques to learn transmission characteristics of the frequency and spatial domains. Furthermore, thanks to the subblock-based detection provided by IM, Deep-SMX-IM is a straightforward method with reduced complexity. It is shown that Deep-SMX-IM achieves significant error performance gains compared to the ZF detector without increasing computational complexity for different system configurations.

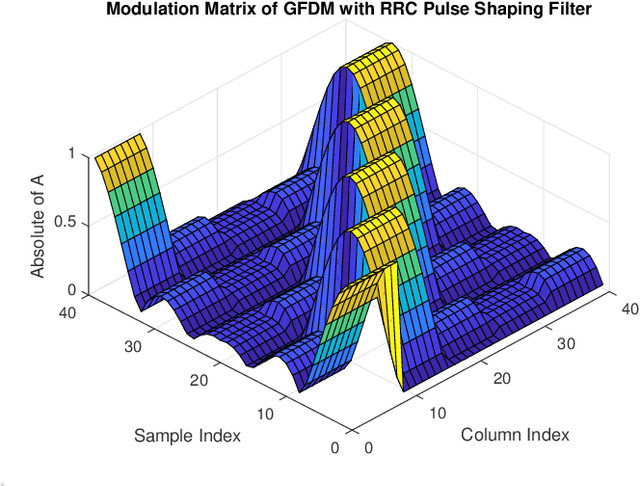

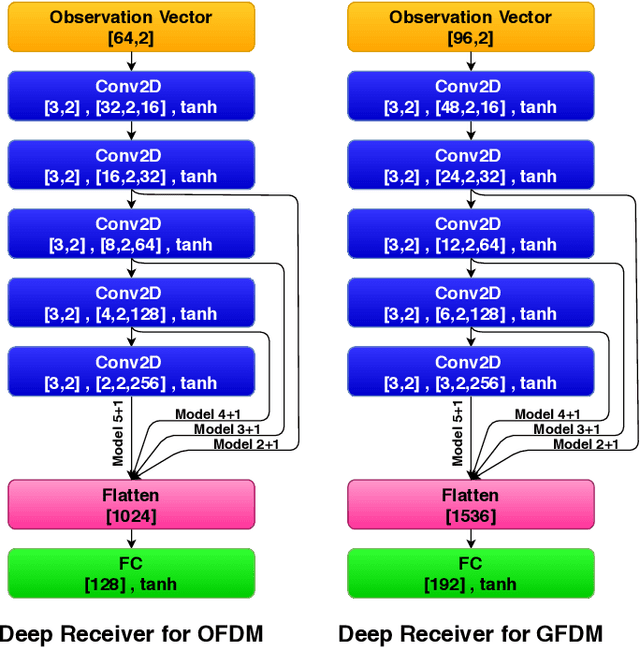

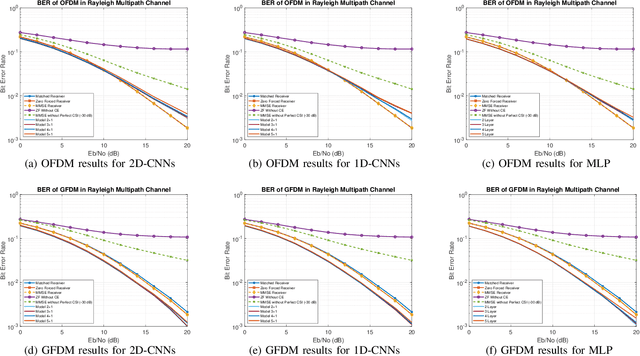

Deep Receiver Design for Multi-carrier Waveforms Using CNNs

Jun 02, 2020

Abstract:In this paper, a deep learning based receiver is proposed for a collection of multi-carrier waveforms including both current and next-generation wireless communication systems. In particular, we propose to use a convolutional neural network (CNN) for joint detection and demodulation of the received signal at the receiver in wireless environments. We compare our proposed architecture to the classical methods and demonstrate that our proposed CNN-based architecture can perform better on different multi-carrier waveforms, including OFDM and GFDM, in various simulations. Furthermore, we compare the total number of required parameters for each network in terms of memory requirements.
