Haibin Zhao

Explainable Deep Learning Framework for Human Activity Recognition

Aug 21, 2024

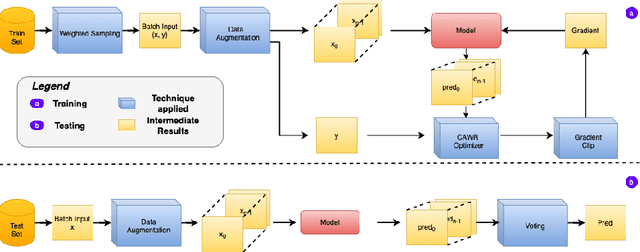

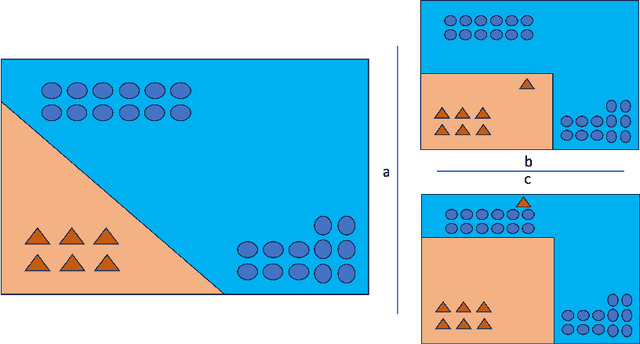

Abstract: In the realm of human activity recognition (HAR), the integration of explainable Artificial Intelligence (XAI) emerges as a critical necessity to elucidate the decision-making processes of complex models, fostering transparency and trust. Traditional explanatory methods like Class Activation Mapping (CAM) and attention mechanisms, although effective in highlighting regions vital for decisions in various contexts, prove inadequate for HAR. This inadequacy stems from the inherently abstract nature of HAR data, which renders these explanations obscure. In contrast, state-of-the-art post-hoc interpretation techniques for time series can explain the model from other perspectives. However, this requires extra effort: generating an explanation usually takes 10 to 20 seconds. To overcome these challenges, we propose a novel, model-agnostic framework that enhances both the interpretability and efficacy of HAR models through the strategic use of competitive data augmentation. This approach does not rely on any particular model architecture, thereby broadening its applicability across various HAR models. By implementing competitive data augmentation, our framework provides intuitive and accessible explanations of model decisions, thereby significantly advancing the interpretability of HAR systems without compromising on performance.

Standardizing Your Training Process for Human Activity Recognition Models: A Comprehensive Review in the Tunable Factors

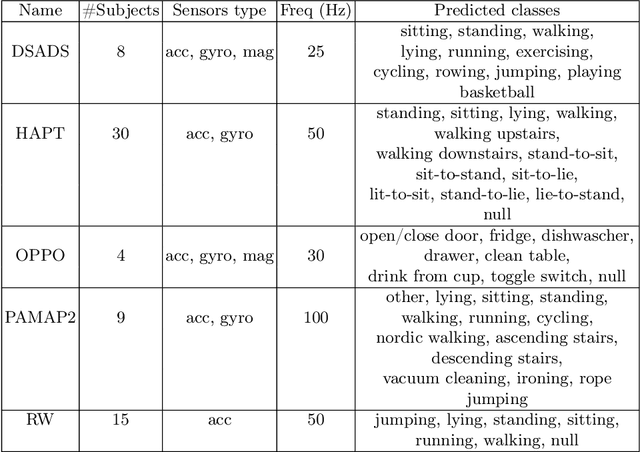

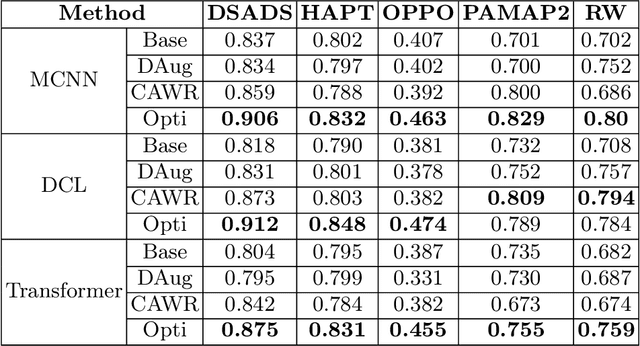

Jan 10, 2024

Abstract: In recent years, deep learning has emerged as a potent tool across a multitude of domains, leading to a surge in research pertaining to its application in the wearable human activity recognition (WHAR) domain. Despite the rapid development, concerns have been raised about the lack of standardization and consistency in the procedures used for experimental model training, which may affect the reproducibility and reliability of research results. In this paper, we provide an exhaustive review of contemporary deep learning research in the field of WHAR and collate information pertaining to the training procedures employed in various studies. Our findings suggest that a major trend is the lack of detail provided in model training protocols. In addition, to gain a clearer understanding of the impact of missing descriptions, we utilize a control variables approach to assess the impact of key tunable components (e.g., optimization techniques and early stopping criteria) on the inter-subject generalization capabilities of HAR models. With insights from the analyses, we define a novel integrated training procedure tailored to WHAR models. Empirical results derived using five well-known WHAR benchmark datasets and three classical HAR model architectures demonstrate the effectiveness of our proposed methodology: in particular, there is a significant improvement in macro F1 under leave-one-subject-out cross-validation.
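The evaluation protocol named in the abstract above, macro F1 under leave-one-subject-out cross-validation, can be illustrated with a minimal sketch. This is not the authors' code: the function names (`macro_f1`, `loso_splits`, `loso_macro_f1`, `majority_baseline`) and the `fit_predict` callback are hypothetical, chosen only for illustration, and a trivial majority-class baseline stands in for a real HAR model.

```python
from collections import Counter


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all classes seen in either list."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def loso_splits(subjects):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for s in sorted(set(subjects)):
        train = [i for i, x in enumerate(subjects) if x != s]
        test = [i for i, x in enumerate(subjects) if x == s]
        yield s, train, test


def loso_macro_f1(X, y, subjects, fit_predict):
    """Average the per-fold macro F1; fit_predict(X_tr, y_tr, X_te) returns predictions."""
    fold_scores = []
    for _, tr, te in loso_splits(subjects):
        preds = fit_predict([X[i] for i in tr], [y[i] for i in tr], [X[i] for i in te])
        fold_scores.append(macro_f1([y[i] for i in te], preds))
    return sum(fold_scores) / len(fold_scores)


def majority_baseline(X_tr, y_tr, X_te):
    """Placeholder model (assumption): always predict the most common training label."""
    top = Counter(y_tr).most_common(1)[0][0]
    return [top] * len(X_te)
```

Because every fold holds out all windows from one subject, the score reflects generalization to unseen people rather than to unseen windows from people already in the training set. Macro averaging weights each activity class equally, so rare activities count as much as frequent ones.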
