Hai Siong Tan

Evidential Physics-Informed Neural Networks

Jan 27, 2025

Abstract: We present a novel class of Physics-Informed Neural Networks that is formulated based on the principles of Evidential Deep Learning, where the model incorporates uncertainty quantification by learning parameters of a higher-order distribution. The dependent and trainable variables of the PDE residual loss and data-fitting loss terms are recast as functions of the hyperparameters of an evidential prior distribution. Our model is equipped with an information-theoretic regularizer that contains the Kullback-Leibler divergence between two inverse-gamma distributions characterizing predictive uncertainty. Relative to Bayesian Physics-Informed Neural Networks, our framework appeared to exhibit higher sensitivity to data noise, preserve boundary conditions more faithfully and yield empirical coverage probabilities closer to nominal ones. Toward examining its relevance for data mining in scientific discoveries, we demonstrate how to apply our model to inverse problems involving 1D and 2D nonlinear differential equations.
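The Kullback-Leibler divergence between two inverse-gamma distributions mentioned in the abstract has a standard closed form, which the following minimal sketch evaluates. It assumes the shape/scale parameterization IG(a, b); the function names `digamma` and `kl_inverse_gamma` are illustrative and not taken from the paper, and how the paper weights or combines this term in its regularizer is not specified here.

```python
import math


def digamma(x: float) -> float:
    """Digamma function for x > 0 (recurrence plus asymptotic series)."""
    if x <= 0:
        raise ValueError("x must be positive")
    result = 0.0
    # Shift x upward using psi(x) = psi(x + 1) - 1/x until the series is accurate.
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    # psi(x) ~ ln x - 1/(2x) - 1/(12x^2) + 1/(120x^4) - 1/(252x^6)
    result += math.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))
    return result


def kl_inverse_gamma(a1: float, b1: float, a2: float, b2: float) -> float:
    """KL( IG(a1, b1) || IG(a2, b2) ) with shape a and scale b.

    The expression matches the KL divergence between Gamma(a, rate=b)
    distributions, because KL is invariant under the map x -> 1/x.
    """
    return (
        (a1 - a2) * digamma(a1)
        - math.lgamma(a1)
        + math.lgamma(a2)
        + a2 * (math.log(b1) - math.log(b2))
        + a1 * (b2 - b1) / b1
    )
```

The divergence is zero when both distributions coincide and positive otherwise, which is what lets it act as a penalty on the distance between the two distributions.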

Uncertainty-Error correlations in Evidential Deep Learning models for biomedical segmentation

Oct 24, 2024

Abstract: In this work, we examine the effectiveness of an uncertainty quantification framework known as Evidential Deep Learning applied in the context of biomedical image segmentation. This class of models involves assigning Dirichlet distributions as priors for segmentation labels, and enables a few distinct definitions of model uncertainties. Using the cardiac and prostate MRI images available in the Medical Segmentation Decathlon for validation, we found that Evidential Deep Learning models with U-Net backbones generally yielded superior correlations between prediction errors and uncertainties relative to the conventional baseline equipped with Shannon entropy measure, Monte-Carlo Dropout and Deep Ensemble methods. We also examined these models' effectiveness in active learning, finding that relative to the standard Shannon entropy-based sampling, they yielded higher point-biserial uncertainty-error correlations while attaining similar performances in Dice-Sorensen coefficients. These superior features of EDL models render them well-suited for segmentation tasks that warrant a critical sensitivity in detecting large model errors.
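As a rough illustration of the point-biserial uncertainty-error correlation named in the abstract, the sketch below computes the standard point-biserial coefficient between a binary error indicator and a continuous uncertainty score. Treating each voxel's error as a 0/1 flag is an assumption made for illustration; the function name `point_biserial` and the toy data are hypothetical and not from the paper.

```python
import math


def point_biserial(is_error, uncertainty):
    """Point-biserial correlation between a 0/1 error flag and an uncertainty score."""
    n = len(is_error)
    if n != len(uncertainty):
        raise ValueError("inputs must have the same length")
    errors = [u for e, u in zip(is_error, uncertainty) if e]
    correct = [u for e, u in zip(is_error, uncertainty) if not e]
    n1, n0 = len(errors), len(correct)
    if n1 == 0 or n0 == 0:
        raise ValueError("both groups must be non-empty")
    mean_all = sum(uncertainty) / n
    # Population standard deviation of the uncertainty over all samples.
    std_all = math.sqrt(sum((u - mean_all) ** 2 for u in uncertainty) / n)
    if std_all == 0.0:
        raise ValueError("uncertainty has zero variance")
    mean_err = sum(errors) / n1
    mean_ok = sum(correct) / n0
    return (mean_err - mean_ok) / std_all * math.sqrt(n1 * n0 / (n * n))


# Toy example: misclassified voxels carry higher uncertainty.
r = point_biserial([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9])
```

A value near 1 indicates that high uncertainty coincides with errors; the coefficient is numerically identical to the Pearson correlation when one variable is binary.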

Deep Evidential Learning for Dose Prediction

Apr 26, 2024

Abstract: In this work, we present a novel application of an uncertainty-quantification framework called Deep Evidential Learning in the domain of radiotherapy dose prediction. Using medical images of the Open Knowledge-Based Planning Challenge dataset, we found that this model can be effectively harnessed to yield uncertainty estimates that inherited correlations with prediction errors upon completion of network training. This was achieved only after reformulating the original loss function for a stable implementation. We found that (i) epistemic uncertainty was highly correlated with prediction errors, with various association indices comparable to or stronger than those for Monte-Carlo Dropout and Deep Ensemble methods, (ii) the median error varied with uncertainty threshold much more linearly for epistemic uncertainty in Deep Evidential Learning relative to these other two conventional frameworks, indicative of a more uniformly calibrated sensitivity to model errors, and (iii) relative to epistemic uncertainty, aleatoric uncertainty demonstrated a more significant shift in its distribution in response to Gaussian noise added to CT intensity, compatible with its interpretation as reflecting data noise. Collectively, our results suggest that Deep Evidential Learning is a promising approach that can endow deep-learning models in radiotherapy dose prediction with statistical robustness. Towards enhancing its clinical relevance, we demonstrate how we can use such a model to construct confidence intervals for the predicted Dose-Volume Histograms.

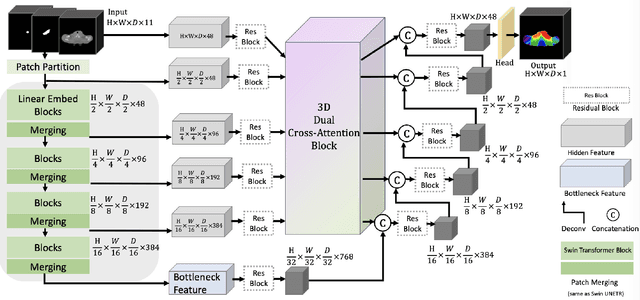

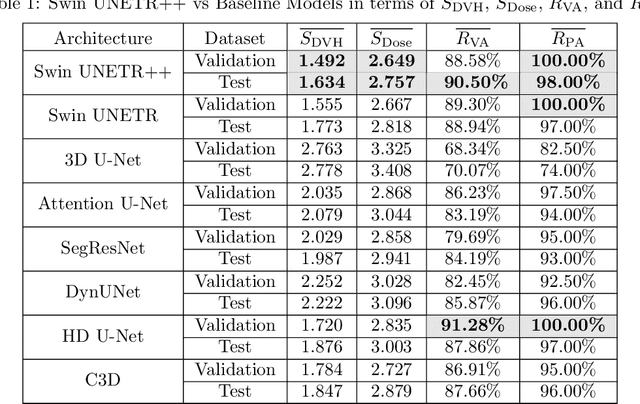

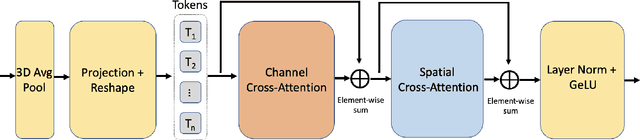

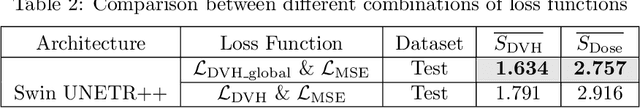

Swin UNETR++: Advancing Transformer-Based Dense Dose Prediction Towards Fully Automated Radiation Oncology Treatments

Nov 11, 2023

Abstract: The field of Radiation Oncology is uniquely positioned to benefit from the use of artificial intelligence to fully automate the creation of radiation treatment plans for cancer therapy. This time-consuming and specialized task combines patient imaging with organ and tumor segmentation to generate a 3D radiation dose distribution to meet clinical treatment goals, similar to voxel-level dense prediction. In this work, we propose Swin UNETR++, which contains a lightweight 3D Dual Cross-Attention (DCA) module to capture the intra- and inter-volume relationships of each patient's unique anatomy, which fully convolutional neural networks lack. Our model was trained, validated, and tested on the Open Knowledge-Based Planning dataset. In addition to metrics of Dose Score $\overline{S_{\text{Dose}}}$ and DVH Score $\overline{S_{\text{DVH}}}$ that quantitatively measure the difference between the predicted and ground-truth 3D radiation dose distributions, we propose the qualitative metrics of average volume-wise acceptance rate $\overline{R_{\text{VA}}}$ and average patient-wise clinical acceptance rate $\overline{R_{\text{PA}}}$ to assess the clinical reliability of the predictions. Swin UNETR++ demonstrates near-state-of-the-art performance on the validation and test datasets (validation: $\overline{S_{\text{DVH}}}$=1.492 Gy, $\overline{S_{\text{Dose}}}$=2.649 Gy, $\overline{R_{\text{VA}}}$=88.58%, $\overline{R_{\text{PA}}}$=100.0%; test: $\overline{S_{\text{DVH}}}$=1.634 Gy, $\overline{S_{\text{Dose}}}$=2.757 Gy, $\overline{R_{\text{VA}}}$=90.50%, $\overline{R_{\text{PA}}}$=98.0%), establishing a basis for future studies to translate 3D dose predictions into a deliverable treatment plan, facilitating full automation.
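The abstract does not define the Dose Score. The sketch below assumes the convention used in the Open Knowledge-Based Planning challenge, where a per-patient dose score is the mean absolute voxel-wise difference (in Gy) between predicted and reference dose inside a mask of voxels that can receive dose. The function name `dose_score` and the flattened-list inputs are hypothetical simplifications, not the paper's implementation.

```python
def dose_score(predicted, reference, mask):
    """Mean absolute dose difference (Gy) over voxels where mask is truthy.

    All three inputs are flattened, equal-length sequences (an assumption
    made to keep the sketch free of array libraries).
    """
    if not (len(predicted) == len(reference) == len(mask)):
        raise ValueError("inputs must have the same length")
    total = 0.0
    count = 0
    for p, r, m in zip(predicted, reference, mask):
        if m:
            total += abs(p - r)
            count += 1
    if count == 0:
        raise ValueError("mask selects no voxels")
    return total / count


# Toy example: the last voxel lies outside the mask and is ignored.
score = dose_score([10.0, 20.0, 30.0, 40.0], [12.0, 20.0, 27.0, 100.0], [1, 1, 1, 0])
```

Under this assumption, the reported $\overline{S_{\text{Dose}}}$ would be the average of such per-patient scores over the validation or test set.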
