Hafiq Anas

Deep Reinforcement Learning-Based Mapless Crowd Navigation with Perceived Risk of the Moving Crowd for Mobile Robots

Apr 07, 2023

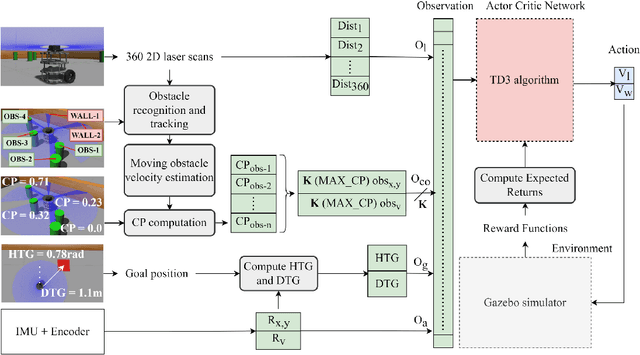

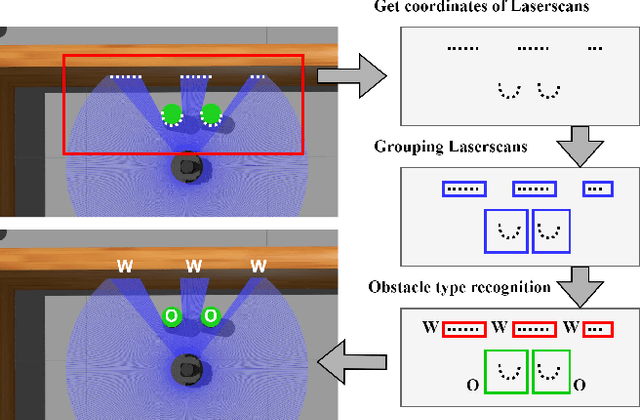

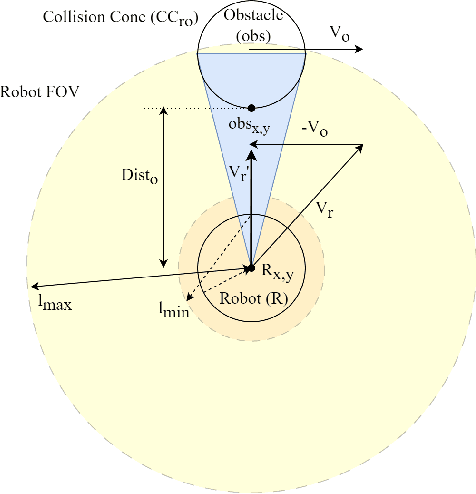

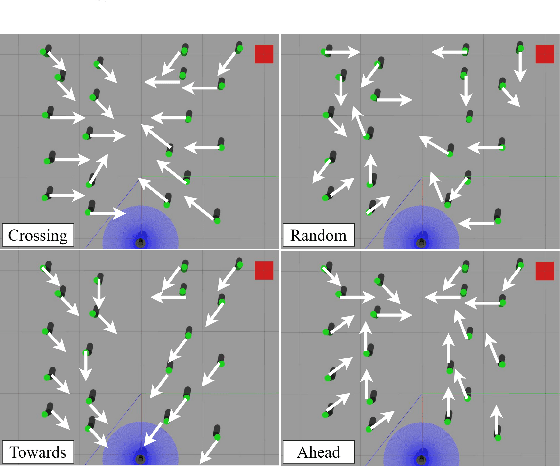

Abstract: Classical map-based navigation methods are commonly used for robot navigation, but they often struggle in crowded environments due to the Frozen Robot Problem (FRP). Deep reinforcement learning-based methods address the FRP but suffer from poor generalization and scalability. To overcome these challenges, we propose a method that uses Collision Probability (CP) to help the robot navigate safely through crowds. Including CP in the observation space gives the robot a sense of how dangerous the moving crowd is. The robot navigates through the crowd when it appears safe but takes a detour when the crowd is moving aggressively. By focusing on the most dangerous obstacle, the robot is not confused when the crowd density is high, which ensures the scalability of the model. Our approach was developed using deep reinforcement learning (DRL) and trained in the Gazebo simulator in a non-cooperative crowd environment with obstacles moving at randomized speeds and directions. We then evaluated our model on four different crowd-behavior scenarios with varying crowd densities. The results show that our method achieved a 100% success rate in all test settings. We compared our approach with a current state-of-the-art DRL-based approach, and our approach performed significantly better. Importantly, our method is highly generalizable and requires no fine-tuning after being trained once. We further demonstrated the crowd navigation capability of our model in real-world tests.
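The abstract does not say how CP is computed, so the following is only a minimal sketch of the idea of scoring each moving obstacle and keeping the most dangerous one. The `Obstacle` type, the `collision_probability` formula (a time-to-contact heuristic), and the `horizon` and `safe_radius` parameters are all hypothetical stand-ins, not the paper's definitions.

```python
import math
from dataclasses import dataclass


@dataclass
class Obstacle:
    # Position (m) and velocity (m/s) relative to the robot. Hypothetical type.
    x: float
    y: float
    vx: float
    vy: float


def collision_probability(ob, horizon=5.0, safe_radius=0.5):
    """Hypothetical risk score in [0, 1]; NOT the paper's CP definition."""
    dist = math.hypot(ob.x, ob.y)
    if dist <= safe_radius:
        return 1.0  # already inside the assumed safety radius
    # Closing speed is positive when the obstacle approaches the robot.
    closing = -(ob.x * ob.vx + ob.y * ob.vy) / dist
    if closing <= 0.0:
        return 0.0  # moving away or tangentially
    time_to_contact = (dist - safe_radius) / closing
    # Risk decays linearly to zero as contact moves beyond the horizon.
    return max(0.0, 1.0 - time_to_contact / horizon)


def most_dangerous(obstacles):
    """Return the obstacle with the highest score (expects a non-empty list)."""
    return max(obstacles, key=collision_probability)
```

Under this assumption, the observation passed to the policy would describe only the obstacle returned by `most_dangerous`, so its size stays fixed regardless of how many obstacles are present.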

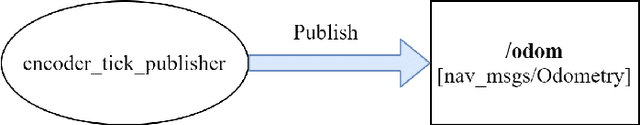

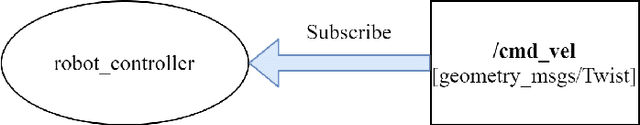

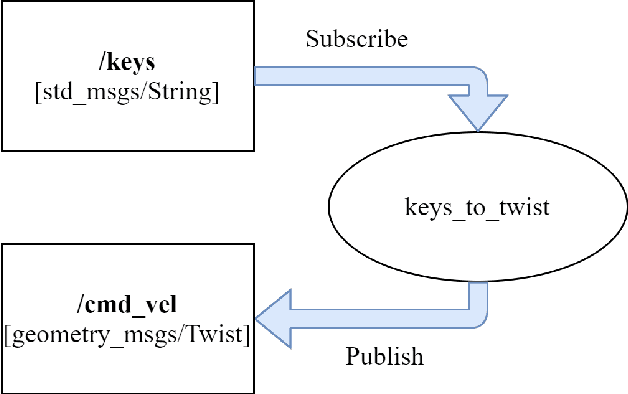

An implementation of ROS Autonomous Navigation on Parallax Eddie platform

Aug 28, 2021

Abstract: This paper presents an implementation of autonomous navigation functionality based on the Robot Operating System (ROS) on a wheeled differential-drive mobile platform called the Eddie robot. ROS is a framework that contains many reusable software stacks as well as visualization and debugging tools, which together provide an ideal environment for robotics project development. The main contribution of this paper is a description of the customized hardware and software setup that allows the Eddie robot to work with the ROS autonomous navigation system, the Navigation Stack, and the implementation of one application use case for autonomous navigation. Photo taking is chosen as the use case to demonstrate the mobile robot.

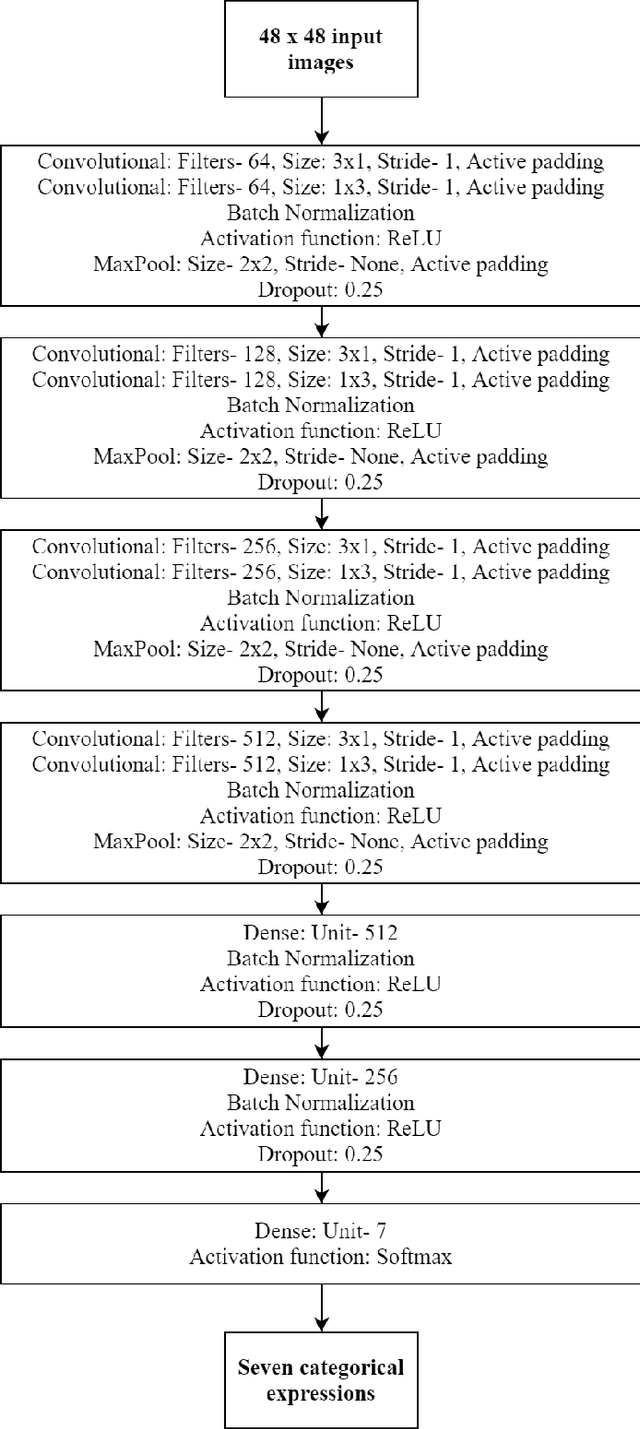

Deep Convolutional Neural Network Based Facial Expression Recognition in the Wild

Oct 03, 2020

Abstract: This paper describes the proposed methodology, the data used, and the results of our participation in Challenge Track 2 (Expr Challenge Track) of the Affective Behavior Analysis in-the-wild (ABAW) Competition 2020. In this competition, we used our proposed deep convolutional neural network (CNN) model to perform automatic facial expression recognition (AFER) on the given dataset. Our proposed model achieved an accuracy of 50.77% and an F1 score of 29.16% on the validation set.
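A gap between accuracy and F1 like the one reported above is typical when classes are imbalanced. The abstract does not say how the F1 score is averaged; assuming a macro average over classes, the sketch below shows on made-up toy labels (not the competition data) how a classifier can score well on accuracy while its macro F1 stays low.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (macro averaging is an assumption)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy, imbalanced labels: the classifier always predicts the majority class.
y_true = ["neutral"] * 8 + ["happy", "sad"]
y_pred = ["neutral"] * 10

print(f"accuracy = {accuracy(y_true, y_pred):.2f}")
print(f"macro F1 = {macro_f1(y_true, y_pred):.2f}")
```

In this toy case the two minority classes each contribute an F1 of zero, which pulls the macro average far below the accuracy.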
