Guy Gilboa

Identifying Memorization of Diffusion Models through p-Laplace Analysis

May 13, 2025

Abstract: Diffusion models, today's leading image generative models, estimate the score function, i.e., the gradient of the log probability of (perturbed) data samples, without direct access to the underlying probability distribution. This work investigates whether the estimated score function can be leveraged to compute higher-order differentials, namely p-Laplace operators. We show that these operators can be employed to identify memorized training data. We propose a numerical p-Laplace approximation based on the learned score functions, showing its effectiveness in identifying key features of the probability landscape. We analyze the structured case of Gaussian mixture models and demonstrate that the results carry over to image generative models, where memorization identification based on the p-Laplace operator is performed for the first time.
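To make the idea of computing a p-Laplace operator from a score function concrete, here is a minimal sketch. It uses the standard definition, div(|grad u|^(p-2) grad u), and assumes the operator is applied to the log-density u = log p, so that grad u is the score. The isotropic Gaussian mixture, the central finite-difference divergence, and the names `gmm_score` and `p_laplace` are illustrative assumptions, not the paper's numerical scheme.

```python
import numpy as np

def gmm_score(x, means, sigma, weights):
    """Analytic score (gradient of log-density) of an isotropic Gaussian mixture.

    x: (d,) point, means: (K, d), sigma: shared std, weights: (K,) mixing weights.
    """
    diffs = means - x                                   # (K, d)
    log_r = np.log(weights) - np.sum(diffs**2, axis=1) / (2 * sigma**2)
    r = np.exp(log_r - log_r.max())                     # stable responsibilities
    r /= r.sum()
    return (r[:, None] * diffs).sum(axis=0) / sigma**2

def p_laplace(score_fn, x, p=2.0, h=1e-3):
    """Finite-difference divergence of |s|^(p-2) * s, where s is the score.

    Assumption: central differences with step h; for p < 2 the flux is
    singular where the score vanishes, so this sketch is meant for p >= 2.
    """
    def flux(y):
        s = score_fn(y)
        return np.linalg.norm(s) ** (p - 2) * s

    div = 0.0
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        div += (flux(x + e)[i] - flux(x - e)[i]) / (2 * h)
    return div

# Hypothetical two-component mixture in 2D.
means = np.array([[-2.0, 0.0], [2.0, 0.0]])
weights = np.array([0.5, 0.5])
score = lambda y: gmm_score(y, means, 0.5, weights)
lap_at_mode = p_laplace(score, np.array([2.0, 0.0]), p=2.0)
```

For p = 2 this reduces to the ordinary Laplacian of the log-density. With a trained diffusion model, `score_fn` would be replaced by the learned score at a chosen noise level, which is an assumption about usage rather than something stated in the abstract.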

Whitened CLIP as a Likelihood Surrogate of Images and Captions

May 11, 2025

Abstract: Likelihood approximations for images are not trivial to compute and can be useful in many applications. We examine the use of Contrastive Language-Image Pre-training (CLIP) to assess the likelihood of images and captions. We introduce Whitened CLIP, a novel transformation of the CLIP latent space via an invertible linear operation. This transformation ensures that each feature in the embedding space has zero mean, unit standard deviation, and no correlation with any other feature, resulting in an identity covariance matrix. We show that the statistics of the whitened embeddings can be well approximated by a standard normal distribution, so the log-likelihood is estimated simply from the squared Euclidean norm in the whitened embedding space. The whitening procedure is completely training-free and uses a pre-computed whitening matrix, and is therefore very fast. We present several preliminary experiments demonstrating the properties and applicability of these likelihood scores to images and captions.

* Accepted to ICML 2025. This version matches the camera-ready version
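The whitening step described in the Whitened CLIP abstract can be sketched as follows. This is a minimal illustration assuming PCA-style whitening from the eigendecomposition of the sample covariance; the abstract only states that the transform is an invertible linear operation, so the specific choice of whitening matrix, the function names, and the synthetic data standing in for CLIP embeddings are all assumptions.

```python
import numpy as np

def fit_whitening(X, eps=1e-12):
    """Return the mean and a whitening matrix W estimated from rows of X."""
    mu = X.mean(axis=0)
    cov = np.cov(X - mu, rowvar=False)
    evals, evecs = np.linalg.eigh(cov)        # cov = evecs @ diag(evals) @ evecs.T
    W = evecs / np.sqrt(evals + eps)          # scale each eigenvector column
    return mu, W

def whiten(X, mu, W):
    """Map embeddings to a space with zero mean and identity covariance."""
    return (X - mu) @ W

def log_likelihood(Z):
    """Standard-normal log-density of whitened embeddings.

    Up to an additive constant this is -0.5 * squared Euclidean norm.
    """
    d = Z.shape[1]
    return -0.5 * (np.sum(Z**2, axis=1) + d * np.log(2 * np.pi))

# Synthetic correlated features as a stand-in for real CLIP embeddings.
rng = np.random.default_rng(0)
X = rng.normal(size=(2000, 8)) @ rng.normal(size=(8, 8)) + 3.0
mu, W = fit_whitening(X)
scores = log_likelihood(whiten(X, mu, W))
```

Because `mu` and `W` are computed once, scoring a new embedding costs one matrix-vector product and a norm, which is consistent with the abstract's remark that the procedure is fast.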

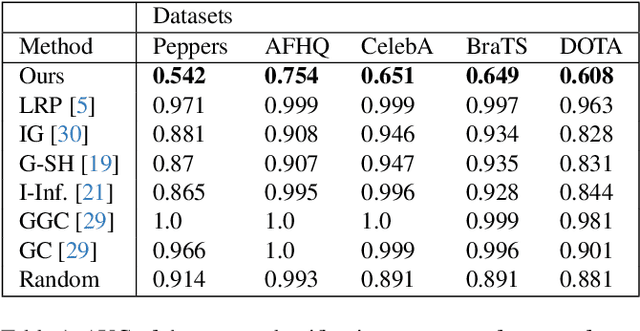

Manifold Induced Biases for Zero-shot and Few-shot Detection of Generated Images

Apr 21, 2025

Abstract: Distinguishing between real and AI-generated images, commonly referred to as 'image detection', presents a timely and significant challenge. Despite extensive research in the (semi-)supervised regime, zero-shot and few-shot solutions have only recently emerged as promising alternatives. Their main advantage is in alleviating the ongoing data maintenance, which quickly becomes outdated due to advances in generative technologies. We identify two main gaps: (1) a lack of theoretical grounding for the methods, and (2) significant room for performance improvements in zero-shot and few-shot regimes. Our approach is founded on understanding and quantifying the biases inherent in generated content, where we use these quantities as criteria for characterizing generated images. Specifically, we explore the biases of the implicit probability manifold, captured by a pre-trained diffusion model. Through score-function analysis, we approximate the curvature, gradient, and bias towards points on the probability manifold, establishing criteria for detection in the zero-shot regime. We further extend our contribution to the few-shot setting by employing a mixture-of-experts methodology. Empirical results across 20 generative models demonstrate that our method outperforms current approaches in both zero-shot and few-shot settings. This work advances the theoretical understanding and practical usage of generated content biases through the lens of manifold analysis.

The Double-Ellipsoid Geometry of CLIP

Nov 21, 2024

Abstract: Contrastive Language-Image Pre-Training (CLIP) is highly instrumental in machine learning applications within a large variety of domains. We investigate the geometry of this embedding, which is still not well understood. We examine the raw unnormalized embedding and show that text and image reside on linearly separable ellipsoid shells, not centered at the origin. We explain the benefits of this structure, which allows instances to be embedded better according to their uncertainty during contrastive training. Frequent concepts in the dataset yield more false negatives, inducing greater uncertainty. A new notion of conformity is introduced, which measures the average cosine similarity of an instance to any other instance within a representative data set. We show this measure can be accurately estimated by simply computing the cosine similarity to the modality mean vector. Furthermore, we find that CLIP's modality gap optimizes the matching of the conformity distributions of image and text.
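The conformity measure and its mean-vector estimate can be illustrated with a short sketch. The data below is a synthetic cloud with a non-zero mean, used as a stand-in for one modality's raw CLIP embeddings, and the function names are hypothetical. The demo only checks that the two quantities are strongly correlated on this toy data; it does not reproduce the paper's experiments.

```python
import numpy as np

def unit(X):
    """Normalize each row to unit Euclidean norm."""
    return X / np.linalg.norm(X, axis=1, keepdims=True)

def conformity_exact(X):
    """Average cosine similarity of each instance to all other instances."""
    U = unit(X)
    S = U @ U.T                                   # pairwise cosine similarities
    n = len(X)
    return (S.sum(axis=1) - np.diag(S)) / (n - 1)  # exclude self-similarity

def conformity_estimate(X):
    """Cosine similarity of each instance to the mean vector of the set."""
    m = X.mean(axis=0)
    return unit(X) @ (m / np.linalg.norm(m))

# Synthetic embeddings that are not centered at the origin (assumption).
rng = np.random.default_rng(0)
offset = np.full(32, 4.0 / np.sqrt(32))
X = offset + rng.normal(size=(500, 32))
corr = np.corrcoef(conformity_exact(X), conformity_estimate(X))[0, 1]
```

The exact version costs O(n^2) similarity evaluations, while the estimate needs only one dot product per instance, which is the practical appeal of the mean-vector shortcut.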

Fast and Simple Explainability for Point Cloud Networks

Mar 15, 2024

Abstract: We propose a fast and simple explainable AI (XAI) method for point cloud data. It computes pointwise importance with respect to the downstream task of a trained network. This allows better understanding of the network properties, which is imperative for safety-critical applications. In addition to debugging and visualization, our low computational complexity facilitates online feedback to the network at inference. This can be used to reduce uncertainty and to increase robustness. In this work, we introduce Feature Based Interpretability (FBI), where we compute the features' norm, per point, before the bottleneck. We analyze the use of gradients and post- and pre-bottleneck strategies, showing that pre-bottleneck is preferred in terms of smoothness and ranking. We obtain at least three orders of magnitude speedup compared to current XAI methods, making the method scalable to big point clouds or large-scale architectures. Our approach achieves SOTA results in terms of classification explainability. We demonstrate how the proposed measure is helpful in analyzing and characterizing various aspects of 3D learning, such as rotation invariance, robustness to out-of-distribution (OOD) outliers or domain shift, and dataset bias.
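A per-point feature norm taken before the bottleneck can be sketched in a few lines. The encoder here is a hypothetical single shared linear layer with random weights, standing in for a trained PointNet-style network, and the choice of the L2 norm is an assumption since the abstract does not specify which norm is used.

```python
import numpy as np

def pointwise_importance(features):
    """L2 norm of each point's feature vector; features has shape (N, C)."""
    return np.linalg.norm(features, axis=1)

# Hypothetical stand-in for a trained per-point encoder (random weights).
rng = np.random.default_rng(0)
points = rng.normal(size=(1024, 3))
W = rng.normal(size=(3, 64))
b = rng.normal(size=64)
features = np.maximum(points @ W + b, 0.0)   # (N, 64) pre-bottleneck features

importance = pointwise_importance(features)  # one score per input point
ranking = np.argsort(-importance)            # most important points first
global_feature = features.max(axis=0)        # the max-pool bottleneck itself
```

No gradients or extra forward passes are needed: the scores are read off activations that the network computes anyway, which is one plausible reading of why the method is described as fast.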

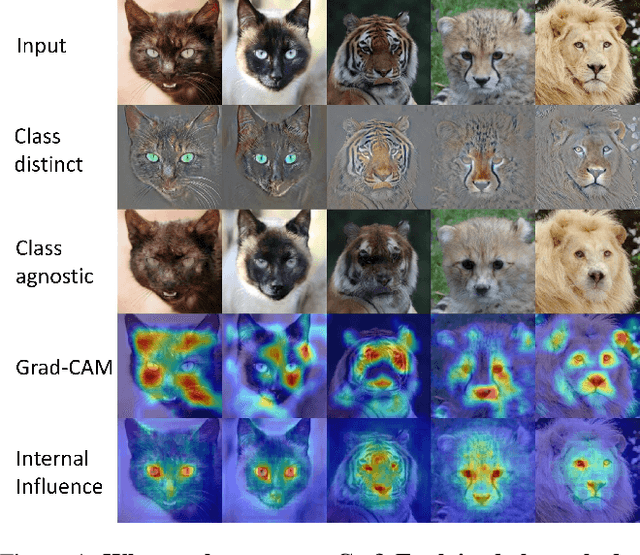

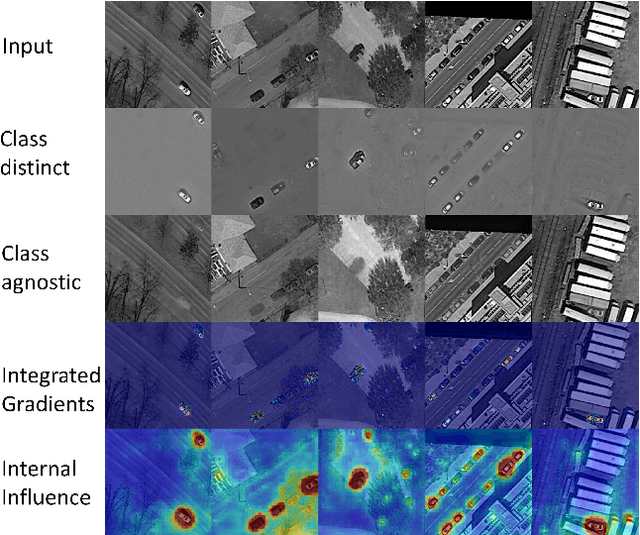

DXAI: Explaining Classification by Image Decomposition

Dec 30, 2023

Abstract: We propose a new way to explain and to visualize neural network classification through a decomposition-based explainable AI (DXAI). Instead of providing an explanation heatmap, our method yields a decomposition of the image into class-agnostic and class-distinct parts, with respect to the data and chosen classifier. Following a fundamental signal processing paradigm of analysis and synthesis, the original image is the sum of the decomposed parts. We thus obtain a radically different way of explaining classification. The class-agnostic part is ideally composed of all image features which do not possess class information, while the class-distinct part is its complement. This new visualization can be more helpful and informative in certain scenarios, especially when the attributes are dense, global and additive in nature, for instance, when colors or textures are essential for class distinction. Code is available at https://github.com/dxai2024/dxai.

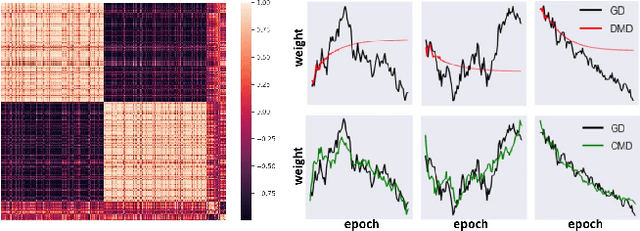

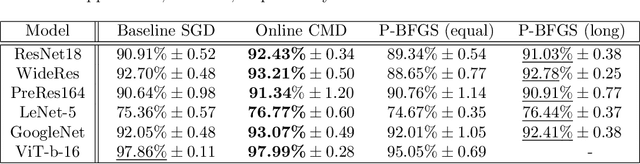

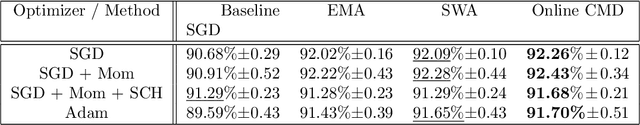

Enhancing Neural Training via a Correlated Dynamics Model

Dec 20, 2023

Abstract: As neural networks grow in scale, their training becomes both computationally demanding and rich in dynamics. Amidst the flourishing interest in these training dynamics, we present a novel observation: Parameters during training exhibit intrinsic correlations over time. Capitalizing on this, we introduce Correlation Mode Decomposition (CMD). This algorithm clusters the parameter space into groups, termed modes, that display synchronized behavior across epochs. This enables CMD to efficiently represent the training dynamics of complex networks, like ResNets and Transformers, using only a few modes. Moreover, test set generalization is enhanced. We introduce an efficient CMD variant, designed to run concurrently with training. Our experiments indicate that CMD surpasses the state-of-the-art method for compactly modeled dynamics on image classification. Our modeling can improve training efficiency and lower communication overhead, as shown by our preliminary experiments in the context of federated learning.

Critical Points ++: An Agile Point Cloud Importance Measure for Robust Classification, Adversarial Defense and Explainable AI

Aug 21, 2023

Abstract: The ability to cope accurately and quickly with Out-Of-Distribution (OOD) samples is crucial in real-world safety-demanding applications. In this work we first study the interplay between critical points of 3D point clouds and OOD samples. Our findings are that common corruptions and outliers are often interpreted as critical points. We generalize the notion of critical points into importance measures. We show that training a classification network based only on less important points dramatically improves robustness, at a cost of minor performance loss on the clean set. We observe that normalized entropy is highly informative for corruption analysis. An adaptive threshold based on normalized entropy is suggested for selecting the set of uncritical points. Our proposed importance measure is extremely fast to compute. We show it can be used for a variety of applications, such as Explainable AI (XAI), Outlier Removal, Uncertainty Estimation, Robust Classification and Adversarial Defense. We reach SOTA results on the two latter tasks. Code is available at: https://github.com/yossilevii100/critical_points2

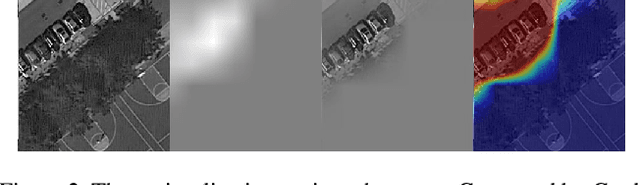

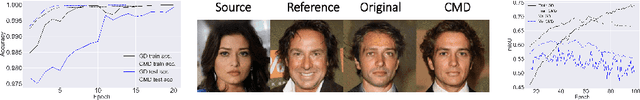

Additive Class Distinction Maps using Branched-GANs

May 04, 2023

Abstract: We present a new model, training procedure and architecture to create precise maps of distinction between two classes of images. The objective is to comprehend, in pixel-wise resolution, the unique characteristics of a class. These maps can facilitate self-supervised segmentation and object detection in addition to new capabilities in explainable AI (XAI). Our proposed architecture is based on image decomposition, where the output is the sum of multiple generative networks (branched-GANs). The distinction between classes is isolated in a dedicated branch. This approach allows clear, precise and interpretable visualization of the unique characteristics of each class. We show how our generic method can be used in several modalities for various tasks, such as MRI brain tumor extraction, isolating cars in aerial photography and obtaining feminine and masculine face features. This is a preliminary report of our initial findings and results.

EPiC: Ensemble of Partial Point Clouds for Robust Classification

Mar 20, 2023

Abstract: Robust point cloud classification is crucial for real-world applications, as consumer-type 3D sensors often yield partial and noisy data, degraded by various artifacts. In this work we propose a general ensemble framework, based on partial point cloud sampling. Each ensemble member is exposed to only partial input data. Three sampling strategies are used jointly: two local ones, based on patches and curves, and a global one of random sampling. We demonstrate the robustness of our method to various local and global degradations. We show that our framework improves the robustness of top classification networks by a large margin. Our experimental setting uses the recently introduced ModelNet-C database by Ren et al. [24], where we reach SOTA both on unaugmented and on augmented data. Our unaugmented mean Corruption Error (mCE) is 0.64 (current SOTA is 0.86) and 0.50 for augmented data (current SOTA is 0.57). We analyze and explain these remarkable results through diversity analysis.
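The partial-sampling ensemble idea can be sketched as below. Only the random and patch strategies are shown; the curve-based strategy is omitted because the abstract does not describe it. The nearest-neighbor patch construction, the probability averaging, the function names, and the toy classifier are all assumptions made for illustration rather than the paper's implementation.

```python
import numpy as np

def random_subset(points, m, rng):
    """Global strategy: m points drawn uniformly without replacement."""
    idx = rng.choice(len(points), size=m, replace=False)
    return points[idx]

def patch_subset(points, m, rng):
    """Local strategy: the m nearest neighbors of a randomly chosen anchor."""
    anchor = points[rng.integers(len(points))]
    d2 = np.sum((points - anchor) ** 2, axis=1)
    return points[np.argsort(d2)[:m]]

def ensemble_predict(points, classify, samplers, m, rng):
    """Average class probabilities over members that each see a partial cloud."""
    probs = [classify(sample(points, m, rng)) for sample in samplers]
    return np.mean(probs, axis=0)

def toy_classify(pts):
    """Hypothetical 3-class classifier: softmax over per-axis coordinate means."""
    logits = pts.mean(axis=0)
    e = np.exp(logits - logits.max())
    return e / e.sum()

rng = np.random.default_rng(0)
cloud = rng.normal(size=(1024, 3))
samplers = [random_subset, patch_subset, patch_subset, random_subset]
probs = ensemble_predict(cloud, toy_classify, samplers, m=256, rng=rng)
```

Since each member sees a different part of the cloud, a local corruption affects only some of the members, which is one intuition for the robustness the abstract reports.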
