Guido C. H. E. de Croon

Depth Transfer: Learning to See Like a Simulator for Real-World Drone Navigation

May 18, 2025

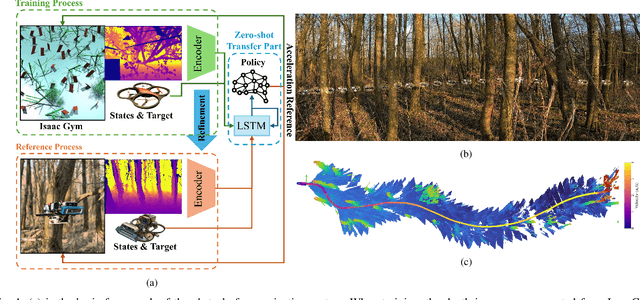

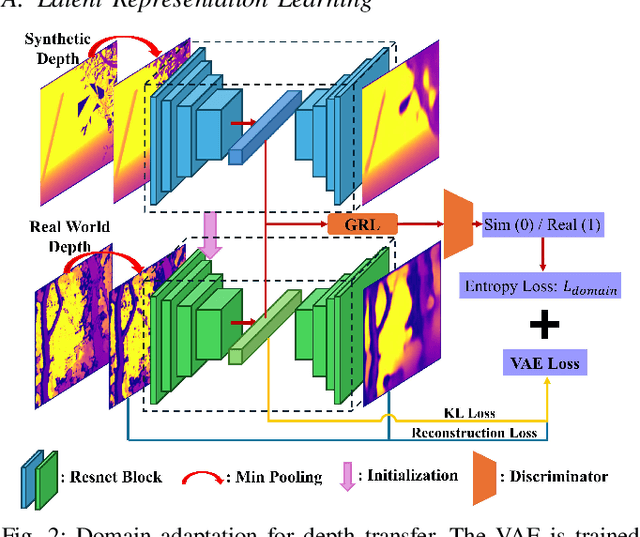

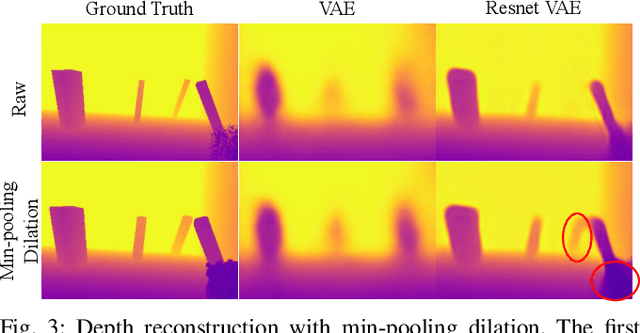

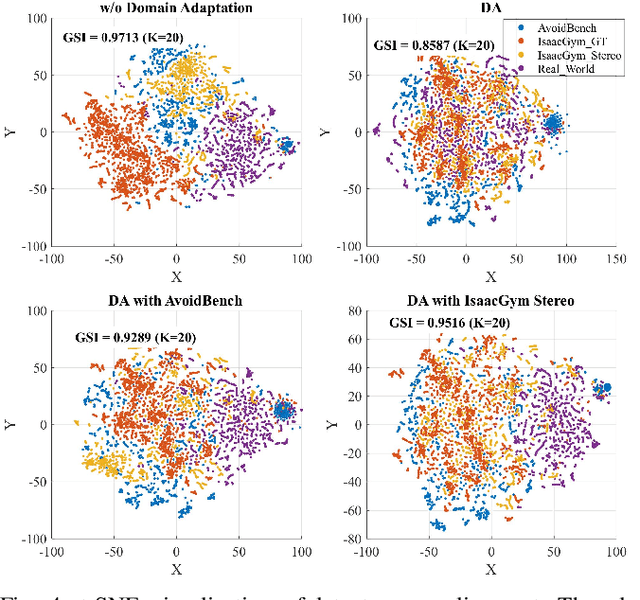

Abstract: Sim-to-real transfer is a fundamental challenge in robot reinforcement learning. Discrepancies between simulation and reality can significantly impair policy performance, especially when the policy receives high-dimensional inputs such as dense depth estimates from vision. We propose a novel depth transfer method based on domain adaptation to bridge the visual gap between simulated and real-world depth data. A Variational Autoencoder (VAE) is first trained to encode ground-truth depth images from simulation into a latent space, which serves as input to a reinforcement learning (RL) policy. During deployment, the encoder is refined to align stereo depth images with this latent space, enabling direct policy transfer without fine-tuning. We apply our method to the task of autonomous drone navigation through cluttered environments. Experiments in IsaacGym show that our method nearly doubles the obstacle avoidance success rate when switching from ground-truth to stereo depth input. Furthermore, we demonstrate successful transfer to the photo-realistic simulator AvoidBench using only IsaacGym-generated stereo data, achieving superior performance compared to state-of-the-art baselines. Real-world evaluations in both indoor and outdoor environments confirm the effectiveness of our approach, enabling robust and generalizable depth-based navigation across diverse domains.

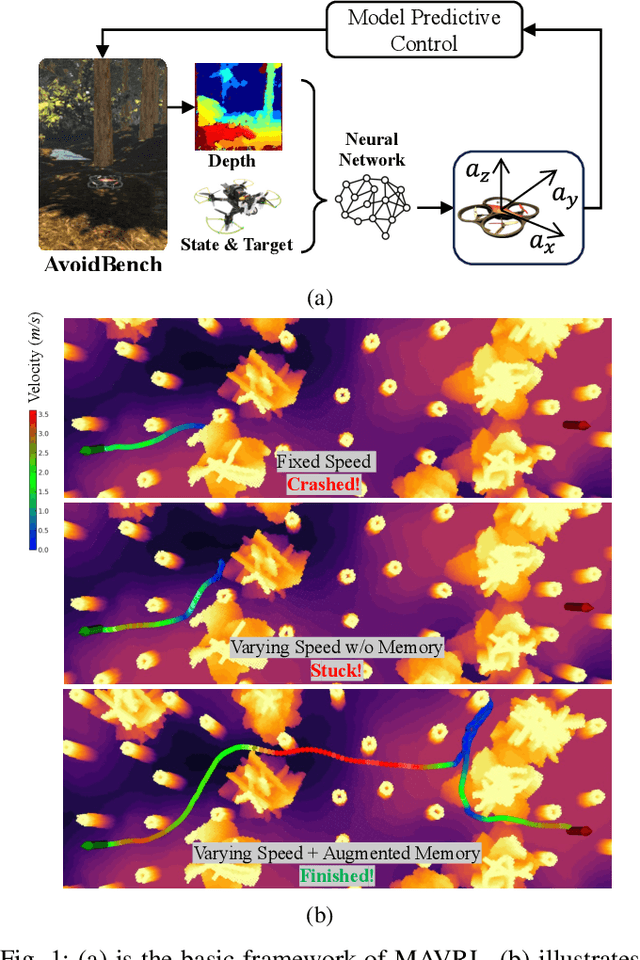

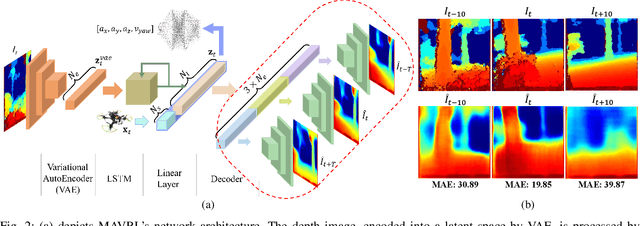

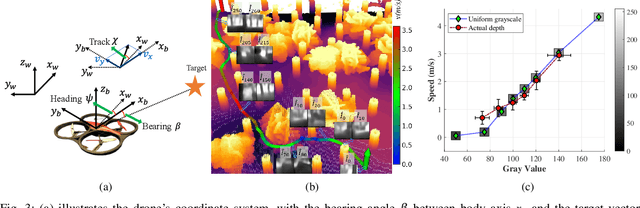

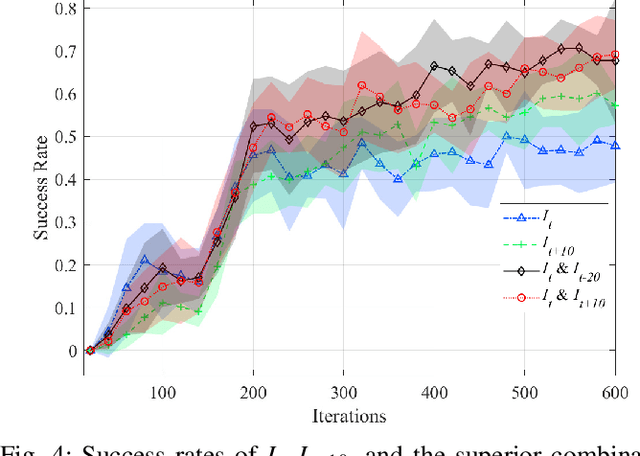

MAVRL: Learn to Fly in Cluttered Environments with Varying Speed

Feb 13, 2024

Abstract: Many existing obstacle avoidance algorithms overlook the crucial balance between safety and agility, especially in environments of varying complexity. In our study, we introduce an obstacle avoidance pipeline based on reinforcement learning. This pipeline enables drones to adapt their flying speed according to the environmental complexity. Moreover, to improve the obstacle avoidance performance in cluttered environments, we propose a novel latent space that is explicitly trained to retain memory of previous depth map observations. Our findings confirm that varying speed leads to a superior balance of success rate and agility in cluttered environments. Additionally, our memory-augmented latent representation outperforms the latent representation commonly used in reinforcement learning. Finally, after minimal fine-tuning, we successfully deployed our network on a real drone for enhanced obstacle avoidance.

AvoidBench: A high-fidelity vision-based obstacle avoidance benchmarking suite for multi-rotors

Jan 18, 2023

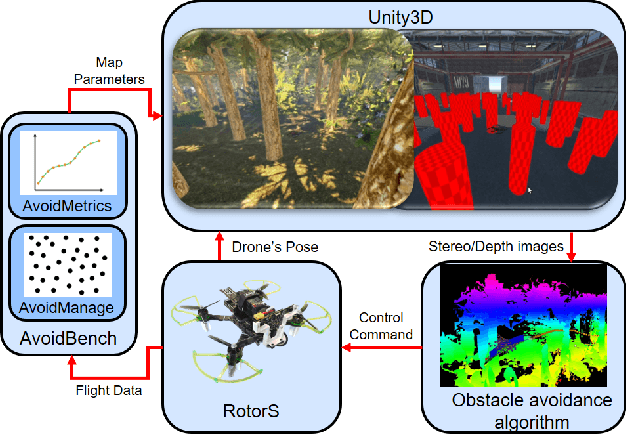

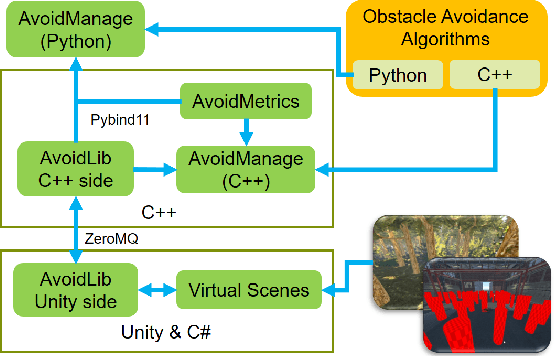

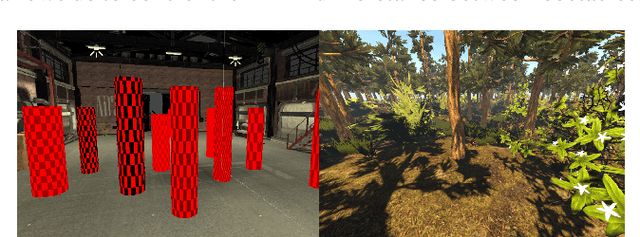

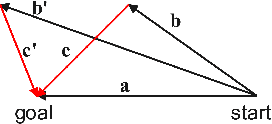

Abstract: Obstacle avoidance is an essential topic in the field of autonomous drone research. When choosing an avoidance algorithm, many different options are available, each with its own advantages and disadvantages. As there is currently no consensus on testing methods, it is quite challenging to compare performance across algorithms. In this paper, we propose AvoidBench, a benchmarking suite which can evaluate the performance of vision-based obstacle avoidance algorithms by subjecting them to a series of tasks. Thanks to the high-fidelity multi-rotor dynamics from RotorS and the virtual scenes of Unity3D, AvoidBench can realize realistic simulated flight experiments. Going beyond current drone simulators, we propose and implement both performance and environment metrics to reveal the suitability of obstacle avoidance algorithms for environments of different complexity. To illustrate AvoidBench's usage, we compare three algorithms: Ego-planner, MBPlanner, and Agile-autonomy. The trends observed are validated with real-world obstacle avoidance experiments.
