Guanzhou Li

Act Better by Timing: A timing-Aware Reinforcement Learning for Autonomous Driving

Jun 19, 2024

Abstract: Coping with intensively interactive scenarios is one of the significant challenges in the development of autonomous driving. Reinforcement learning (RL) offers an ideal solution for such scenarios through its self-evolution mechanism via interaction with the environment. However, common RL lacks sufficient safety mechanisms, so agents often struggle to interact well in highly dynamic environments and may collide in pursuit of short-term rewards. Many existing safe RL methods require environment modeling to generate reliable safety boundaries that constrain agent behavior. Nevertheless, acquiring such safety boundaries is not always feasible in dynamic environments. Inspired by the way drivers act when uncertainty is minimal, this study introduces the concept of action timing to replace explicit safety boundary modeling. We define the "actor" as an agent that decides the optimal action at each step. By imagining the actor's taking of an opportunity to act as a timing-dependent gradual process, another agent, called the "timing taker", can evaluate the optimal action execution time and relate the optimal timing to each action moment as a dynamic safety factor that constrains the actor's action. In an experiment involving a complex, unsignalized intersection interaction, this framework achieved superior safety performance compared to all benchmark models.

ActorRL: A Novel Distributed Reinforcement Learning for Autonomous Intersection Management

May 05, 2022

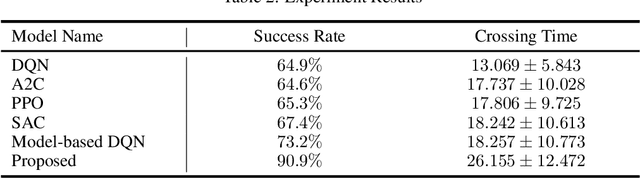

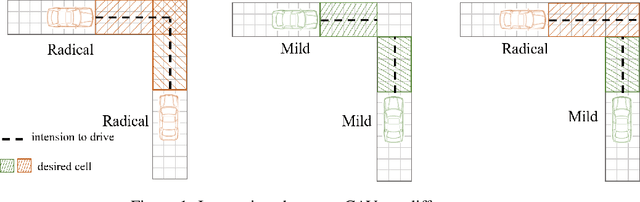

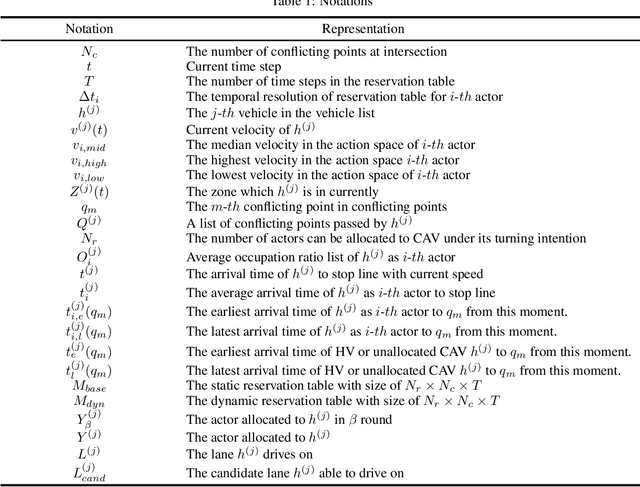

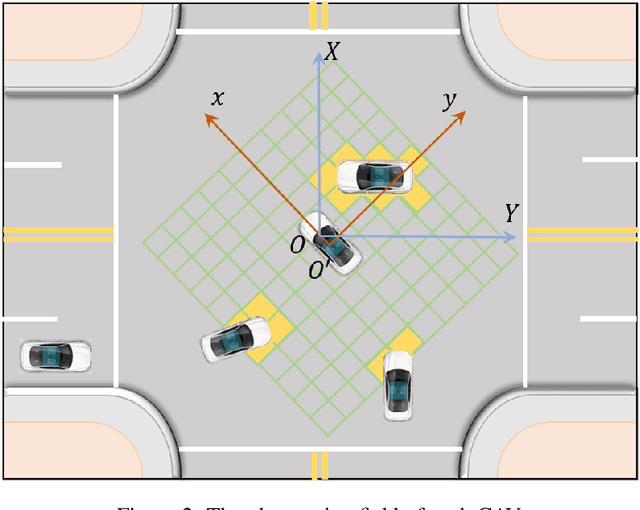

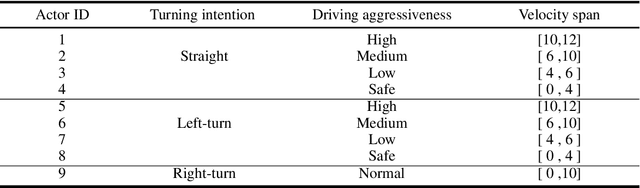

Abstract: As an emerging trend in future transportation, the Connected Autonomous Vehicle (CAV) has the potential to improve traffic capacity and safety at intersections. In autonomous intersection management (AIM), distributed scheduling algorithms formulate the interactions among traffic participants as a multi-agent problem with information exchange and behavioral cooperation. Deep Reinforcement Learning (DRL), an approach that has achieved satisfying performance in many domains, has recently been brought into AIM. To overcome the curse of dimensionality and the instability of multi-agent DRL, we propose a novel DRL framework for the AIM problem, ActorRL, in which an actor allocation mechanism assigns multiple roles with different personalities to CAVs under global observation, including a radical actor, a conservative actor, a safety-first actor, etc. Each actor shares behavioral policies with collective memories from the CAVs it is assigned to, playing the role of "navigator" in AIM. In experiments, we compare the proposed method with several widely used scheduling methods and with distributed DRL without actor allocation; the results show better performance than the benchmarks.

Cyclic Graph Attentive Match Encoder: A Novel Neural Network For OD Estimation

Nov 26, 2021

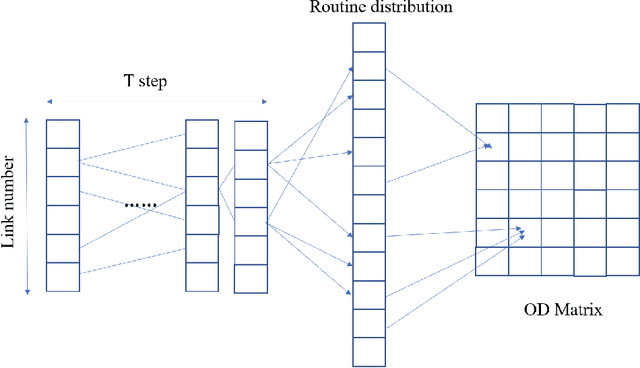

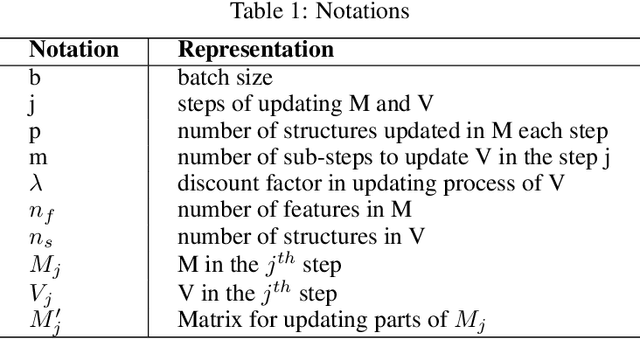

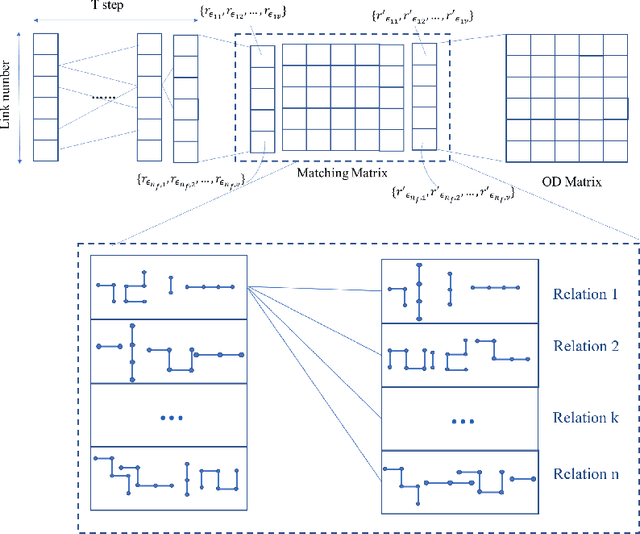

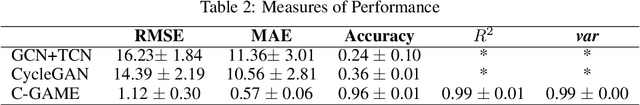

Abstract: Origin-Destination (OD) estimation plays an important role in traffic management and traffic simulation in the era of Intelligent Transportation Systems (ITS). Nevertheless, previous model-based methods face the under-determination challenge and therefore rely heavily on additional assumptions and extra data. Deep learning provides an ideal data-driven method for connecting inputs and results through probabilistic distribution transformation. However, research on applying deep learning to OD estimation remains limited because of the challenges of data transformation across representation spaces, especially from a dynamic spatial-temporal space to a heterogeneous graph in this problem. To address this, we propose the Cyclic Graph Attentive Matching Encoder (C-GAME), based on a novel Graph Matcher with a double-layer attention mechanism. It realizes effective information exchange in the underlying feature space and establishes a coupling relationship across spaces. The proposed model achieves state-of-the-art results in experiments and offers a novel framework for cross-space inference tasks in future applications.
