ActorRL: A Novel Distributed Reinforcement Learning for Autonomous Intersection Management

May 05, 2022

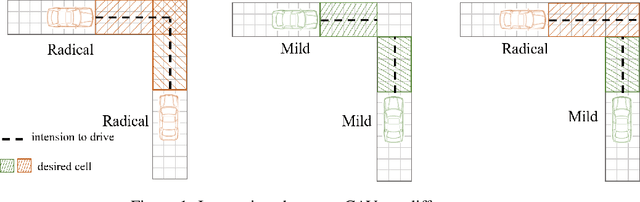

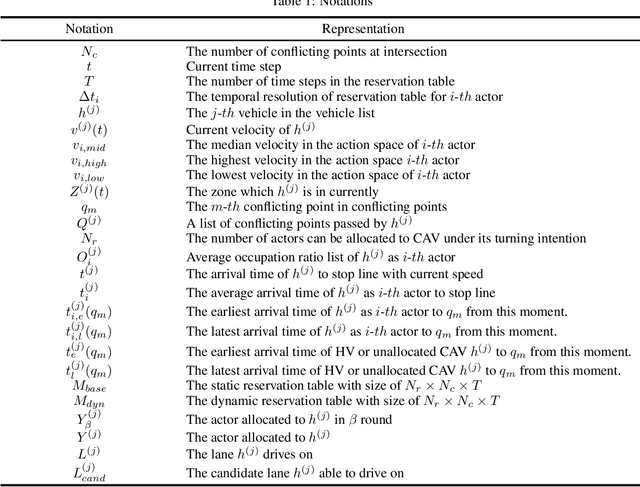

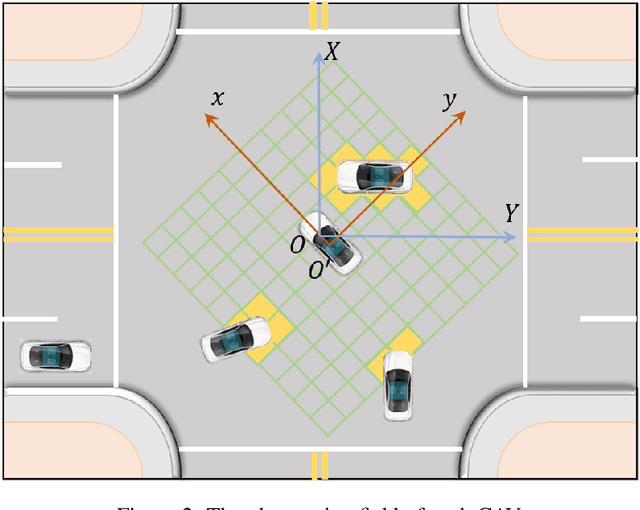

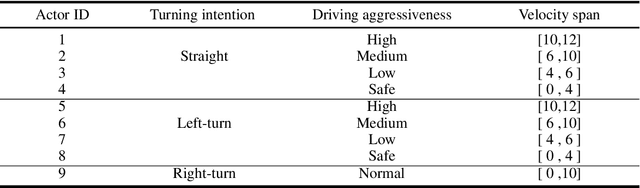

As an emerging trend in future transportation, Connected Autonomous Vehicles (CAVs) have the potential to improve traffic capacity and safety at intersections. In autonomous intersection management (AIM), distributed scheduling algorithms formulate the interactions among traffic participants as a multi-agent problem with information exchange and behavioral cooperation. Deep Reinforcement Learning (DRL), which has achieved satisfactory performance in many domains, has recently been applied to AIM. To overcome the curse of dimensionality and the instability of multi-agent DRL, we propose ActorRL, a novel DRL framework for the AIM problem. In ActorRL, an actor allocation mechanism uses global observation to assign CAVs to multiple actors with different personalities, such as a radical actor, a conservative actor, and a safety-first actor. Each actor provides a behavioral policy shared by the CAVs assigned to it, learned from those CAVs' collective memories, and acts as a "navigator" in AIM. In experiments, we compare the proposed method with several widely used scheduling methods and with distributed DRL without actor allocation; the results show that ActorRL outperforms these benchmarks.
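The abstract does not specify how CAVs are allocated to actors or how the collective memories are organized. The Python sketch below illustrates the general idea under stated assumptions: only the personality names (radical, conservative, safety-first) come from the abstract. The linear reward weights, the gap thresholds in `allocate`, and every class and function name are hypothetical illustrations, not the authors' implementation. Policy networks and training are omitted; only the allocation step and the per-actor pooled memory are shown.

```python
import random
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Actor:
    """A 'personality' whose behavioral policy is shared by every CAV assigned to it."""
    name: str
    speed_weight: float   # hypothetical: reward for progress through the intersection
    safety_weight: float  # hypothetical: reward for keeping a gap to conflicting vehicles
    # Collective memory: transitions pooled from all CAVs this actor has navigated.
    memory: deque = field(default_factory=lambda: deque(maxlen=10_000))

    def reward(self, speed: float, gap: float) -> float:
        # Personality-specific reward shaping (assumed linear form).
        return self.speed_weight * speed + self.safety_weight * gap

    def remember(self, transition: tuple) -> None:
        self.memory.append(transition)

    def sample(self, batch_size: int) -> list:
        # Minibatch drawn from the shared memory for a policy update.
        return random.sample(list(self.memory), min(batch_size, len(self.memory)))


def allocate(gaps: dict, actors: dict) -> dict:
    """Assign each CAV to an actor from a global view of the intersection.

    Hypothetical rule: the gap (in metres) to the nearest conflicting vehicle
    decides how aggressive the assigned personality may be.
    """
    assignment = {}
    for cav_id, gap in gaps.items():
        if gap >= 30.0:
            assignment[cav_id] = actors["radical"]
        elif gap >= 10.0:
            assignment[cav_id] = actors["conservative"]
        else:
            assignment[cav_id] = actors["safety_first"]
    return assignment


actors = {
    "radical": Actor("radical", speed_weight=1.0, safety_weight=0.1),
    "conservative": Actor("conservative", speed_weight=0.5, safety_weight=0.5),
    "safety_first": Actor("safety_first", speed_weight=0.1, safety_weight=1.0),
}

# Global observation (made-up values): gap to the nearest conflicting vehicle per CAV.
gaps = {"cav_0": 45.0, "cav_1": 12.0, "cav_2": 4.0, "cav_3": 50.0}
assignment = allocate(gaps, actors)

# Each CAV writes its transition into its actor's collective memory.
for cav_id, actor in assignment.items():
    speed = 10.0  # placeholder observation
    r = actor.reward(speed, gaps[cav_id])
    actor.remember((cav_id, speed, gaps[cav_id], r))

print({cav: a.name for cav, a in assignment.items()})
print(len(actors["radical"].memory))  # → 2
```

Because every CAV assigned to an actor writes into the same memory, the number of policies to train in this sketch scales with the number of personalities rather than with the number of vehicles.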
