Giuseppe Patanè

3D Skin Segmentation Methods in Medical Imaging: A Comparison

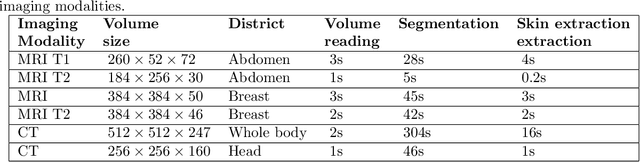

Jun 13, 2025

Abstract:Automatic segmentation of anatomical structures is critical in medical image analysis, aiding diagnostics and treatment planning. Skin segmentation plays a key role in registering and visualising multimodal imaging data. 3D skin segmentation enables applications in personalised medicine, surgical planning, and remote monitoring, offering realistic patient models for treatment simulation, procedural visualisation, and continuous condition tracking. This paper analyses and compares algorithmic and AI-driven skin segmentation approaches, emphasising key factors to consider when selecting a strategy based on data availability and application requirements. We evaluate an iterative region-growing algorithm and the TotalSegmentator, a deep learning-based approach, across different imaging modalities and anatomical regions. Our tests show that AI segmentation excels in automation but struggles with MRI due to its CT-based training, while the graphics-based method performs better for MRIs but introduces more noise. AI-driven segmentation also automates patient bed removal in CT, whereas the graphics-based method requires manual intervention.
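To make the region-growing side of this comparison concrete, the following is a minimal sketch of generic seeded region growing on a 3D volume, assuming 6-connectivity and a fixed intensity window. The function name `region_grow_3d`, the thresholds, and the toy volume are illustrative assumptions; the abstract does not state how the paper's iterative algorithm chooses seeds, thresholds, or iterations.

```python
from collections import deque

def region_grow_3d(volume, seed, low, high):
    """Collect voxels 6-connected to `seed` with intensity in [low, high].

    `volume` is a nested list indexed as volume[z][y][x] (an assumed layout).
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    sz, sy, sx = seed
    if not (low <= volume[sz][sy][sx] <= high):
        return set()  # the seed itself is outside the intensity window
    region = {seed}
    queue = deque([seed])
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in offsets:
            n = (z + dz, y + dy, x + dx)
            if n in region:
                continue
            if not (0 <= n[0] < nz and 0 <= n[1] < ny and 0 <= n[2] < nx):
                continue  # neighbour falls outside the volume
            if low <= volume[n[0]][n[1]][n[2]] <= high:
                region.add(n)
                queue.append(n)
    return region

# Toy 2x3x3 volume: one connected bright blob plus an isolated bright voxel.
toy_volume = [
    [[0, 0, 0], [0, 100, 100], [0, 100, 0]],
    [[0, 0, 0], [0, 100, 0], [0, 0, 100]],
]
toy_region = region_grow_3d(toy_volume, seed=(0, 1, 1), low=50, high=150)
```

In this toy case the isolated voxel at `(1, 2, 2)` is excluded because it has no face-adjacent neighbour in the region. A fixed intensity window like this one also admits any connected voxels that happen to fall in range, which is one plausible source of the noise the abstract attributes to the graphics-based method.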

Framework of a multiscale data-driven digital twin of the muscle-skeletal system

Jun 13, 2025

Abstract:Musculoskeletal disorders (MSDs) are a leading cause of disability worldwide, requiring advanced diagnostic and therapeutic tools for personalised assessment and treatment. Effective management of MSDs involves the interaction of heterogeneous data sources, making the Digital Twin (DT) paradigm a valuable option. This paper introduces the Musculoskeletal Digital Twin (MS-DT), a novel framework that integrates multiscale biomechanical data with computational modelling to create a detailed, patient-specific representation of the musculoskeletal system. By combining motion capture, ultrasound imaging, electromyography, and medical imaging, the MS-DT enables the analysis of spinal kinematics, posture, and muscle function. An interactive visualisation platform provides clinicians and researchers with an intuitive interface for exploring biomechanical parameters and tracking patient-specific changes. Results demonstrate the effectiveness of MS-DT in extracting precise kinematic and dynamic tissue features, offering a comprehensive tool for monitoring spine biomechanics and rehabilitation. This framework provides high-fidelity modelling and real-time visualisation to improve patient-specific diagnosis and intervention planning.

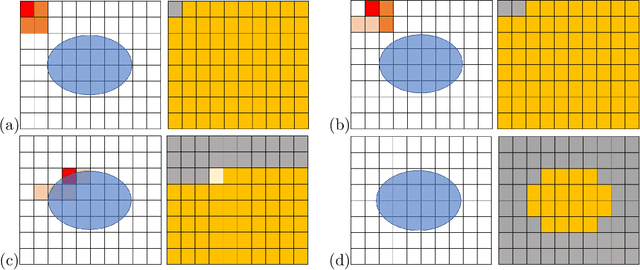

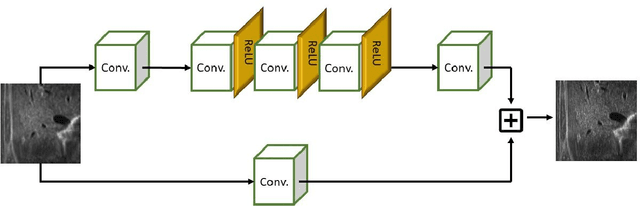

Optimal Density Functions for Weighted Convolution in Learning Models

May 30, 2025

Abstract:The paper introduces the weighted convolution, a novel approach to the convolution for signals defined on regular grids (e.g., 2D images) through the application of an optimal density function to scale the contribution of neighbouring pixels based on their distance from the central pixel. This choice differs from the traditional uniform convolution, which treats all neighbouring pixels equally. Our weighted convolution can be applied to convolutional neural network problems to improve the approximation accuracy. Given a convolutional network, we define a framework to compute the optimal density function through a minimisation model. The framework separates the optimisation of the convolutional kernel weights (using stochastic gradient descent) from the optimisation of the density function (using DIRECT-L). Experimental results on a learning model for an image-to-image task (e.g., image denoising) show that the weighted convolution significantly reduces the loss (up to 53% improvement) and increases the test accuracy compared to standard convolution. While this method increases execution time by 11%, it is robust across several hyperparameters of the learning model. Future work will apply the weighted convolution to real-case 2D and 3D image convolutional learning problems.
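The idea of scaling each neighbour's contribution by a function of its distance from the central pixel can be sketched as follows. This is an illustrative pure-Python version, not the authors' implementation: the names `density_weights` and `weighted_conv2d`, the use of Euclidean distance, and the example density are assumptions, and the optimisation of the density function (DIRECT-L in the abstract) is not shown.

```python
import math

def density_weights(size, density):
    """Evaluate `density` at each kernel cell's distance from the centre."""
    c = size // 2
    return [[density(math.hypot(i - c, j - c)) for j in range(size)]
            for i in range(size)]

def weighted_conv2d(image, kernel, density):
    """'Valid' 2D convolution (CNN-style, no kernel flip) with distance weights."""
    k = len(kernel)
    phi = density_weights(k, density)
    # Pre-multiply the kernel once so the inner loop matches a standard convolution.
    scaled = [[kernel[i][j] * phi[i][j] for j in range(k)] for i in range(k)]
    h, w = len(image), len(image[0])
    out = []
    for r in range(h - k + 1):
        row = []
        for c in range(w - k + 1):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    acc += scaled[i][j] * image[r + i][c + j]
            row.append(acc)
        out.append(row)
    return out

ones = [[1.0] * 3 for _ in range(3)]
# A constant density of 1 recovers the standard (uniform) convolution.
uniform = weighted_conv2d(ones, ones, lambda d: 1.0)
# Assumed example density: full weight at the centre, half weight elsewhere.
damped = weighted_conv2d(ones, ones, lambda d: 1.0 if d == 0 else 0.5)
```

Because the density depends only on position within the kernel, it can be folded into the kernel before the sliding-window loop. The number of trainable kernel weights is unchanged.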

Optimal Weighted Convolution for Classification and Denoising

May 30, 2025

Abstract:We introduce a novel weighted convolution operator that enhances traditional convolutional neural networks (CNNs) by integrating a spatial density function into the convolution operator. This extension enables the network to differentially weight neighbouring pixels based on their relative position to the reference pixel, improving spatial characterisation and feature extraction. The proposed operator maintains the same number of trainable parameters and is fully compatible with existing CNN architectures. Although developed for 2D image data, the framework is generalisable to signals on regular grids of arbitrary dimensions, such as 3D volumetric data or 1D time series. We propose an efficient implementation of the weighted convolution by pre-computing the density function and achieving execution times comparable to standard convolution layers. We evaluate our method on two deep learning tasks: image classification using the CIFAR-100 dataset [KH+09] and image denoising using the DIV2K dataset [AT17]. Experimental results with state-of-the-art classification (e.g., VGG [SZ15], ResNet [HZRS16]) and denoising (e.g., DnCNN [ZZC+17], NAFNet [CCZS22]) methods show that the weighted convolution improves performance with respect to standard convolution across different quantitative metrics. For example, VGG achieves an accuracy of 66.94% with weighted convolution versus 56.89% with standard convolution on the classification problem, while DnCNN improves the PSNR value from 20.17 to 22.63 on the denoising problem. All models were trained on the CINECA Leonardo cluster to reduce the execution time and improve the tuning of the density function values. The PyTorch implementation of the weighted convolution is publicly available at: https://github.com/cammarasana123/weightedConvolution2.0.

Learning-Based and Quality Preserving Super-Resolution of Noisy Images

Nov 03, 2023

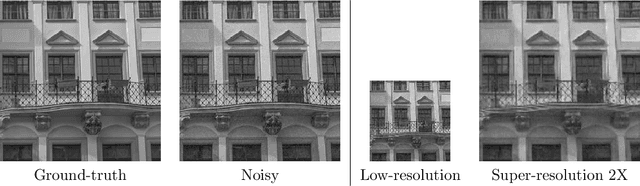

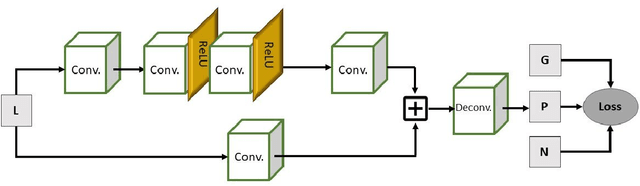

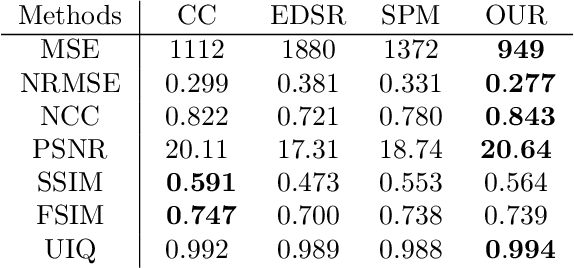

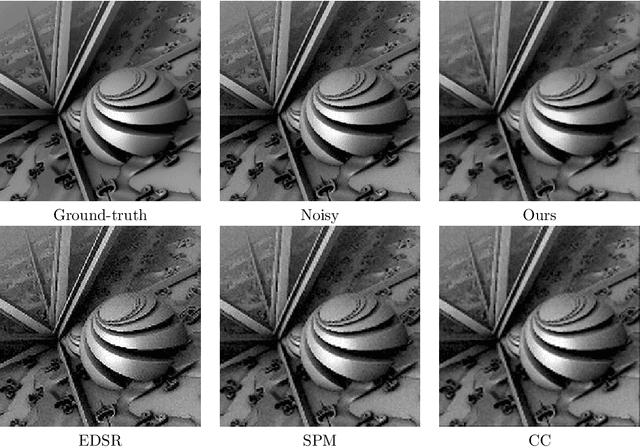

Abstract:Several applications require the super-resolution of noisy images and the preservation of geometrical and texture features. State-of-the-art super-resolution methods do not account for noise and generally enhance the output image's artefacts (e.g., aliasing, blurring). We propose a learning-based method that accounts for the presence of noise and preserves the properties of the input image, as measured by quantitative metrics (e.g., normalised cross-correlation, normalised mean squared error, peak signal-to-noise ratio, structural similarity, feature-based similarity, universal image quality). We train our network to up-sample a low-resolution noisy image while preserving its properties. We perform our tests on the Cineca Marconi100 cluster, at the 26th position in the TOP500 list. The experimental results show that our method outperforms learning-based methods, has comparable results with standard methods, preserves properties of the input image such as contours, brightness, and textures, and reduces the artefacts. As average quantitative metrics, our method has a PSNR value of 23.81 on the super-resolution of Gaussian noise images with a 2X up-sampling factor. In contrast, previous work has a PSNR value of 23.09 (standard method) and 21.78 (learning-based method). Our learning-based and quality-preserving super-resolution improves the high-resolution prediction of noisy images with respect to state-of-the-art methods with different noise types and up-sampling factors.
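For readers unfamiliar with the PSNR values quoted above, the sketch below computes the standard peak signal-to-noise ratio in decibels, PSNR = 10 log10(peak² / MSE). The function name `psnr`, the nested-list image layout, and the default peak of 255 (8-bit images) are assumptions for illustration; the abstract does not state the peak value or averaging protocol used in the paper.

```python
import math

def psnr(reference, estimate, peak=255.0):
    """Standard PSNR in dB between two equally sized 2D images (nested lists)."""
    ref = [v for row in reference for v in row]
    est = [v for row in estimate for v in row]
    mse = sum((a - b) ** 2 for a, b in zip(ref, est)) / len(ref)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(peak ** 2 / mse)

black = [[0, 0], [0, 0]]
white = [[255, 255], [255, 255]]
worst_case = psnr(black, white)   # MSE equals peak^2, so PSNR is 0 dB
identical = psnr(black, black)    # zero error
```

Higher values indicate a closer match to the reference, and because the scale is logarithmic, a gain of under 1 dB such as 23.09 to 23.81 still corresponds to a lower mean squared error.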

HPC-based Solvers of Minimisation Problems for Signal Processing

Nov 03, 2023

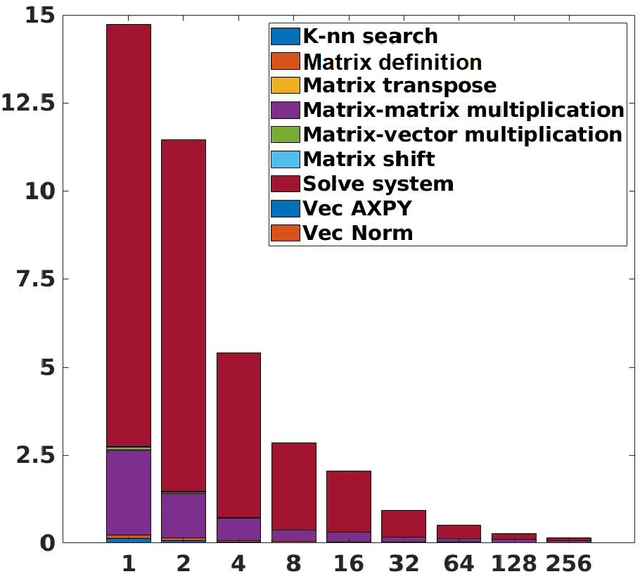

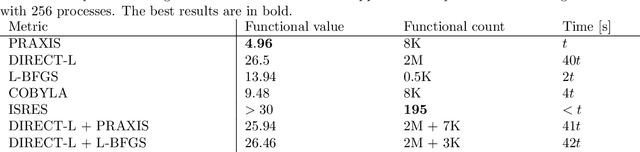

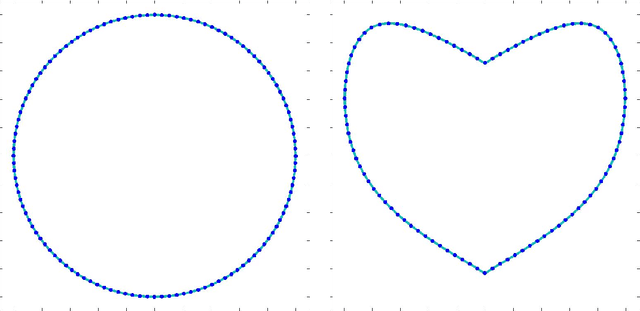

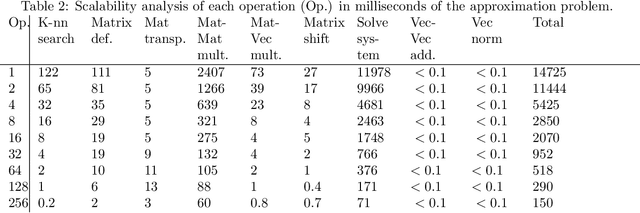

Abstract:Several physics and engineering applications involve the solution of a minimisation problem to compute an approximation of the input signal. Modern computing hardware and software apply high-performance computing to solve these problems and considerably reduce the execution time. We compare and analyse different minimisation methods in terms of functional computation, convergence, execution time, and scalability properties, for the solution of two minimisation problems (i.e., approximation and denoising) with different constraints that involve computationally expensive operations. These problems are attractive due to their numerical and analytical properties, and our general analysis can be extended to most signal-processing problems. We perform our tests on the Cineca Marconi100 cluster, at the 26th position in the TOP500 list. Our experimental results show that PRAXIS is the best optimiser in terms of minima computation: the efficiency of the approximation is 38% with 256 processes, while the denoising has 46% with 32 processes.
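The abstract does not define "efficiency". If it refers to the usual strong-scaling parallel efficiency (an assumption), then with $T_1$ the single-process execution time and $T_p$ the time on $p$ processes:

$$
S(p) = \frac{T_1}{T_p}, \qquad E(p) = \frac{S(p)}{p} = \frac{T_1}{p\,T_p}.
$$

Under that reading, the reported figures would correspond to speed-ups of roughly

$$
S(256) \approx 0.38 \times 256 \approx 97, \qquad S(32) \approx 0.46 \times 32 \approx 14.7 .
$$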

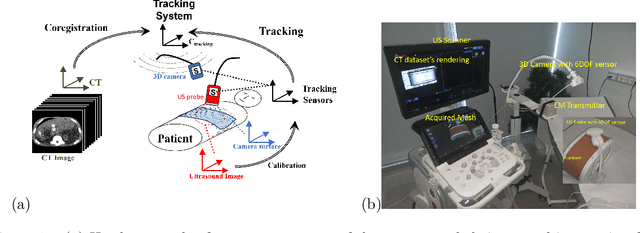

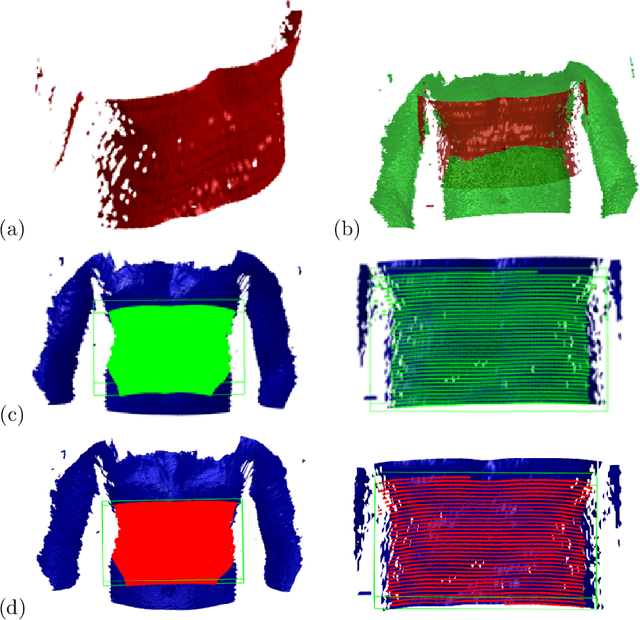

US & MR Image-Fusion Based on Skin Co-Registration

Jul 26, 2023

Abstract:The study and development of innovative solutions for the advanced visualisation, representation and analysis of medical images offer different research directions. Current practice in medical imaging consists in combining real-time US with imaging modalities that allow internal anatomy acquisitions, such as CT, MRI, PET or similar. Image-fusion approaches are applied, for example, to track surgical tools and needles in real time during interventions. This work therefore proposes a fusion imaging system for the registration of CT and MRI images with real-time US acquisition, leveraging a 3D camera sensor. The main focus of the work is the portability of the system and its applicability to different anatomical districts.

3D Patient-specific Modelling and Characterisation of Muscle-Skeletal Districts

Apr 18, 2023

Abstract:This work addresses the patient-specific characterisation of the morphology and pathologies of muscle-skeletal districts (e.g., wrist, spine) to support diagnostic activities and follow-up exams through the integration of morphological and tissue information. We propose different methods for the integration of morphological information, retrieved from the geometrical analysis of 3D surface models, with tissue information extracted from volume images. For the qualitative and quantitative validation, we discuss the localisation of bone erosion sites on the wrists to monitor rheumatic diseases and the characterisation of the three functional regions of the spinal vertebrae to study the presence of osteoporotic fractures. The proposed approach supports the quantitative and visual evaluation of possible damage, surgery planning, and early diagnosis or follow-up studies. Finally, our analysis is general enough to be applied to different districts.

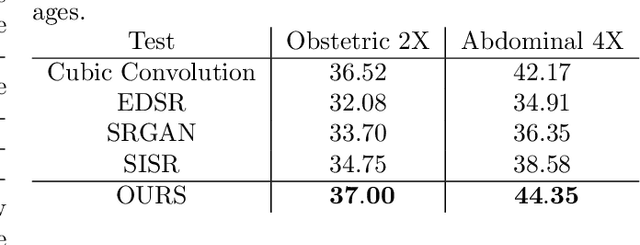

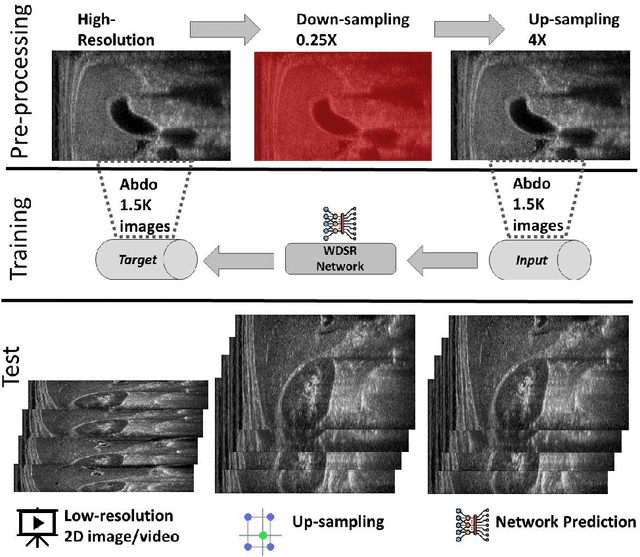

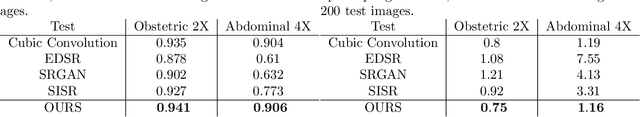

Learning-based Framework for US Signals Super-resolution

Apr 17, 2023

Abstract:We propose a novel deep-learning framework for the super-resolution of ultrasound images and videos in terms of spatial resolution and line reconstruction. We up-sample the acquired low-resolution image through a vision-based interpolation method; then, we train a learning-based model to improve the quality of the up-sampling. We qualitatively and quantitatively test our model on images of different anatomical districts (e.g., cardiac, obstetric) and with different up-sampling resolutions (i.e., 2X, 4X). Our method improves the PSNR median value with respect to SOTA methods by $1.7\%$ on obstetric 2X raw images, $6.1\%$ on cardiac 2X raw images, and $4.4\%$ on abdominal raw 4X images; it also improves the number of pixels with a low prediction error by $9.0\%$ on obstetric 4X raw images, $5.2\%$ on cardiac 4X raw images, and $6.2\%$ on abdominal 4X raw images. The proposed method is then applied to the spatial super-resolution of 2D videos, by optimising the sampling of lines acquired by the probe in terms of the acquisition frequency. Our method specialises trained networks to predict the high-resolution target through the design of the network architecture and the loss function, taking into account the anatomical district and the up-sampling factor and exploiting a large ultrasound data set. The use of deep learning on large data sets overcomes the limitations of vision-based algorithms that are general and do not encode the characteristics of the data. Furthermore, the data set can be enriched with images selected by medical experts to further specialise the individual networks. Through learning and high-performance computing, our super-resolution is specialised to different anatomical districts by training multiple networks. Furthermore, the computational demand is shifted to centralised hardware resources with a real-time execution of the network's prediction on local devices.

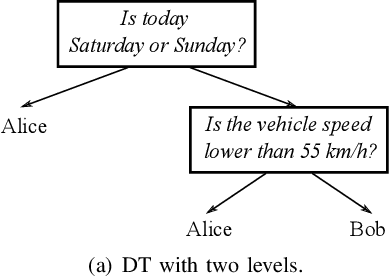

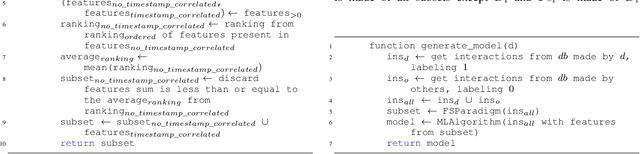

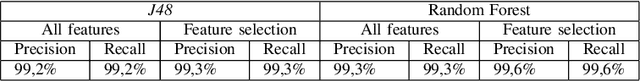

Secure Routine: A Routine-Based Algorithm for Drivers Identification

Dec 12, 2021

Abstract:The introduction of Information and Communication Technology (ICT) in transportation systems leads to several advantages (efficiency of transport, mobility, traffic management). However, it may bring some drawbacks in terms of increasing security challenges, also related to human behaviour. For example, in recent decades most efforts have targeted the characterisation of drivers' behaviour. This paper presents Secure Routine, a paradigm that uses drivers' habits for driver identification and, in particular, to distinguish the vehicle's owner from other drivers. We evaluate Secure Routine in combination with three other existing research works based on machine learning techniques. Results are measured using well-known metrics and show that Secure Routine outperforms the compared works.

* 6 pages, 4 figures, published in VEHICULAR 2020, The Ninth International Conference on Advances in Vehicular Systems, Technologies and Applications
