Gilles Créhange

Learning With Context Feedback Loop for Robust Medical Image Segmentation

Mar 04, 2021

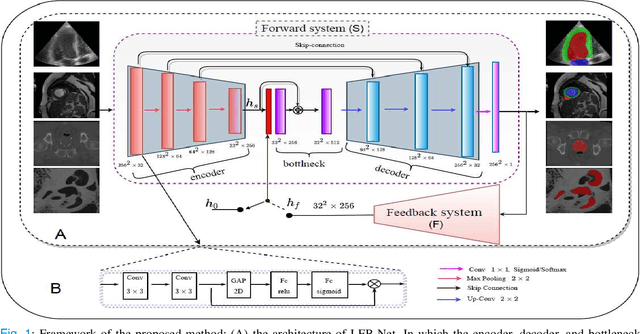

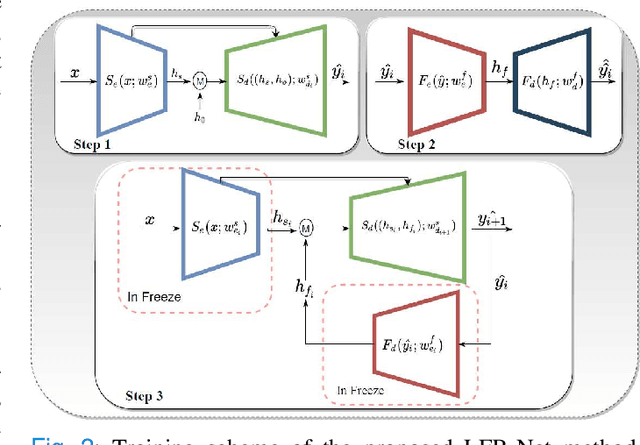

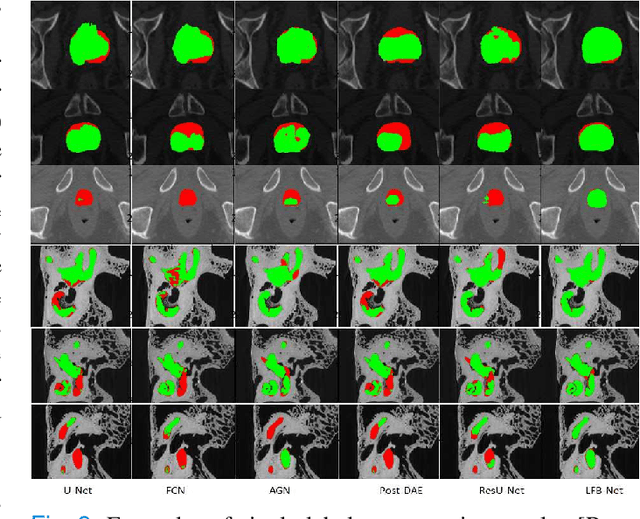

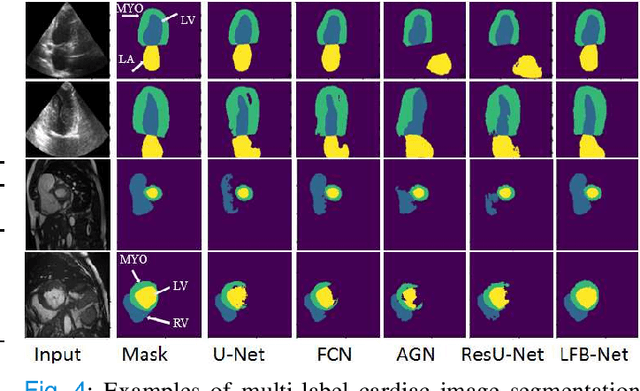

Abstract: Deep learning has successfully been leveraged for medical image segmentation. It employs convolutional neural networks (CNNs) to learn distinctive image features from a defined pixel-wise objective function. However, this approach can lead to weak interdependence among output pixels, producing incomplete and unrealistic segmentation results. In this paper, we present a fully automatic deep learning method for robust medical image segmentation by formulating the segmentation problem as a recurrent framework using two systems. The first one is a forward system of an encoder-decoder CNN that predicts the segmentation result from the input image. The predicted probabilistic output of the forward system is then encoded by a fully convolutional network (FCN)-based context feedback system. The encoded feature space of the FCN is then integrated back into the forward system's feed-forward learning process. Using the FCN-based context feedback loop allows the forward system to learn and extract more high-level image features and fix previous mistakes, thereby improving prediction accuracy over time. Experimental results, performed on four different clinical datasets, demonstrate our method's potential application for single and multi-structure medical image segmentation by outperforming the state-of-the-art methods. With the feedback loop, deep learning methods can now produce results that are both anatomically plausible and robust to low-contrast images. Therefore, formulating image segmentation as a recurrent framework of two interconnected networks via a context feedback loop can be a potential method for robust and efficient medical image analysis.
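The recurrence described in the abstract (forward prediction, encoding of that prediction, and re-injection of the encoding into the forward pass) can be illustrated with a toy sketch. This is not the paper's architecture: `forward_system`, `feedback_system`, and `segment` are hypothetical names, the "networks" are fixed hand-written functions on a 1-D row of pixels, and nothing is learned. It only shows the data flow of a context feedback loop.

```python
import math


def forward_system(image, context):
    """Hypothetical stand-in for the encoder-decoder CNN.

    Maps each pixel intensity, shifted by its context value,
    to a foreground probability with a fixed logistic function.
    """
    return [
        1.0 / (1.0 + math.exp(-(4.0 * (x - 0.5) + c)))
        for x, c in zip(image, context)
    ]


def feedback_system(probs):
    """Hypothetical stand-in for the FCN-based context feedback system.

    Encodes the predicted probabilities as a per-pixel context value
    derived from the mean probability of each pixel's neighbourhood.
    """
    context = []
    for i in range(len(probs)):
        window = probs[max(0, i - 1): i + 2]
        context.append(2.0 * (sum(window) / len(window) - 0.5))
    return context


def segment(image, steps=3):
    """Run the forward pass, then refine it `steps` times via feedback."""
    context = [0.0] * len(image)
    probs = forward_system(image, context)
    for _ in range(steps):
        context = feedback_system(probs)         # encode previous prediction
        probs = forward_system(image, context)   # predict again with context
    return probs


# Toy row of pixels: index 2 is a low-contrast pixel inside a bright region.
image = [0.9, 0.8, 0.45, 0.85, 0.1, 0.05]
first_pass = forward_system(image, [0.0] * len(image))
refined = segment(image)
```

In this toy setting the low-contrast pixel at index 2 falls below 0.5 on the first pass and rises above 0.5 once the context from its neighbours is fed back, which mirrors, in a very simplified way, the idea of correcting earlier mistakes through the loop.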

Automatic Myocardial Infarction Evaluation from Delayed-Enhancement Cardiac MRI using Deep Convolutional Networks

Oct 30, 2020

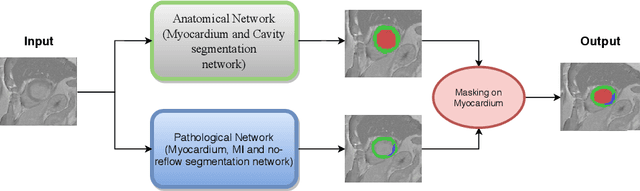

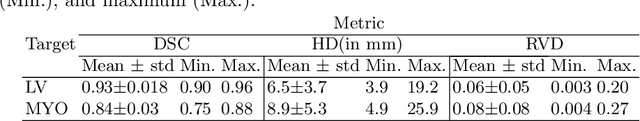

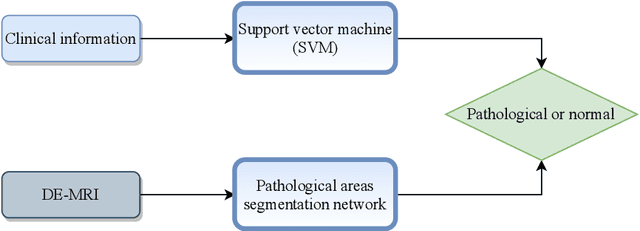

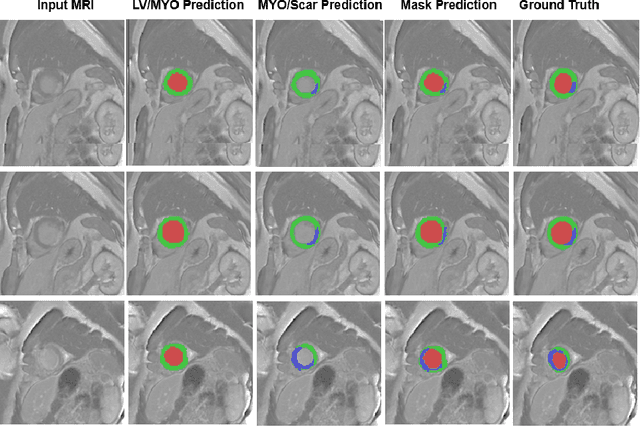

Abstract: In this paper, we propose a new deep learning framework for automatic myocardial infarction evaluation from clinical information and delayed-enhancement MRI (DE-MRI). The proposed framework addresses two tasks. The first task is automatic detection of myocardial contours, the infarcted area, the no-reflow area, and the left ventricular cavity from a short-axis DE-MRI series. It employs two segmentation neural networks. The first network is used to segment the anatomical structures such as the myocardium and left ventricular cavity. The second network is used to segment the pathological areas such as myocardial infarction, myocardial no-reflow, and normal myocardial region. The segmented myocardium region from the first network is further used to refine the second network's pathological segmentation results. The second task is to automatically classify a given case as normal or pathological from clinical information with or without DE-MRI. A cascaded support vector machine (SVM) is employed to classify a given case from its associated clinical information. The segmented pathological areas from DE-MRI are also used for the classification task. We evaluated our method on the 2020 EMIDEC MICCAI challenge dataset. It yielded an average Dice index of 0.93 and 0.84, respectively, for the left ventricular cavity and the myocardium. Classification using only clinical information yielded 80% accuracy over five-fold cross-validation. Using the DE-MRI, our method can classify the cases with 93.3% accuracy. These experimental results reveal that the proposed method can automatically evaluate myocardial infarction.
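For readers unfamiliar with the Dice index reported above, the following is a minimal sketch of how such a per-structure overlap score is commonly computed, 2|A∩B| / (|A| + |B|). The function name `dice_index`, the label encoding, and the toy masks are illustrative assumptions and are not taken from the paper or the EMIDEC evaluation code.

```python
def dice_index(pred, truth, label):
    """Dice overlap for one label between two flattened label masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    pred_count = sum(1 for p in pred if p == label)
    truth_count = sum(1 for t in truth if t == label)
    overlap = sum(1 for p, t in zip(pred, truth) if p == label and t == label)
    if pred_count + truth_count == 0:
        # Assumed convention: a label absent from both masks scores 1.0.
        return 1.0
    return 2.0 * overlap / (pred_count + truth_count)


# Toy flattened masks (assumed encoding):
# 0 = background, 1 = left ventricular cavity, 2 = myocardium.
truth = [0, 1, 1, 2, 2, 2, 0, 0]
pred = [0, 1, 1, 1, 2, 2, 0, 0]

cavity_dice = dice_index(pred, truth, label=1)
myocardium_dice = dice_index(pred, truth, label=2)
```

A score of 1.0 means the predicted and reference regions coincide exactly, and 0.0 means they do not overlap at all. An average such as the 0.93 quoted for the cavity would be obtained by averaging this score over cases.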

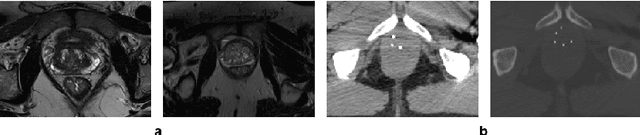

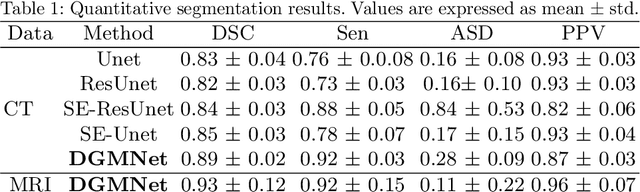

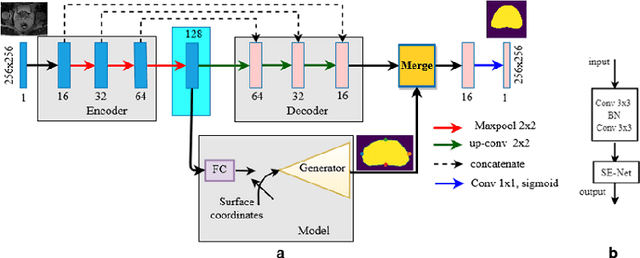

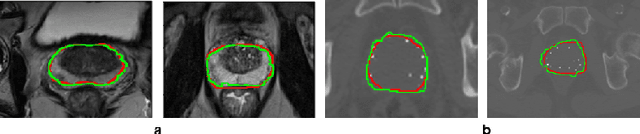

Deep generative model-driven multimodal prostate segmentation in radiotherapy

Oct 23, 2019

Abstract: Deep learning has shown unprecedented success in a variety of applications, such as computer vision and medical image analysis. However, there is still potential to improve segmentation in multimodal images by embedding prior knowledge via learning-based shape modeling and registration to learn the modality-invariant anatomical structure of organs. For example, in radiotherapy, automatic prostate segmentation is essential in prostate cancer diagnosis, therapy, and post-therapy assessment from T2-weighted MR or CT images. In this paper, we present a fully automatic deep generative model-driven multimodal prostate segmentation method using a convolutional neural network (DGMNet). The novelty of our method comes with its embedded generative neural network for learning-based shape modeling and its ability to adapt to different imaging modalities via learning-based registration. The proposed method includes a multi-task learning framework that combines convolutional feature extraction with embedded regression- and classification-based shape modeling. This enables the network to predict the deformable shape of an organ. We show that generative neural network-based shape modeling trained on a reliable contrast imaging modality (such as MRI) can be directly applied to a low-contrast imaging modality (such as CT) to achieve accurate prostate segmentation. The method was evaluated on MRI and CT datasets acquired from different clinical centers with large variations in contrast and scanning protocols. Experimental results reveal that our method can be used to automatically and accurately segment the prostate gland in different imaging modalities.