Giacomo Dabisias

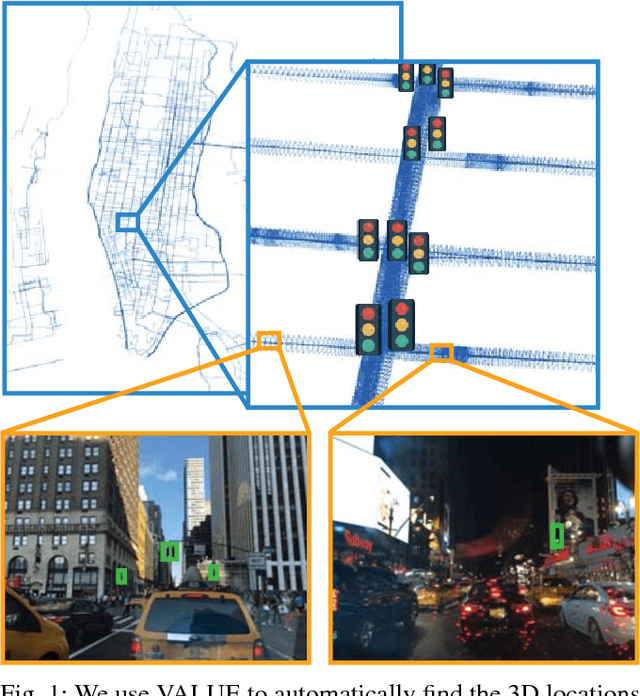

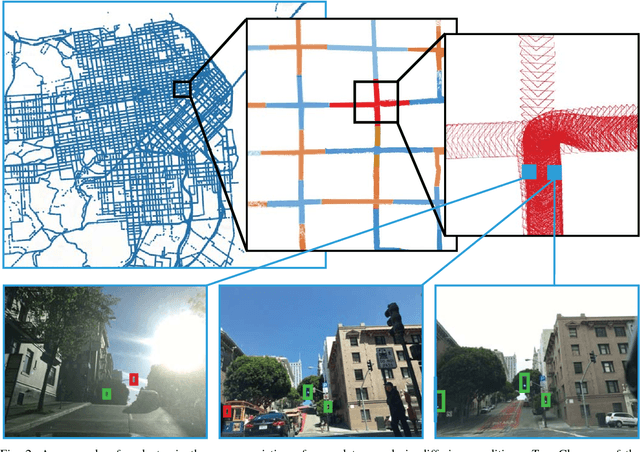

VALUE: Large Scale Voting-based Automatic Labelling for Urban Environments

Jun 05, 2020

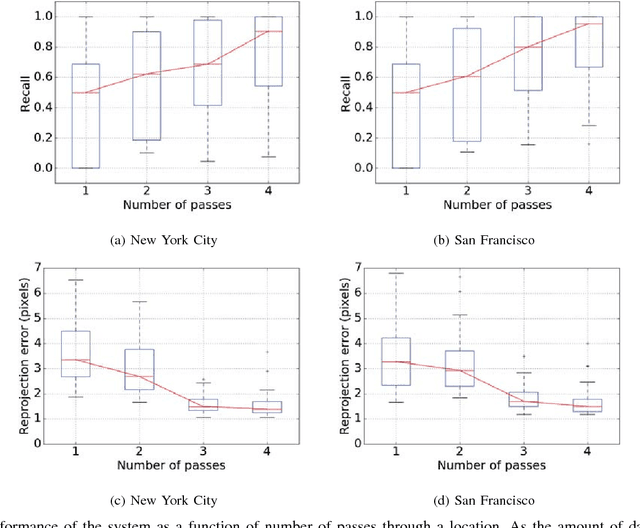

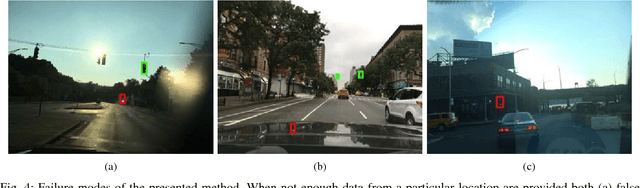

Abstract: This paper presents a simple and robust method for the automatic localisation of static 3D objects in large-scale urban environments. By exploiting the potential to merge a large volume of noisy but accurately localised 2D image data, we achieve superior performance in terms of both robustness and accuracy of the recovered 3D information. The method is based on a simple voting scheme that can be fully distributed and parallelised to scale to large scenarios. To evaluate the method, we collected city-scale data sets from New York City and San Francisco, consisting of almost 400k images spanning an area of 40 km$^2$, and used them to accurately recover the 3D positions of traffic lights. We demonstrate robust performance and show that the solution improves in quality over time as the amount of data increases.
