Geraldine Jeckeln

Human-Machine Comparison for Cross-Race Face Verification: Race Bias at the Upper Limits of Performance?

May 31, 2023

Abstract: Face recognition algorithms perform more accurately than humans in some cases, though humans and machines both show race-based accuracy differences. As algorithms continue to improve, it is important to continually assess their race bias relative to humans. We constructed a challenging test of 'cross-race' face verification and used it to compare humans and two state-of-the-art face recognition systems. Pairs of same- and different-identity faces of White and Black individuals were selected to be difficult for humans and an open-source implementation of the ArcFace face recognition algorithm from 2019 (5). Human participants (54 Black; 51 White) judged whether face pairs showed the same identity or different identities on a 7-point Likert-type scale. Two top-performing face recognition systems from the Face Recognition Vendor Test-ongoing performed the same test (7). By design, the test proved challenging for humans as a group, who performed above chance but far from perfectly. Both state-of-the-art face recognition systems scored perfectly (no errors), and therefore with equal accuracy for both races. We conclude that state-of-the-art systems for identity verification between two frontal face images of Black and White individuals can surpass the general population. Whether this result generalizes to challenging in-the-wild images is a pressing concern for deploying face recognition systems in unconstrained environments.
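As a rough illustration of how graded same/different judgments can be scored against chance, the sketch below computes the area under the ROC curve (AUC) from 7-point ratings. The abstract does not name the accuracy measure used, so the choice of AUC, the function name `auc_from_ratings`, and the ratings themselves are assumptions made only for this example. Under this measure, 0.5 corresponds to chance and 1.0 to error-free separation of same- and different-identity pairs.

```python
from itertools import product


def auc_from_ratings(same_ratings, diff_ratings):
    """Return the AUC for rating data (hypothetical helper, not from the paper).

    Higher ratings are taken to mean "more confident the pair shows the
    same identity". The AUC is the probability that a randomly chosen
    same-identity pair receives a higher rating than a randomly chosen
    different-identity pair, with ties counted as half.
    """
    if not same_ratings or not diff_ratings:
        raise ValueError("need at least one rating for each pair type")
    wins = 0.0
    for s, d in product(same_ratings, diff_ratings):
        if s > d:
            wins += 1.0
        elif s == d:
            wins += 0.5
    return wins / (len(same_ratings) * len(diff_ratings))


# Made-up ratings on a 1-7 scale (1 = sure different, 7 = sure same).
same_pairs = [7, 6, 4, 5, 3]
diff_pairs = [2, 4, 1, 5, 3]
auc = auc_from_ratings(same_pairs, diff_pairs)
print(f"AUC = {auc:.2f}")
```

A system that makes no errors, as reported for the two state-of-the-art systems, would score 1.0 on this measure for both races, which is why perfect accuracy implies equal accuracy across groups.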

The Influence of the Other-Race Effect on Susceptibility to Face Morphing Attacks

Apr 26, 2022

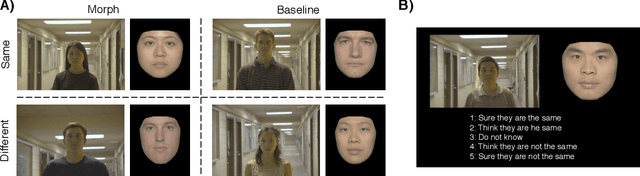

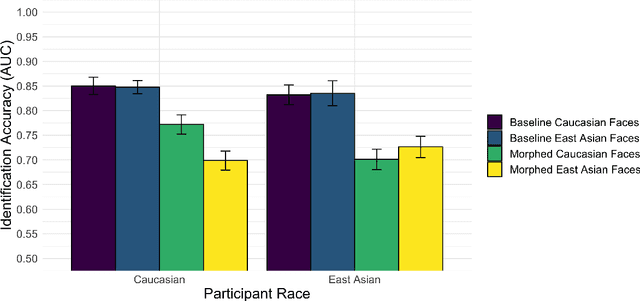

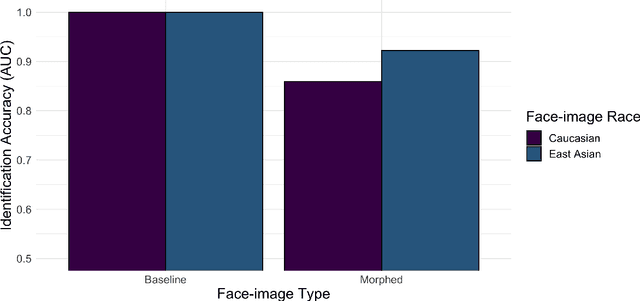

Abstract: Facial morphs created between two identities resemble both of the faces used to create the morph. Consequently, humans and machines are prone to mistake morphs made from two identities for either of the faces used to create the morph. This vulnerability has been exploited in "morph attacks" in security scenarios. Here, we asked whether the "other-race effect" (ORE) -- the human advantage for identifying own- vs. other-race faces -- exacerbates morph attack susceptibility for humans. We also asked whether face-identification performance in a deep convolutional neural network (DCNN) is affected by the race of morphed faces. Caucasian (CA) and East-Asian (EA) participants performed a face-identity matching task on pairs of CA and EA face images in two conditions. In the morph condition, different-identity pairs consisted of an image of identity "A" and a 50/50 morph between images of identity "A" and "B". In the baseline condition, morphs of different identities never appeared. As expected, morphs were identified mistakenly more often than original face images. Moreover, CA participants showed an advantage for CA faces in comparison to EA faces (a partial ORE). Of primary interest, morph identification was substantially worse for cross-race faces than for own-race faces. Similar to humans, the DCNN performed more accurately for original face images than for morphed image pairs. Notably, the deep network proved substantially more accurate than humans in both cases. The results point to the possibility that DCNNs might be useful for improving face identification accuracy when morphed faces are presented. They also indicate the significance of the ORE in morph attack susceptibility in applied settings.
