George Boateng

Brilla AI: AI Contestant for the National Science and Maths Quiz

Mar 04, 2024

Abstract:The African continent lacks enough qualified teachers which hampers the provision of adequate learning support. An AI could potentially augment the efforts of the limited number of teachers, leading to better learning outcomes. Towards that end, this work describes and evaluates the first key output for the NSMQ AI Grand Challenge, which proposes a robust, real-world benchmark for such an AI: "Build an AI to compete live in Ghana's National Science and Maths Quiz (NSMQ) competition and win - performing better than the best contestants in all rounds and stages of the competition". The NSMQ is an annual live science and mathematics competition for senior secondary school students in Ghana in which 3 teams of 2 students compete by answering questions across biology, chemistry, physics, and math in 5 rounds over 5 progressive stages until a winning team is crowned for that year. In this work, we built Brilla AI, an AI contestant that we deployed to unofficially compete remotely and live in the Riddles round of the 2023 NSMQ Grand Finale, the first of its kind in the 30-year history of the competition. Brilla AI is currently available as a web app that livestreams the Riddles round of the contest, and runs 4 machine learning systems: (1) speech to text (2) question extraction (3) question answering and (4) text to speech that work together in real-time to quickly and accurately provide an answer, and then say it with a Ghanaian accent. In its debut, our AI answered one of the 4 riddles ahead of the 3 human contesting teams, unofficially placing second (tied). Improvements and extensions of this AI could potentially be deployed to offer science tutoring to students and eventually enable millions across Africa to have one-on-one learning interactions, democratizing science education.

Leveraging AI to Advance Science and Computing Education across Africa: Progress, Challenges, and Opportunities

Feb 12, 2024

Abstract:Across the African continent, students grapple with various educational challenges, including limited access to essential resources such as computers, internet connectivity, reliable electricity, and a shortage of qualified teachers. Despite these challenges, recent advances in AI such as BERT and GPT-4 have demonstrated their potential for advancing education. Yet, these AI tools tend to be deployed and evaluated predominantly within the context of Western educational settings, with limited attention directed towards the unique needs and challenges faced by students in Africa. In this book chapter, we describe our works developing and deploying AI in Education tools in Africa: (1) SuaCode, an AI-powered app that enables Africans to learn to code using their smartphones, (2) AutoGrad, an automated grading and feedback tool for graphical and interactive coding assignments, (3) a tool for code plagiarism detection that shows visual evidence of plagiarism, (4) Kwame, a bilingual AI teaching assistant for coding courses, (5) Kwame for Science, a web-based AI teaching assistant that provides instant answers to students' science questions and (6) Brilla AI, an AI contestant for the National Science and Maths Quiz competition. We discuss challenges and potential opportunities to use AI to advance science and computing education across Africa.

Towards an AI to Win Ghana's National Science and Maths Quiz

Aug 08, 2023

Abstract:Can an AI win Ghana's National Science and Maths Quiz (NSMQ)? That is the question we seek to answer in the NSMQ AI project, an open-source project that is building AI to compete live in the NSMQ and win. The NSMQ is an annual live science and mathematics competition for senior secondary school students in Ghana in which 3 teams of 2 students compete by answering questions across biology, chemistry, physics, and math in 5 rounds over 5 progressive stages until a winning team is crowned for that year. The NSMQ is an exciting live quiz competition with interesting technical challenges across speech-to-text, text-to-speech, question-answering, and human-computer interaction. In this ongoing work that began in January 2023, we give an overview of the project, describe each of the teams, progress made thus far, and the next steps toward our planned launch and debut of the AI in October for NSMQ 2023. An AI that conquers this grand challenge can have real-world impact on education such as enabling millions of students across Africa to have one-on-one learning support from this AI.

Real-World Deployment and Evaluation of Kwame for Science, An AI Teaching Assistant for Science Education in West Africa

Feb 21, 2023

Abstract:Africa has a high student-to-teacher ratio which limits students' access to teachers for learning support such as educational question answering. In this work, we extended Kwame, our previous AI teaching assistant for coding education, adapted it for science education, and deployed it as a web app. Kwame for Science provides passages from well-curated knowledge sources and related past national exam questions as answers to questions from students based on the Integrated Science subject of the West African Senior Secondary Certificate Examination (WASSCE). Furthermore, students can view past national exam questions along with their answers and filter by year, question type (objectives, theory, and practicals), and topics that were automatically categorized by a topic detection model which we developed (91% unweighted average recall). We deployed Kwame for Science in the real world over 8 months and had 750 users across 32 countries (15 in Africa) and 1.5K questions asked. Our evaluation showed an 87.2% top 3 accuracy (n=109 questions) implying that Kwame for Science has a high chance of giving at least one useful answer among the 3 displayed. We categorized the reasons the model incorrectly answered questions to provide insights for future improvements. We also share challenges and lessons with the development, deployment, and human-computer interaction component of such a tool to enable other researchers to deploy similar tools. With a first-of-its-kind tool within the African context, Kwame for Science has the potential to enable the delivery of scalable, cost-effective, and quality remote education to millions of people across Africa.

Can an AI Win Ghana's National Science and Maths Quiz? An AI Grand Challenge for Education

Jan 30, 2023

Abstract:There is a lack of enough qualified teachers across Africa which hampers efforts to provide adequate learning support such as educational question answering (EQA) to students. An AI system that can enable students to ask questions via text or voice and get instant answers will make high-quality education accessible. Despite advances in the field of AI, there exists no robust benchmark or challenge to enable building such an (EQA) AI within the African context. Ghana's National Science and Maths Quiz competition (NSMQ) is the perfect competition to evaluate the potential of such an AI due to its wide coverage of scientific fields, variety of question types, highly competitive nature, and live, real-world format. The NSMQ is a Jeopardy-style annual live quiz competition in which 3 teams of 2 students compete by answering questions across biology, chemistry, physics, and math in 5 rounds over 5 progressive stages until a winning team is crowned for that year. In this position paper, we propose the NSMQ AI Grand Challenge, an AI Grand Challenge for Education using Ghana's National Science and Maths Quiz competition (NSMQ) as a case study. Our proposed grand challenge is to "Build an AI to compete live in Ghana's National Science and Maths Quiz (NSMQ) competition and win - performing better than the best contestants in all rounds and stages of the competition." We describe the competition, and key technical challenges to address along with ideas from recent advances in machine learning that could be leveraged to solve this challenge. This position paper is a first step towards conquering such a challenge and importantly, making advances in AI for education in the African context towards democratizing high-quality education across Africa.

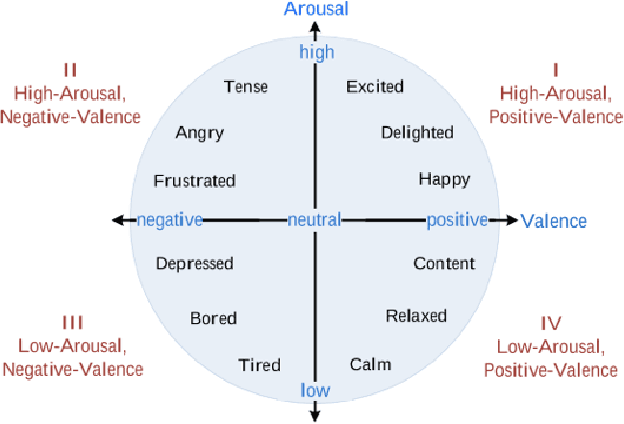

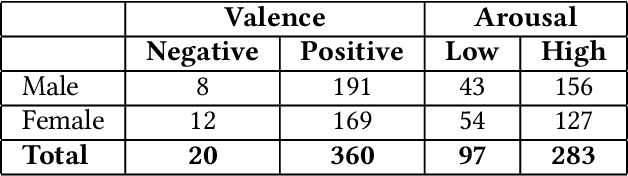

Multimodal Emotion Recognition among Couples from Lab Settings to Daily Life using Smartwatches

Dec 21, 2022

Abstract:Couples generally manage chronic diseases together and the management takes an emotional toll on both patients and their romantic partners. Consequently, recognizing the emotions of each partner in daily life could provide an insight into their emotional well-being in chronic disease management. The emotions of partners are currently inferred in the lab and daily life using self-reports which are not practical for continuous emotion assessment or observer reports which are manual, time-intensive, and costly. Currently, there exists no comprehensive overview of works on emotion recognition among couples. Furthermore, approaches for emotion recognition among couples have (1) focused on English-speaking couples in the U.S., (2) used data collected from the lab, and (3) performed recognition using observer ratings rather than partner's self-reported / subjective emotions. In this body of work contained in this thesis (8 papers - 5 published and 3 currently under review in various journals), we fill the current literature gap on couples' emotion recognition, develop emotion recognition systems using 161 hours of data from a total of 1,051 individuals, and make contributions towards taking couples' emotion recognition from the lab which is the status quo, to daily life. This thesis contributes toward building automated emotion recognition systems that would eventually enable partners to monitor their emotions in daily life and enable the delivery of interventions to improve their emotional well-being.

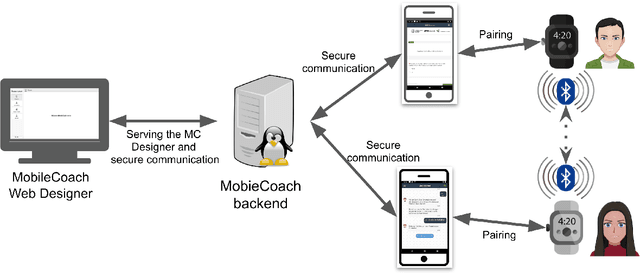

"Are you okay, honey?": Recognizing Emotions among Couples Managing Diabetes in Daily Life using Multimodal Real-World Smartwatch Data

Aug 22, 2022

Abstract:Couples generally manage chronic diseases together and the management takes an emotional toll on both patients and their romantic partners. Consequently, recognizing the emotions of each partner in daily life could provide an insight into their emotional well-being in chronic disease management. Currently, the process of assessing each partner's emotions is manual, time-intensive, and costly. Despite the existence of works on emotion recognition among couples, none of these works have used data collected from couples' interactions in daily life. In this work, we collected 85 hours (1,021 5-minute samples) of real-world multimodal smartwatch sensor data (speech, heart rate, accelerometer, and gyroscope) and self-reported emotion data (n=612) from 26 partners (13 couples) managing diabetes mellitus type 2 in daily life. We extracted physiological, movement, acoustic, and linguistic features, and trained machine learning models (support vector machine and random forest) to recognize each partner's self-reported emotions (valence and arousal). Our results from the best models (balanced accuracies of 63.8% and 78.1% for arousal and valence respectively) are better than chance and better than our prior work that also used data from German-speaking, Swiss-based couples, albeit in the lab. This work contributes toward building automated emotion recognition systems that would eventually enable partners to monitor their emotions in daily life and enable the delivery of interventions to improve their emotional well-being.

Kwame for Science: An AI Teaching Assistant for Science Education in West Africa

Jun 28, 2022

Abstract:Africa has a high student-to-teacher ratio which limits students' access to teachers. Consequently, students struggle to get answers to their questions. In this work, we extended Kwame, our previous AI teaching assistant, adapted it for science education, and deployed it as a web app. Kwame for Science answers questions of students based on the Integrated Science subject of the West African Senior Secondary Certificate Examination (WASSCE). Kwame for Science is a Sentence-BERT-based question-answering web app that displays 3 paragraphs as answers along with a confidence score in response to science questions. Additionally, it displays the top 5 related past exam questions and their answers. Our preliminary evaluation of Kwame for Science with a 2.5-week real-world deployment showed a top 3 accuracy of 87.5% (n=56) with 190 users across 11 countries. Kwame for Science will enable the delivery of scalable, cost-effective, and quality remote education to millions of people across Africa.

BERT meets LIWC: Exploring State-of-the-Art Language Models for Predicting Communication Behavior in Couples' Conflict Interactions

Jun 03, 2021

Abstract:Many processes in psychology are complex, such as dyadic interactions between two interacting partners (e.g. patient-therapist, intimate relationship partners). Nevertheless, many basic questions about interactions are difficult to investigate because dyadic processes can be within a person and between partners, they are based on multimodal aspects of behavior and unfold rapidly. Current analyses are mainly based on the behavioral coding method, whereby human coders annotate behavior based on a coding schema. But coding is labor-intensive, expensive, slow, and focuses on few modalities. Current approaches in psychology use LIWC for analyzing couples' interactions. However, advances in natural language processing such as BERT could enable the development of systems to potentially automate behavioral coding, which in turn could substantially improve psychological research. In this work, we train machine learning models to automatically predict positive and negative communication behavioral codes of 368 German-speaking Swiss couples during an 8-minute conflict interaction on a fine-grained scale (10-second sequences) using linguistic features and paralinguistic features derived with openSMILE. Our results show that both simpler TF-IDF features as well as more complex BERT features performed better than LIWC, and that adding paralinguistic features did not improve the performance. These results suggest it might be time to consider modern alternatives to LIWC, the de facto linguistic features in psychology, for prediction tasks in couples research. This work is a further step towards the automated coding of couples' behavior which could enhance couple research and therapy, and be utilized for other dyadic interactions as well.

"You made me feel this way": Investigating Partners' Influence in Predicting Emotions in Couples' Conflict Interactions using Speech Data

Jun 03, 2021

Abstract:How romantic partners interact with each other during a conflict influences how they feel at the end of the interaction and is predictive of whether the partners stay together in the long term. Hence understanding the emotions of each partner is important. Yet current approaches that are used include self-reports which are burdensome and hence limit the frequency of this data collection. Automatic emotion prediction could address this challenge. Insights from psychology research indicate that partners' behaviors influence each other's emotions in conflict interaction and hence, the behavior of both partners could be considered to better predict each partner's emotion. However, it is yet to be investigated how doing so compares to only using each partner's own behavior in terms of emotion prediction performance. In this work, we used BERT to extract linguistic features (i.e., what partners said) and openSMILE to extract paralinguistic features (i.e., how they said it) from a data set of 368 German-speaking Swiss couples (N = 736 individuals) who were videotaped during an 8-minute conflict interaction in the laboratory. Based on those features, we trained machine learning models to predict if partners feel positive or negative after the conflict interaction. Our results show that including the behavior of the other partner improves the prediction performance. Furthermore, for men, considering how their female partners spoke is most important and for women considering what their male partner said is most important in getting better prediction performance. This work is a step towards automatically recognizing each partner's emotion based on the behavior of both, which would enable a better understanding of couples in research, therapy, and the real world.
