Geetanjali Sharma

Generating Realistic Forehead-Creases for User Verification via Conditioned Piecewise Polynomial Curves

Jan 23, 2025

Abstract:We propose a trait-specific image generation method that models forehead creases geometrically using B-spline and Bézier curves. This approach ensures the realistic generation of both principal creases and non-prominent crease patterns, effectively constructing detailed and authentic forehead-crease images. These geometrically rendered images serve as visual prompts for a diffusion-based Edge-to-Image translation model, which generates corresponding mated samples. The resulting novel synthetic identities are then used to train a forehead-crease verification network. To enhance intra-subject diversity in the generated samples, we employ two strategies: (a) perturbing the control points of B-splines under defined constraints to maintain label consistency, and (b) applying image-level augmentations to the geometric visual prompts, such as dropout and elastic transformations, specifically tailored to crease patterns. By integrating the proposed synthetic dataset with real-world data, our method significantly improves the performance of forehead-crease verification systems under a cross-database verification protocol.
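The idea of drawing a crease as a parametric curve and jittering its control points within a bound can be illustrated with a small sketch. This is not the authors' implementation: it uses a single cubic Bézier curve rather than a B-spline, and the control-point coordinates, the `max_shift` bound, and all function names are hypothetical choices made for illustration.

```python
import random

def bezier_point(control_points, t):
    """Evaluate a Bezier curve at t in [0, 1] using De Casteljau's algorithm."""
    pts = [tuple(p) for p in control_points]
    # Repeatedly interpolate between neighbouring points until one remains.
    while len(pts) > 1:
        pts = [
            ((1 - t) * x0 + t * x1, (1 - t) * y0 + t * y1)
            for (x0, y0), (x1, y1) in zip(pts, pts[1:])
        ]
    return pts[0]

def sample_curve(control_points, n_samples=50):
    """Sample n_samples points along the curve, including both endpoints."""
    return [bezier_point(control_points, i / (n_samples - 1)) for i in range(n_samples)]

def perturb(control_points, max_shift, rng):
    """Shift every control point by at most max_shift per axis (assumed constraint)."""
    return [
        (x + rng.uniform(-max_shift, max_shift), y + rng.uniform(-max_shift, max_shift))
        for x, y in control_points
    ]

rng = random.Random(0)  # fixed seed so the sketch is reproducible
crease = [(10.0, 40.0), (60.0, 30.0), (120.0, 50.0), (180.0, 38.0)]  # hypothetical crease
variant = perturb(crease, max_shift=3.0, rng=rng)

base_curve = sample_curve(crease)
variant_curve = sample_curve(variant)
```

Because every point on a Bézier curve is a convex combination of its control points, bounding the control-point shift also bounds how far the perturbed curve can drift from the original. That is one simple way to keep a perturbed crease recognisably tied to the same synthetic identity.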

Impact of Iris Pigmentation on Performance Bias in Visible Iris Verification Systems: A Comparative Study

Nov 13, 2024

Abstract:Iris recognition technology plays a critical role in biometric identification systems, but its performance can be affected by variations in iris pigmentation. In this work, we investigate the impact of iris pigmentation on the efficacy of biometric recognition systems, focusing on a comparative analysis of blue and dark irises. Data sets were collected using multiple devices, including P1, P2, and P3 smartphones [4], to assess the robustness of the systems in different capture environments [19]. Both traditional machine learning techniques and deep learning models were used, namely Open-Iris, ViT-b, and ResNet50, to evaluate performance metrics such as Equal Error Rate (EER) and True Match Rate (TMR). Our results indicate that iris recognition systems generally exhibit higher accuracy for blue irises than for dark irises. Furthermore, we examined the generalization capabilities of these systems across different iris colors and devices, finding that while training on diverse datasets enhances recognition performance, the degree of improvement is contingent on the specific model and device used. Our analysis also identifies inherent biases in recognition performance related to iris color and cross-device variability. These findings underscore the need for more inclusive dataset collection and model refinement to reduce bias and promote equitable biometric recognition across varying iris pigmentation and device configurations.
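For readers unfamiliar with the Equal Error Rate, the following minimal sketch shows one common way to approximate it from similarity scores. The scores are made-up toy values, not data from the study, and the threshold scan over observed scores is an assumed simplification (evaluation toolkits often interpolate between thresholds instead).

```python
def error_rates(genuine, impostor, threshold):
    """Return (FMR, FNMR) when scores >= threshold are accepted as matches."""
    fmr = sum(s >= threshold for s in impostor) / len(impostor)   # false matches
    fnmr = sum(s < threshold for s in genuine) / len(genuine)     # false non-matches
    return fmr, fnmr

def equal_error_rate(genuine, impostor):
    """Approximate the EER by trying every observed score as a threshold."""
    best = None
    for thr in sorted(set(genuine) | set(impostor)):
        fmr, fnmr = error_rates(genuine, impostor, thr)
        gap = abs(fmr - fnmr)
        # Keep the threshold where the two error rates are closest.
        if best is None or gap < best[0]:
            best = (gap, (fmr + fnmr) / 2, thr)
    return best[1], best[2]

# Toy similarity scores (hypothetical): higher means more similar.
genuine_scores = [0.91, 0.85, 0.78, 0.66, 0.59]
impostor_scores = [0.72, 0.41, 0.35, 0.22, 0.10]

eer, eer_threshold = equal_error_rate(genuine_scores, impostor_scores)
```

With these toy scores the two error rates meet at a threshold of 0.66, where one of five impostor scores is accepted and one of five genuine scores is rejected. Running the same computation separately per iris color or per device is one straightforward way to expose the kind of performance gap the abstract describes.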

Synthetic Forehead-creases Biometric Generation for Reliable User Verification

Aug 28, 2024

Abstract:Recent studies have emphasized the potential of forehead-crease patterns as an alternative to face, iris, and periocular recognition, presenting contactless and convenient solutions, particularly in situations where faces are covered by surgical masks. However, collecting forehead data presents challenges, including cost and time constraints, as developing and optimizing forehead verification methods requires a substantial number of high-quality images. To tackle these challenges, the generation of synthetic biometric data has gained traction due to its ability to protect privacy while enabling effective training of deep learning-based biometric verification methods. In this paper, we present a new framework to synthesize forehead-crease image data while maintaining important features, such as uniqueness and realism. The proposed framework consists of two main modules: a Subject-Specific Generation Module (SSGM), based on an image-to-image Brownian Bridge Diffusion Model (BBDM), which learns a one-to-many mapping between image pairs to generate identity-aware synthetic forehead creases corresponding to real subjects, and a Subject-Agnostic Generation Module (SAGM), which samples new synthetic identities with assistance from the SSGM. We evaluate the diversity and realism of the generated forehead-crease images primarily using the Fréchet Inception Distance (FID) and the Structural Similarity Index Measure (SSIM). In addition, we assess the utility of synthetically generated forehead-crease images using a forehead-crease verification system (FHCVS). The results indicate an improvement in the verification accuracy of the FHCVS by utilizing synthetic data.
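As a rough illustration of what SSIM measures, the sketch below computes the index over a whole grayscale image treated as a single window. This is an assumed simplification: the standard SSIM averages the same statistic over local windows, and the tiny 2x2 "images" and the function name `global_ssim` are hypothetical. The constants follow the commonly used defaults K1 = 0.01 and K2 = 0.03.

```python
def global_ssim(img_a, img_b, dynamic_range=255.0):
    """Single-window SSIM between two equally sized grayscale images (lists of rows)."""
    a = [float(v) for row in img_a for v in row]
    b = [float(v) for row in img_b for v in row]
    if not a or len(a) != len(b):
        raise ValueError("images must be non-empty and have the same size")
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((v - mu_a) * (v - mu_a) for v in a) / n
    var_b = sum((v - mu_b) * (v - mu_b) for v in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    # Small constants that stabilise the ratio when means or variances are near zero.
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    numerator = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    denominator = (mu_a * mu_a + mu_b * mu_b + c1) * (var_a + var_b + c2)
    return numerator / denominator

pattern = [[0, 255], [255, 0]]       # toy 2x2 image
inverted = [[255, 0], [0, 255]]      # same structure with contrast flipped

same_score = global_ssim(pattern, pattern)
flipped_score = global_ssim(pattern, inverted)
```

An image compared with itself scores 1, while a structurally inverted image scores close to -1. For synthetic data, SSIM between generated samples is often read as a diversity signal, since lower values indicate less structural repetition.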

FH-SSTNet: Forehead Creases based User Verification using Spatio-Spatial Temporal Network

Mar 24, 2024

Abstract:Biometric authentication that utilizes contactless features, such as forehead patterns, has become increasingly important for identity verification and access management. The proposed method is based on learning a 3D spatio-spatial temporal convolution to create detailed pictures of forehead patterns. We introduce a new CNN model called the Forehead Spatio-Spatial Temporal Network (FH-SSTNet), which utilizes a 3D CNN architecture with triplet loss to capture distinguishing features. We enhance the model's discrimination capability using Arcloss in the network's head. Experiments on the Forehead Creases version 1 (FH-V1) dataset, containing 247 unique subjects, show that FH-SSTNet outperforms existing methods and pre-trained CNNs such as ResNet50 for forehead-based user verification, confirming its effectiveness in identity authentication.
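The triplet loss mentioned above can be sketched in a few lines. This is one common formulation (Euclidean distance on L2-normalised embeddings with a fixed margin), not necessarily the exact variant used in FH-SSTNet; the 2-D embeddings, the margin of 0.2, and the function names are hypothetical.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]

def euclidean(u, v):
    """Euclidean distance between two equally sized vectors."""
    return math.sqrt(sum((a - b) * (a - b) for a, b in zip(u, v)))

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge loss that pushes the negative at least `margin` farther than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

# Toy embeddings (hypothetical): anchor and positive share an identity.
anchor = l2_normalize([1.0, 0.0])
positive = l2_normalize([0.9, 0.1])
negative = l2_normalize([0.0, 1.0])

easy_loss = triplet_loss(anchor, positive, negative)   # already well separated
hard_loss = triplet_loss(anchor, negative, positive)   # roles swapped: violates the margin
```

The loss is zero once the same-identity pair is closer than the different-identity pair by at least the margin, so training effort concentrates on triplets that still violate that ordering.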

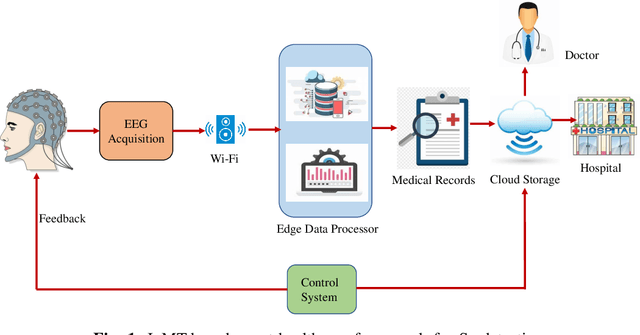

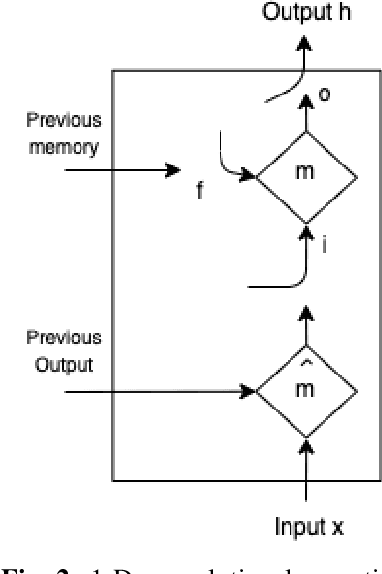

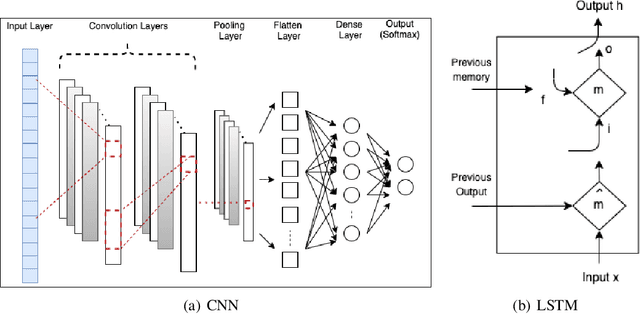

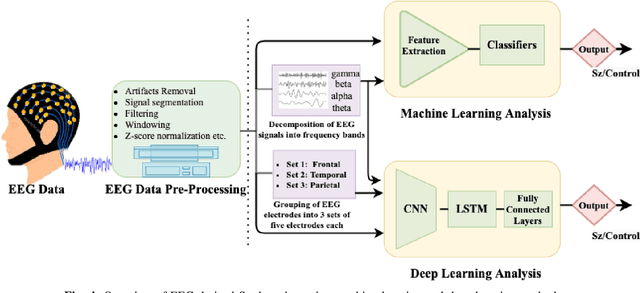

Novel EEG based Schizophrenia Detection with IoMT Framework for Smart Healthcare

Nov 19, 2021

Abstract:In the field of neuroscience, brain activity analysis has always been considered an important area. Schizophrenia (Sz) is a brain disorder that severely affects the thinking, behaviour, and feelings of people all around the world. Electroencephalography (EEG) has proved to be an efficient biomarker in Sz detection. EEG is a non-linear time-series signal and utilizing it for investigation is rather crucial due to its non-linear structure. This paper aims to improve the performance of EEG-based Sz detection using a deep learning approach. A novel hybrid deep learning model known as SzHNN (Schizophrenia Hybrid Neural Network), a combination of Convolutional Neural Networks (CNN) and Long Short-Term Memory (LSTM), has been proposed. The CNN is used for local feature extraction and the LSTM for classification. The proposed model has been compared with CNN-only, LSTM-only, and machine learning-based models. All the models have been evaluated on two different datasets, wherein Dataset 1 consists of 19 subjects and Dataset 2 consists of 16 subjects. Several experiments have been conducted using various parametric settings on different frequency bands and using different sets of electrodes on the scalp. Based on all the experiments, it is evident that the proposed hybrid model (SzHNN) provides the highest classification accuracy of 99.9% in comparison to other existing models. The proposed model overcomes the influence of different frequency bands and even showed a much better accuracy of 91% with only 5 electrodes. The proposed model is also evaluated on the Internet of Medical Things (IoMT) framework for smart healthcare and remote monitoring applications.
