Gaofei Han

Collision Avoidance for Multiple UAVs in Unknown Scenarios with Causal Representation Disentanglement

Jul 04, 2024

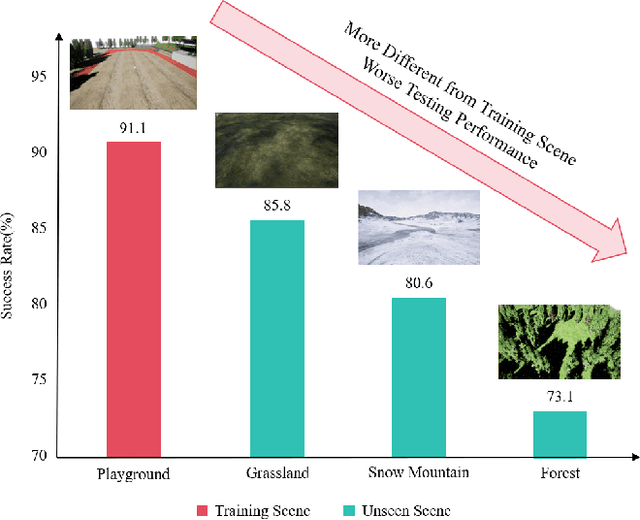

Abstract: Deep reinforcement learning (DRL) has achieved remarkable progress in online path planning tasks for multi-UAV systems. However, existing DRL-based methods often suffer from performance degradation in unseen scenarios, because non-causal factors in visual representations adversely affect policy learning. To address this issue, we propose a novel representation learning approach, i.e., causal representation disentanglement, which identifies the causal and non-causal factors in representations. We then pass only the causal factors to subsequent policy learning, explicitly eliminating the influence of non-causal factors and thereby improving the generalization ability of DRL models. Experimental results show that our proposed method achieves robust navigation performance and effective collision avoidance, especially in unseen scenarios, and significantly outperforms existing state-of-the-art (SOTA) algorithms.

Robust Policy Learning for Multi-UAV Collision Avoidance with Causal Feature Selection

Jul 04, 2024

Abstract: In unseen and complex outdoor environments, collision avoidance navigation for unmanned aerial vehicle (UAV) swarms is a challenging problem, because it requires UAVs to navigate through various obstacles and complex backgrounds. Existing collision avoidance navigation methods based on deep reinforcement learning (DRL) show promising performance but generalize poorly, resulting in performance degradation in unseen environments. To address this issue, we investigate the cause of weak generalization ability in DRL and propose a novel causal feature selection module. This module can be integrated into the policy network and effectively filters out non-causal factors in representations, thereby reducing the influence of spurious correlations between non-causal factors and action predictions. Experimental results demonstrate that our proposed method achieves robust navigation performance and effective collision avoidance, especially in scenarios with unseen backgrounds and obstacles, and significantly outperforms existing state-of-the-art algorithms.
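The abstract does not describe how the causal feature selection module is built. As a rough illustration only, and not the authors' implementation, one generic way to filter feature channels before a policy head is a per-channel gate: each channel gets a score, and channels whose gate falls below a threshold are zeroed out. In the sketch below, the function name `select_causal_features`, the gate logits, and the 0.5 threshold are all hypothetical; in a real system the logits would be learned rather than hand-set.

```python
import math


def sigmoid(x):
    """Map a real-valued logit to the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def select_causal_features(features, gate_logits, threshold=0.5):
    """Zero out feature channels whose gate value is below `threshold`.

    Hypothetical sketch: `gate_logits` stands in for per-channel scores
    that a trained selection module might produce. Hand-set here.
    """
    if len(features) != len(gate_logits):
        raise ValueError("features and gate_logits must have equal length")
    # Hard 0/1 mask: keep a channel only if its gate passes the threshold.
    mask = [1.0 if sigmoid(g) >= threshold else 0.0 for g in gate_logits]
    # Element-wise product removes the masked channels from the representation.
    filtered = [f * m for f, m in zip(features, mask)]
    return filtered, mask


# Toy representation with four channels (values are made up).
features = [0.8, -1.2, 0.3, 2.0]
gate_logits = [3.0, -2.0, 0.5, -4.0]

filtered, mask = select_causal_features(features, gate_logits)
print(mask)
print(filtered)
```

Only the channels that pass the gate reach the downstream policy, so a channel that happens to correlate with actions in training backgrounds but is gated off cannot influence action prediction at test time.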
