Ganit Kupershmidt

A Penny for Your Thoughts: Self-Supervised Reconstruction of Natural Movies from Brain Activity

Jun 10, 2022

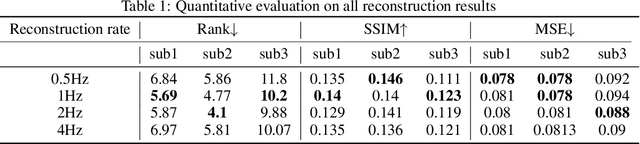

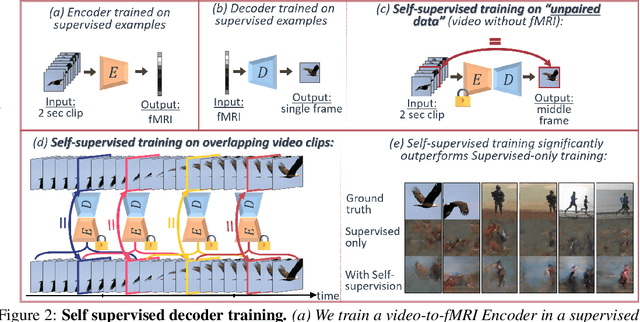

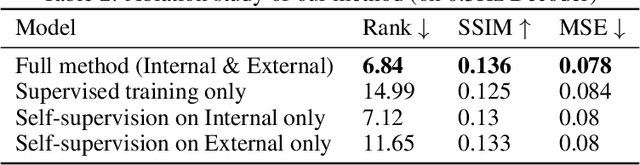

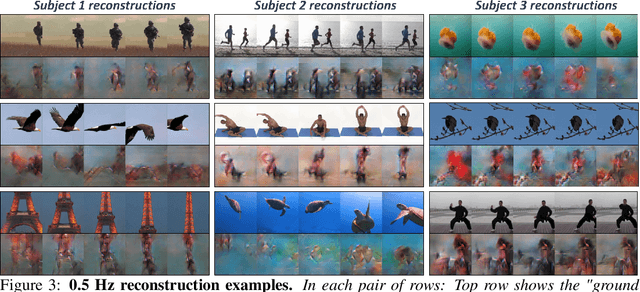

Abstract: Reconstructing natural videos from fMRI brain recordings is very challenging, for two main reasons: (i) because fMRI data acquisition is difficult, only a limited number of supervised samples is available, which is not enough to cover the huge space of natural videos; and (ii) the temporal resolution of fMRI recordings is much lower than the frame rate of natural videos. In this paper, we propose a self-supervised approach for natural-movie reconstruction. By employing cycle-consistency over Encoding-Decoding natural videos, we can: (i) exploit the full frame rate of the training videos, rather than being limited to clips that correspond to fMRI recordings; and (ii) exploit massive amounts of external natural videos which the subjects never saw inside the fMRI machine. Together, these increase the applicable training data by several orders of magnitude and introduce natural video priors, as well as temporal coherence, into the decoding network. Our approach significantly outperforms competing methods, since those train only on the limited supervised data. We further introduce a new and simple temporal prior of natural videos which, when folded into our fMRI decoder, further allows us to reconstruct videos at a higher frame rate (HFR) of up to 8x the original fMRI sample rate.
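To make the cycle-consistency idea in the abstract concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the Encoder and Decoder are stood in for by small linear maps (`E`, `D`), and the function names, the weighting factor `alpha`, and the way the two terms are summed are all assumptions chosen for illustration. The point it shows is that the cycle term needs only video clips, so clips without any fMRI recording can still contribute to training the decoder.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def supervised_loss(D, fmri, clip):
    """Paired term: decode a recorded fMRI sample and compare to the clip seen."""
    return mse(matvec(D, fmri), clip)


def cycle_loss(E, D, clip):
    """Unpaired term: clip -> predicted fMRI (E) -> reconstructed clip (D).

    No fMRI recording is required, so any natural video clip can be used.
    """
    return mse(matvec(D, matvec(E, clip)), clip)


def total_loss(E, D, paired, unpaired, alpha=1.0):
    """Hypothetical combined objective: supervised term plus weighted cycle term.

    paired   -- list of (fmri_vector, clip_vector) tuples
    unpaired -- list of clip_vector with no matching fMRI
    alpha    -- assumed weighting of the cycle term (illustrative only)
    """
    sup = sum(supervised_loss(D, f, c) for f, c in paired) / len(paired)
    cyc = sum(cycle_loss(E, D, c) for c in unpaired) / len(unpaired)
    return sup + alpha * cyc
```

In this toy setting, `paired` would hold the few clips that have fMRI recordings, while `unpaired` can be far larger, for example every frame-rate-shifted window of the training videos plus external videos. In practice both maps would be neural networks trained by gradient descent rather than fixed matrices.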
