Gang Xia

Safe Reinforcement Learning Filter for Multicopter Collision-Free Tracking under disturbances

Oct 09, 2024

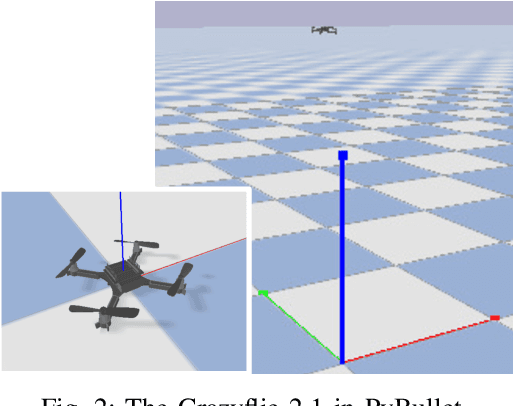

Abstract: This paper proposes a safe reinforcement learning filter (SRLF) for multicopter collision-free trajectory tracking under input disturbances. A novel robust control barrier function (RCBF), together with its analysis techniques, is introduced to avoid collisions in the presence of unknown disturbances during tracking. To ensure that the system state remains within the safe set, an RCBF gain is designed into the control action. A safety filter transforms unsafe reinforcement learning (RL) control inputs into safe ones, allowing RL training to proceed without explicitly considering safety constraints. The SRLF obtains control actions with rigorous safety guarantees by solving a quadratic programming (QP) problem that incorporates the forward invariance of the RCBF and input saturation constraints. Both simulation and real-world experiments on multicopters demonstrate the effectiveness of the SRLF in achieving collision-free tracking under input disturbances and saturation.
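The QP-based filtering idea in the abstract above can be illustrated with a one-dimensional toy problem. This is a minimal sketch under stated assumptions, not the paper's RCBF or its multicopter model: the dynamics `x_dot = u + d`, the barrier `h(x) = x_max - x`, the function name `safe_filter`, and the parameters `alpha`, `d_max`, and `u_max` are all hypothetical choices made for illustration. In one dimension, the QP "minimize `(u - u_rl)^2` subject to the robust barrier condition and input saturation" reduces to clipping the RL action onto an interval.

```python
def safe_filter(u_rl, x, x_max=1.0, alpha=2.0, d_max=0.2, u_max=1.0):
    """Toy 1-D safety filter (illustrative assumption, not the paper's method).

    Assumed dynamics: x_dot = u + d, with unknown disturbance |d| <= d_max.
    Assumed safe set: h(x) = x_max - x >= 0.
    """
    h = x_max - x
    # Robust barrier condition: h_dot = -(u + d) >= -alpha * h for every
    # admissible d. The worst case is d = d_max, giving u <= alpha*h - d_max.
    upper = min(u_max, alpha * h - d_max)
    lower = -u_max  # input saturation
    if upper < lower:
        raise ValueError("barrier and saturation constraints are incompatible")
    # Closed-form solution of the 1-D QP: project u_rl onto [lower, upper].
    return min(max(u_rl, lower), upper)


def simulate(steps=2000, dt=0.01, d_max=0.2):
    """Euler rollout with an aggressive 'RL' input and worst-case disturbance."""
    x, trajectory = 0.0, []
    for _ in range(steps):
        u = safe_filter(u_rl=1.0, x=x, d_max=d_max)
        x += dt * (u + d_max)  # disturbance always pushes toward the boundary
        trajectory.append(x)
    return trajectory
```

Calling `simulate()` drives the state toward the boundary `x_max` while the filter reduces the commanded input so that the state does not cross it in this toy setting. A multi-dimensional version would generally need a numerical QP solver rather than the clipping shortcut used here.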

A Safety Modulator Actor-Critic Method in Model-Free Safe Reinforcement Learning and Application in UAV Hovering

Oct 09, 2024

Abstract: This paper proposes a safety modulator actor-critic (SMAC) method to satisfy safety constraints and mitigate overestimation in model-free safe reinforcement learning (RL). A safety modulator is developed to satisfy safety constraints by modulating actions, allowing the policy to ignore the safety constraints and focus on maximizing reward. Additionally, a distributional critic with a theoretical update rule for SMAC is proposed to mitigate the overestimation of Q-values under safety constraints. Both simulation and real-world experiments on hovering unmanned aerial vehicles (UAVs) confirm that SMAC effectively maintains safety constraints and outperforms mainstream baseline algorithms.
