
Gabriel Bayomi Tinoco Kalejaiye

Modular Approach to Machine Reading Comprehension: Mixture of Task-Aware Experts

Oct 04, 2022

Figures and Tables:

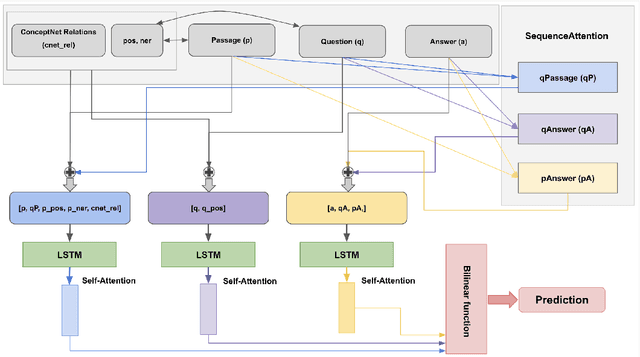

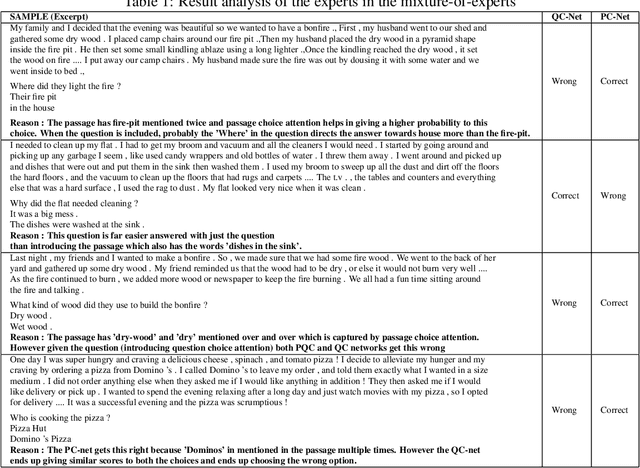

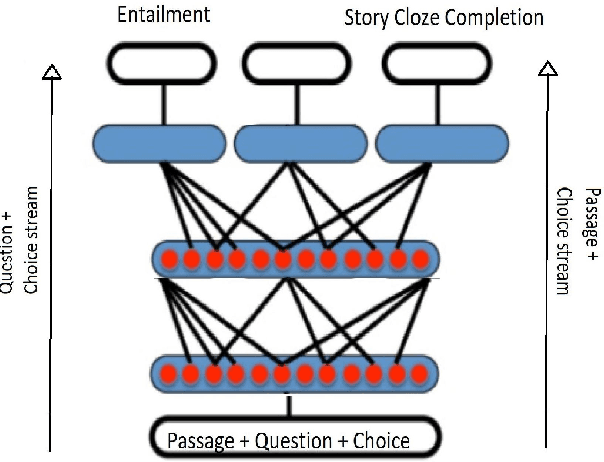

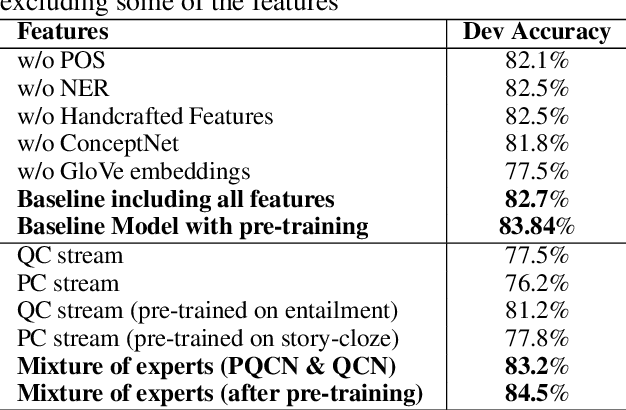

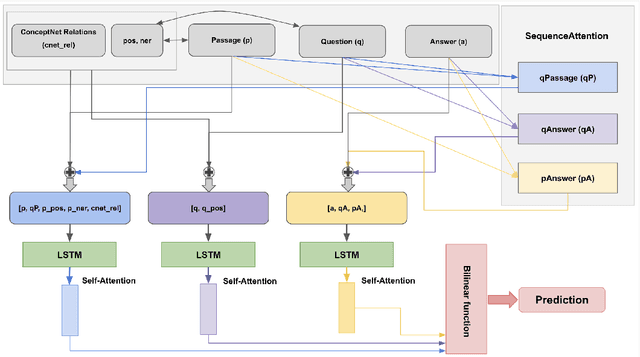

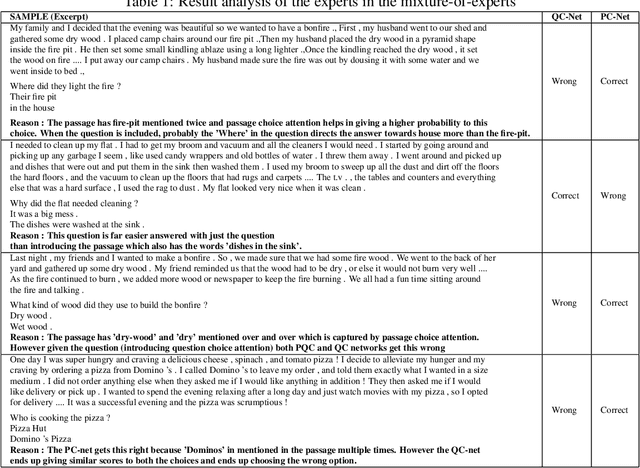

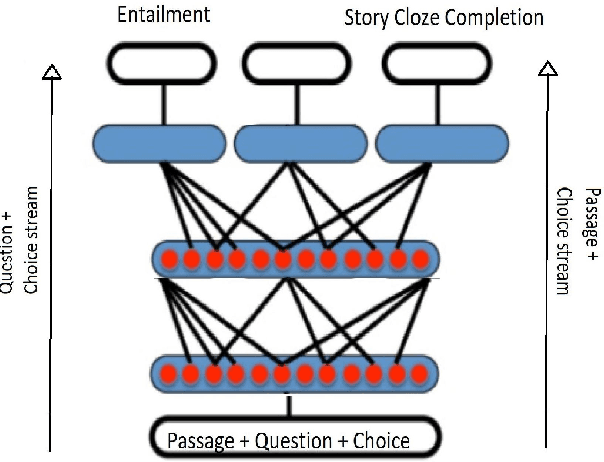

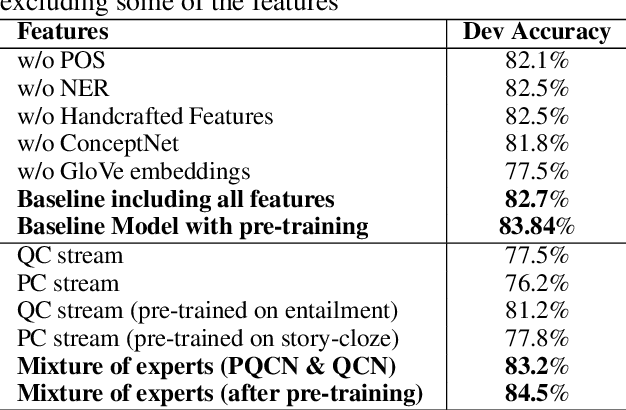

Abstract: In this work we present a Mixture of Task-Aware Experts Network for Machine Reading Comprehension on a relatively small dataset. We focus in particular on common-sense learning, enforcing common ground knowledge by training different expert networks to capture different kinds of relationships within each passage, question and choice triplet. Moreover, we take inspiration from recent advances in multitask and transfer learning by training each network on a relevant, focused task. By making the mixture of networks aware of a specific goal, enforcing both a task and a relationship, we achieve state-of-the-art results and reduce over-fitting.
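The abstract does not spell out how the expert outputs are combined, so the following is only a minimal sketch of a generic softmax-gated mixture of experts that scores answer choices. The feature vectors, the linear experts, the linear gate, and every name here (`mixture_scores`, `expert_weights`, `gate_weights`) are illustrative assumptions, not the paper's architecture, and the task-specific training of each expert is not shown.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def mixture_scores(features, expert_weights, gate_weights):
    """Return a probability distribution over answer choices.

    features:       one vector per choice (assumed to encode the
                    passage, question and choice triplet).
    expert_weights: one weight vector per (hypothetical) linear expert.
    gate_weights:   one weight vector per expert for the gate.
    """
    choice_logits = []
    for x in features:
        # The gate decides how much each expert contributes for this input.
        gate = softmax([dot(g, x) for g in gate_weights])
        # Each expert produces its own scalar score for the choice.
        expert_out = [dot(w, x) for w in expert_weights]
        # Mixture: gate-weighted sum of the expert scores.
        choice_logits.append(sum(g * e for g, e in zip(gate, expert_out)))
    # Normalize across the candidate choices.
    return softmax(choice_logits)


random.seed(0)
DIM, N_EXPERTS, N_CHOICES = 4, 3, 2


def rand_vec():
    return [random.uniform(-1.0, 1.0) for _ in range(DIM)]


features = [rand_vec() for _ in range(N_CHOICES)]
expert_weights = [rand_vec() for _ in range(N_EXPERTS)]
gate_weights = [rand_vec() for _ in range(N_EXPERTS)]

probs = mixture_scores(features, expert_weights, gate_weights)
print(probs)
```

In a task-aware variant along the lines the abstract describes, each expert would presumably also be trained with its own auxiliary objective; here the weights are random and serve only to show the data flow.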
