G. Zhang

Michigan Engineering Services

Temporal clustering network for self-diagnosing faults from vibration measurements

Jun 16, 2020

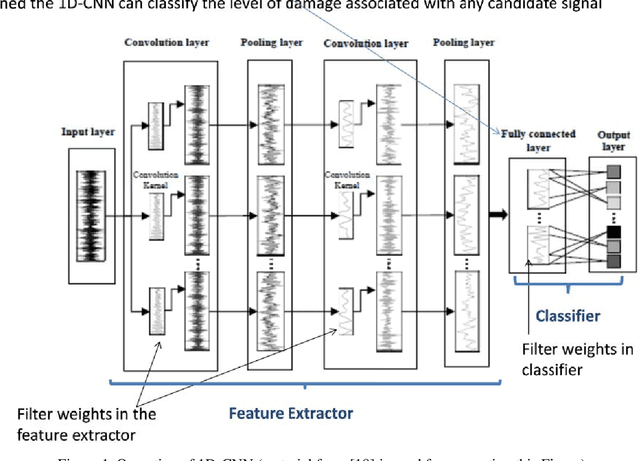

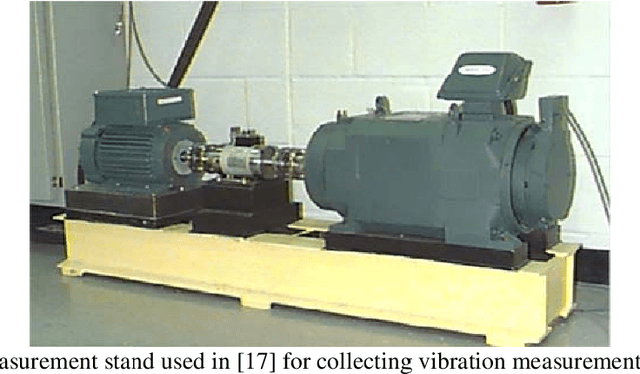

Abstract: There is a need to build intelligence into operating machinery and to apply data analysis to monitored signals in order to quantify the health of the operating system and self-diagnose the initiation of any fault. Built-in control procedures can then automatically take corrective action to avoid catastrophic failure when a fault is diagnosed. This paper presents a Temporal Clustering Network (TCN) capability for processing acceleration measurements made on the operating system (e.g., machinery foundation, machinery casing), or any other type of temporal signal, and for determining from the monitored signal when a fault is at its onset. The new capability uses: one-dimensional convolutional neural networks (1D-CNN) for processing the measurements; unsupervised learning (i.e., no labeled signals from the different operating conditions and no signals at pristine vs. damaged conditions are necessary for training the 1D-CNN); clustering (i.e., grouping signals into clusters reflective of the operating conditions); and statistical analysis for identifying fault signals that are not members of any of the clusters associated with the pristine operating conditions. A case study demonstrating its operation is included in the paper. Finally, topics for further research are identified.
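The clustering and statistical steps named in the abstract above can be illustrated with a minimal sketch. It is not the paper's implementation: it assumes feature vectors have already been extracted from the signals (for instance by a 1D-CNN), uses plain k-means as a stand-in for the clustering stage, and flags a signal as a fault when its distance to the nearest pristine cluster exceeds the mean plus `n_sigma` standard deviations of the training distances. The function names, the k-means choice, and the threshold rule are all assumptions made for illustration.

```python
import numpy as np

def kmeans(features, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means (a stand-in for the paper's clustering stage)."""
    rng = np.random.default_rng(seed)
    # Start from k distinct training points chosen at random.
    centroids = features[rng.choice(len(features), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = features[labels == j]
            if len(members) > 0:  # keep the old centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    return centroids

def nearest_distance(features, centroids):
    """Distance from each feature vector to its closest centroid."""
    dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
    return dists.min(axis=1)

def fit_threshold(features, centroids, n_sigma=3.0):
    """Hypothetical rule: mean + n_sigma * std of the pristine distances."""
    d = nearest_distance(features, centroids)
    return d.mean() + n_sigma * d.std()

def is_fault(new_features, centroids, threshold):
    """True where a signal belongs to none of the pristine clusters."""
    return nearest_distance(new_features, centroids) > threshold

# Synthetic stand-in for features of two pristine operating conditions.
rng = np.random.default_rng(1)
pristine = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.3, size=(200, 2)),
    rng.normal(loc=[5.0, 5.0], scale=0.3, size=(200, 2)),
])
centroids = kmeans(pristine, k=2)
threshold = fit_threshold(pristine, centroids)
flags = is_fault(np.array([[0.0, 0.0], [20.0, -20.0]]), centroids, threshold)
```

In this toy setup the first query point sits at the center of a pristine cluster and the second lies far from both, so only the second should be flagged. A real deployment would need the threshold rule validated against measured data.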

A Robust Deep Learning Approach for Automatic Seizure Detection

Dec 17, 2018

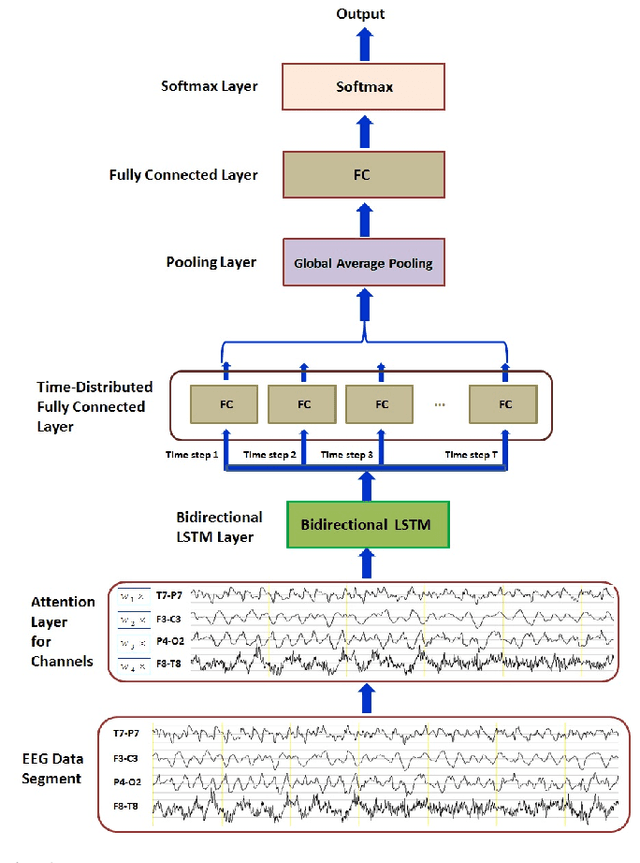

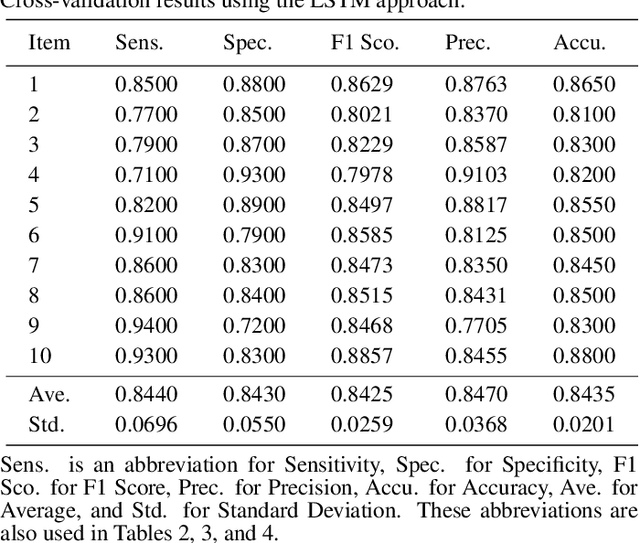

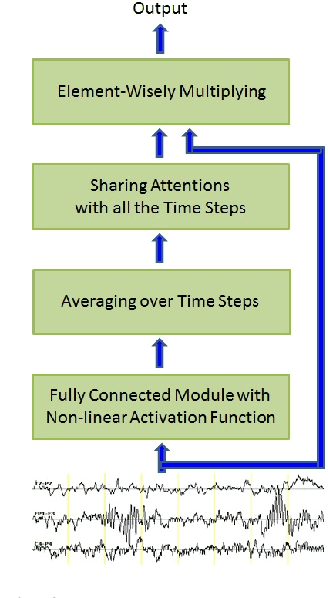

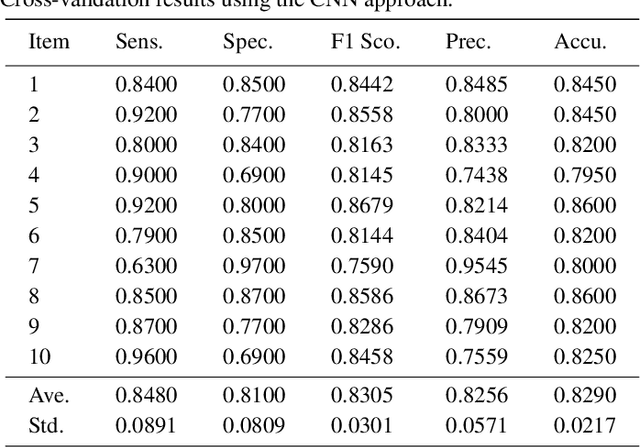

Abstract: Detecting epileptic seizures through analysis of the electroencephalography (EEG) signal has become a standard method for the diagnosis of epilepsy. Manual monitoring of long-term EEG is tedious and error-prone, so a reliable automatic seizure detection method is desirable. A critical challenge for automatic seizure detection is that seizure morphologies exhibit considerable variability. To capture essential seizure patterns, this paper leverages an attention mechanism and a bidirectional long short-term memory (BiLSTM) model to exploit both spatially and temporally discriminating features and to account for seizure variability. The attention mechanism captures spatial features more effectively according to the contributions of brain areas to seizures. The BiLSTM model extracts more discriminating temporal features in the forward and backward directions. By accounting for both spatial and temporal variations of seizures, the proposed method is more robust across subjects. Test results on the noisy real data of CHB-MIT show that the proposed method outperforms the current state-of-the-art methods. In both mixing-patients and cross-patient experiments, the average sensitivity and specificity are both higher, and their corresponding standard deviations lower, than those of the methods in comparison.
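The two ingredients described in the abstract above, attention over EEG channels and a bidirectional LSTM over time, can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the attention score used here (a scoring vector `w` times each channel's mean absolute amplitude) is hypothetical, the weights are random rather than trained, and the paper's actual architecture may differ in every detail.

```python
import numpy as np

def softmax(z):
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(x, w):
    """x: (time, channels). w: (channels,) hypothetical scoring vector.
    Returns the reweighted signal and the attention weights."""
    activity = np.abs(x).mean(axis=0)  # one summary value per channel
    weights = softmax(w * activity)    # non-negative, sums to 1
    return x * weights, weights

def init_lstm(n_in, n_hidden, rng):
    """Random (untrained) LSTM parameters: input, recurrent, bias."""
    return (rng.normal(0.0, 0.1, (4 * n_hidden, n_in)),
            rng.normal(0.0, 0.1, (4 * n_hidden, n_hidden)),
            np.zeros(4 * n_hidden))

def lstm_pass(x, W, U, b):
    """Run a single LSTM layer over x: (time, n_in) -> (time, n_hidden)."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    outputs = []
    for x_t in x:
        z = W @ x_t + U @ h + b
        i, f, o = sigmoid(z[:H]), sigmoid(z[H:2 * H]), sigmoid(z[2 * H:3 * H])
        g = np.tanh(z[3 * H:])
        c = f * c + i * g        # update the cell state
        h = o * np.tanh(c)       # update the hidden state
        outputs.append(h)
    return np.stack(outputs)

def bilstm(x, fwd_params, bwd_params):
    """Concatenate a forward pass with a time-reversed backward pass."""
    h_fwd = lstm_pass(x, *fwd_params)
    h_bwd = lstm_pass(x[::-1], *bwd_params)[::-1]  # re-align to forward time
    return np.concatenate([h_fwd, h_bwd], axis=1)

rng = np.random.default_rng(0)
eeg = rng.normal(size=(16, 4))  # toy segment: 16 time steps, 4 channels
attended, weights = channel_attention(eeg, np.ones(4))
features = bilstm(attended, init_lstm(4, 3, rng), init_lstm(4, 3, rng))
```

Each time step ends up with a feature vector that combines context from both directions; a classifier head on top of `features` would then produce the seizure/non-seizure decision.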

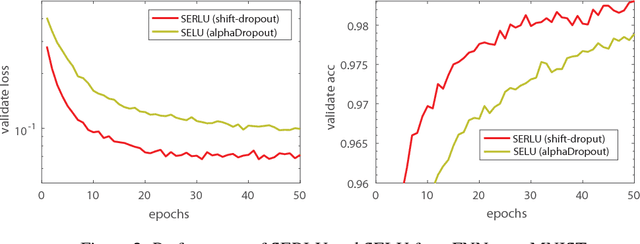

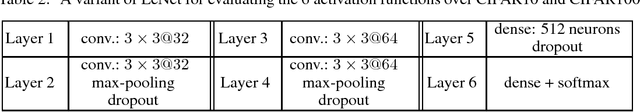

Effectiveness of Scaled Exponentially-Regularized Linear Units (SERLUs)

Jul 27, 2018

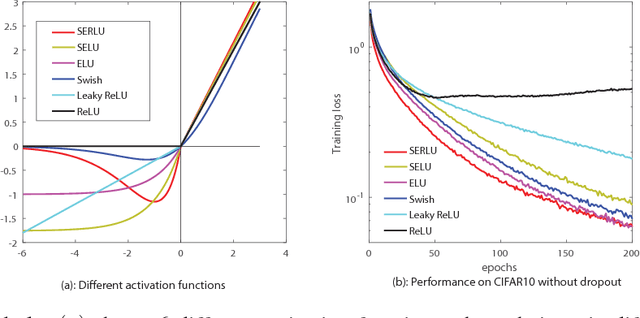

Abstract: Recently, self-normalizing neural networks (SNNs) have been proposed with the intention of avoiding batch or weight normalization. The key step in SNNs is to properly scale the exponential linear unit (referred to as SELU) so that it inherently incorporates normalization based on central limit theory. SELU is a monotonically increasing function with an approximately constant negative output for large negative input. In this work, we propose a new activation function that breaks the monotonicity property of SELU while still preserving the self-normalizing property. Unlike SELU, the new function introduces a bump-shaped function in the region of negative input by regularizing a linear function with a scaled exponential function; it is referred to as a scaled exponentially-regularized linear unit (SERLU). The bump-shaped function has approximately zero response to large negative input while still being able to push the output of SERLU towards zero mean statistically. To effectively combat over-fitting, we develop a so-called shift-dropout for SERLU, which includes standard dropout as a special case. Experimental results on MNIST, CIFAR10 and CIFAR100 show that SERLU-based neural networks provide consistently promising results in comparison to five other activation functions: ELU, SELU, Swish, Leaky ReLU and ReLU.
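As a rough illustration of the activation described above, the sketch below assumes that "regularizing a linear function with a scaled exponential function" means the negative branch has the form λ·α·x·eˣ, with the positive branch λ·x. The default values of `lam` and `alpha` are assumptions and should be checked against the paper before use.

```python
import numpy as np

# Assumed constants; verify against the SERLU paper before relying on them.
LAMBDA = 1.07862
ALPHA = 2.90427

def serlu(x, lam=LAMBDA, alpha=ALPHA):
    """Assumed SERLU form: lam * x for x >= 0, lam * alpha * x * exp(x) otherwise."""
    x = np.asarray(x, dtype=float)
    # Clip the exponent at 0 so the branch discarded by np.where cannot overflow.
    negative_branch = lam * alpha * x * np.exp(np.minimum(x, 0.0))
    return np.where(x >= 0, lam * x, negative_branch)
```

Under this assumed form, x·eˣ has its minimum at x = -1, so the function dips to a trough there and returns towards zero for large negative input. That gives the bump shape and the non-monotonic behavior the abstract contrasts with SELU.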
