G. N. DeSouza

Quantum Error Mitigation with Attention Graph Transformers for Burgers Equation Solvers on NISQ Hardware

Dec 29, 2025

Abstract:We present a hybrid quantum-classical framework augmented with learned error mitigation for solving the viscous Burgers equation on noisy intermediate-scale quantum (NISQ) hardware. Using the Cole-Hopf transformation, the nonlinear Burgers equation is mapped to a diffusion equation, discretized on uniform grids, and encoded into a quantum state whose time evolution is approximated via Trotterized nearest-neighbor circuits implemented in Qiskit. Quantum simulations are executed on noisy Aer backends and IBM superconducting quantum devices and are benchmarked against high-accuracy classical solutions obtained using a Krylov-based solver applied to the corresponding discretized Hamiltonian. From measured quantum amplitudes, we reconstruct the velocity field and evaluate physical and numerical diagnostics, including the L2 error, shock location, and dissipation rate, both with and without zero-noise extrapolation (ZNE). To enable data-driven error mitigation, we construct a large parametric dataset by sweeping viscosity, time step, grid resolution, and boundary conditions, producing matched tuples of noisy, ZNE-corrected, hardware, and classical solutions together with detailed circuit metadata. Leveraging this dataset, we train an attention-based graph neural network that incorporates circuit structure, light-cone information, global circuit parameters, and noisy quantum outputs to predict error-mitigated solutions. Across a wide range of parameters, the learned model consistently reduces the discrepancy between quantum and classical solutions beyond what is achieved by ZNE alone. We discuss extensions of this approach to higher-dimensional Burgers systems and more general quantum partial differential equation solvers, highlighting learned error mitigation as a promising complement to physics-based noise reduction techniques on NISQ devices.
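The Cole-Hopf step mentioned in the abstract can be illustrated with a small classical sketch. For the viscous Burgers equation u_t + u u_x = nu u_xx, the substitution u = -2 nu phi_x / phi turns the problem into the diffusion equation phi_t = nu phi_xx, so a velocity field can be recovered from any positive solution phi. The code below is only an illustration under stated assumptions, not the authors' implementation: the function name `velocity_from_phi`, the periodic grid, the central-difference derivative, and the test profile are all hypothetical choices made here.

```python
import math


def velocity_from_phi(phi, dx, nu):
    """Inverse Cole-Hopf map u = -2*nu*phi_x/phi on a periodic uniform grid.

    Assumption: phi is strictly positive and sampled on a periodic grid;
    the derivative uses a second-order central difference.
    """
    n = len(phi)
    u = []
    for i in range(n):
        # phi[i - 1] wraps around at i == 0, (i + 1) % n wraps at the end.
        dphi_dx = (phi[(i + 1) % n] - phi[i - 1]) / (2.0 * dx)
        u.append(-2.0 * nu * dphi_dx / phi[i])
    return u


# Hypothetical test case: phi = 1 + a*exp(-nu*t)*cos(x) solves phi_t = nu*phi_xx.
nu, a, t, n = 0.1, 0.5, 0.5, 256
dx = 2.0 * math.pi / n
x = [i * dx for i in range(n)]
decay = math.exp(-nu * t)
phi = [1.0 + a * decay * math.cos(xi) for xi in x]

u = velocity_from_phi(phi, dx, nu)

# Closed-form velocity for the same phi, used to check the discrete map.
u_exact = [2.0 * nu * a * decay * math.sin(xi) / p for xi, p in zip(x, phi)]
max_err = max(abs(ui - ue) for ui, ue in zip(u, u_exact))
```

Here `max_err` measures only the finite-difference error of the reconstruction; it says nothing about quantum noise, which is what the ZNE and learned mitigation steps described in the abstract address.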

EGSA-PT: Edge-Guided Spatial Attention with Progressive Training for Monocular Depth Estimation and Segmentation of Transparent Objects

Nov 18, 2025

Abstract:Transparent object perception remains a major challenge in computer vision research, as transparency confounds both depth estimation and semantic segmentation. Recent work has explored multi-task learning frameworks to improve robustness, yet negative cross-task interactions often hinder performance. In this work, we introduce Edge-Guided Spatial Attention (EGSA), a fusion mechanism designed to mitigate destructive interactions by incorporating boundary information into the fusion between semantic and geometric features. On both Syn-TODD and ClearPose benchmarks, EGSA consistently improved depth accuracy over the current state-of-the-art method (MODEST), while preserving competitive segmentation performance, with the largest improvements appearing in transparent regions. Besides our fusion design, our second contribution is a multi-modal progressive training strategy, where learning transitions from edges derived from RGB images to edges derived from predicted depth images. This approach allows the system to bootstrap learning from the rich textures contained in RGB images and then switch to more relevant geometric content in depth maps, while eliminating the need for ground-truth depth at training time. Together, these contributions highlight edge-guided fusion as a robust approach capable of improving transparent object perception.

Weakly Supervised Ephemeral Gully Detection In Remote Sensing Images Using Vision Language Models

Nov 17, 2025

Abstract:Among soil erosion problems, ephemeral gullies are one of the most concerning phenomena occurring in agricultural fields. Their short temporal cycles increase the difficulty of detecting them automatically using classical computer vision approaches and remote sensing. Also, due to the scarcity of accurate labeled data and the difficulty of producing it, automatic detection of ephemeral gullies using machine learning is limited to zero-shot approaches, which are hard to implement. To overcome these challenges, we present the first weakly supervised pipeline for detection of ephemeral gullies. Our method relies on remote sensing and uses Vision Language Models (VLMs) to drastically reduce the labor-intensive task of manual labeling. To achieve that, the method exploits: 1) the knowledge embedded in the VLM's pretraining; 2) a teacher-student model where the teacher learns from noisy labels coming from the VLMs, and the student learns by weak supervision using teacher-generated labels and a noise-aware loss function. We also make available the first-of-its-kind dataset for semi-supervised detection of ephemeral gullies from remote-sensed images. The dataset consists of a number of locations labeled by a group of soil and plant scientists, as well as a large number of unlabeled locations. The dataset represents more than 18,000 high-resolution remote-sensing images obtained over the course of 13 years. Our experimental results demonstrate the validity of our approach by showing superior performance compared to VLMs and the label model itself when using weak supervision to train a student model. The code and dataset for this work are made publicly available.

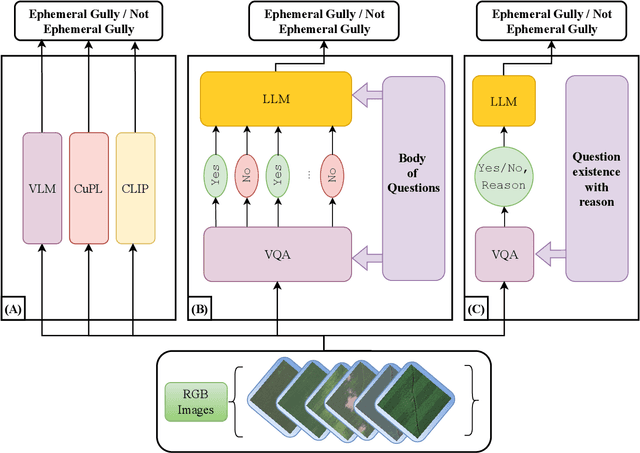

A Zero-Shot Learning Approach for Ephemeral Gully Detection from Remote Sensing using Vision Language Models

Mar 03, 2025

Abstract:Ephemeral gullies are a primary cause of soil erosion and their reliable, accurate, and early detection will facilitate significant improvements in the sustainability of global agricultural systems. In our view, prior research has not successfully addressed automated detection of ephemeral gullies from remotely sensed images, so for the first time, we present and evaluate three successful pipelines for ephemeral gully detection. Our pipelines utilize remotely sensed images, acquired from specific agricultural areas over a period of time. The pipelines were tested with various choices of Vision Language Models (VLMs), and they classified the images based on the presence of ephemeral gullies with accuracy higher than 70% and an F1-score close to 80% for positive gully detection. Additionally, we developed the first public dataset for ephemeral gully detection, labeled by a team of soil- and plant-science experts. To evaluate the proposed pipelines, we employed a variety of zero-shot classification methods based on State-of-the-Art (SOTA) open-source VLMs. In addition, we compare the same pipelines with a transfer learning approach. Extensive experiments were conducted to validate the detection pipelines and to analyze the impact of hyperparameter changes on their performance. The experimental results demonstrate that the proposed zero-shot classification pipelines are highly effective in detecting ephemeral gullies in a scenario where classification datasets are scarce.
