Fuyao Zhang

Oblivionis: A Lightweight Learning and Unlearning Framework for Federated Large Language Models

Aug 12, 2025

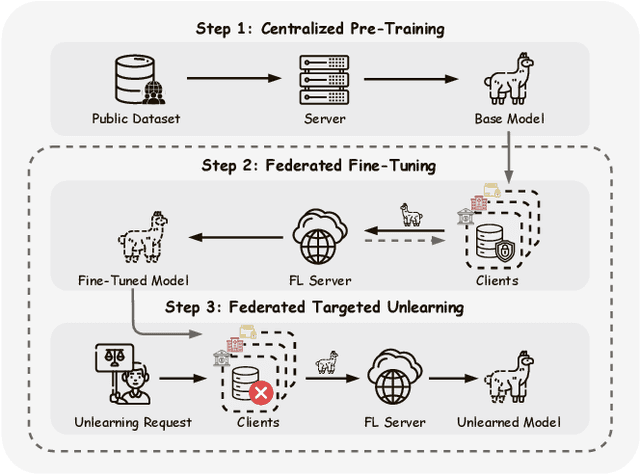

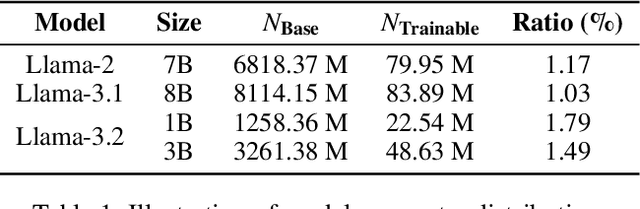

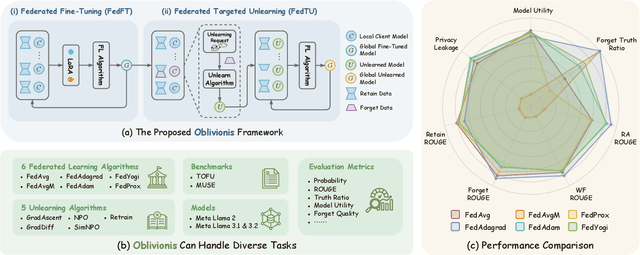

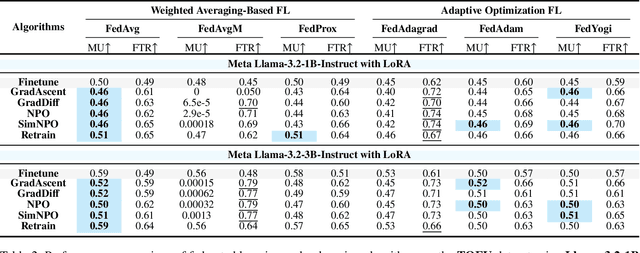

Abstract: Large Language Models (LLMs) increasingly leverage Federated Learning (FL) to utilize private, task-specific datasets for fine-tuning while preserving data privacy. However, while federated LLM frameworks effectively enable collaborative training without raw data sharing, they critically lack built-in mechanisms for regulatory compliance like GDPR's right to be forgotten. Integrating private data heightens concerns over data quality and long-term governance, yet existing distributed training frameworks offer no principled way to selectively remove specific client contributions post-training. Due to distributed data silos, stringent privacy constraints, and the intricacies of interdependent model aggregation, federated LLM unlearning is significantly more complex than centralized LLM unlearning. To address this gap, we introduce Oblivionis, a lightweight learning and unlearning framework that enables clients to selectively remove specific private data during federated LLM training, enhancing trustworthiness and regulatory compliance. By unifying FL and unlearning as a dual optimization objective, we incorporate 6 FL and 5 unlearning algorithms for comprehensive evaluation and comparative analysis, establishing a robust pipeline for federated LLM unlearning. Extensive experiments demonstrate that Oblivionis outperforms local training, achieving a robust balance between forgetting efficacy and model utility, with cross-algorithm comparisons providing clear directions for future LLM development.

Object Detection in the Context of Mobile Augmented Reality

Aug 15, 2020

Abstract: In the past few years, numerous Deep Neural Network (DNN) models and frameworks have been developed to tackle the problem of real-time object detection from RGB images. Ordinary object detection approaches process information from the images only, and they are oblivious to the camera pose with regard to the environment and the scale of the environment. On the other hand, mobile Augmented Reality (AR) frameworks can continuously track a camera's pose within the scene and can estimate the correct scale of the environment by using Visual-Inertial Odometry (VIO). In this paper, we propose a novel approach that combines the geometric information from VIO with semantic information from object detectors to improve the performance of object detection on mobile devices. Our approach includes three components: (1) an image orientation correction method, (2) a scale-based filtering approach, and (3) an online semantic map. Each component takes advantage of the different characteristics of the VIO-based AR framework. We implemented the AR-enhanced features using ARCore and the SSD Mobilenet model on Android phones. To validate our approach, we manually labeled objects in image sequences taken from 12 room-scale AR sessions. The results show that our approach can improve on the accuracy of generic object detectors by 12% on our dataset.
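
To make the idea of scale-based filtering concrete, here is a minimal Python sketch. It is not the paper's implementation: the abstract gives no details, so the sketch assumes a simple pinhole camera model (physical height ≈ pixel height × depth / focal length) and a hypothetical per-class table of plausible object heights. The names `Detection`, `EXPECTED_HEIGHT_M`, `estimated_height_m`, and `scale_filter` are all illustrative, and the depth value is assumed to come from the AR framework's metric-scale tracking.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str            # class name reported by the object detector
    score: float          # detector confidence
    box_height_px: float  # bounding-box height in pixels
    depth_m: float        # distance to the object in metres (assumed to come from AR tracking)


# Hypothetical plausible height ranges in metres; not taken from the paper.
EXPECTED_HEIGHT_M = {
    "chair": (0.4, 1.3),
    "cup": (0.05, 0.25),
}


def estimated_height_m(det: Detection, focal_length_px: float) -> float:
    """Pinhole-model estimate of physical height from pixel height and depth."""
    return det.box_height_px * det.depth_m / focal_length_px


def scale_filter(detections, focal_length_px: float):
    """Drop detections whose estimated physical size is implausible for their class."""
    kept = []
    for det in detections:
        bounds = EXPECTED_HEIGHT_M.get(det.label)
        if bounds is None:
            # No size prior for this class: keep the detection unchanged.
            kept.append(det)
            continue
        low, high = bounds
        if low <= estimated_height_m(det, focal_length_px) <= high:
            kept.append(det)
    return kept
```

With a focal length of 1000 px, a 400 px tall box at 2 m corresponds to roughly 0.8 m, which this sketch would accept for a "chair" but reject for a "cup".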
