Fred Spiessens

Combined Peak Reduction and Self-Consumption Using Proximal Policy Optimization

Nov 27, 2022

Abstract: Residential demand response programs aim to activate demand flexibility at the household level. In recent years, reinforcement learning (RL) has gained significant attention for this type of application. A major challenge of RL algorithms is data efficiency. New RL algorithms, such as proximal policy optimisation (PPO), have tried to increase data efficiency. Additionally, combining RL with transfer learning has been proposed in an effort to mitigate this challenge. In this work, we further improve upon state-of-the-art transfer learning performance by incorporating demand response domain knowledge into the learning pipeline. We evaluate our approach on a demand response use case where peak shaving and self-consumption are incentivised by means of a capacity tariff. We show that our adapted version of PPO, combined with transfer learning, reduces cost by 14.51% compared to a regular hysteresis controller and by 6.68% compared to traditional PPO.

Direct Load Control of Thermostatically Controlled Loads Based on Sparse Observations Using Deep Reinforcement Learning

Jul 26, 2017

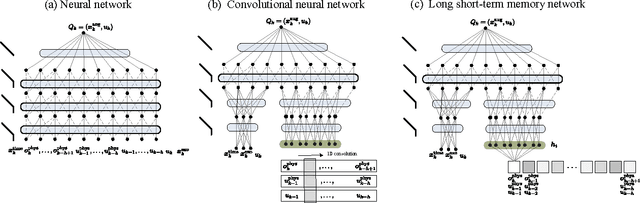

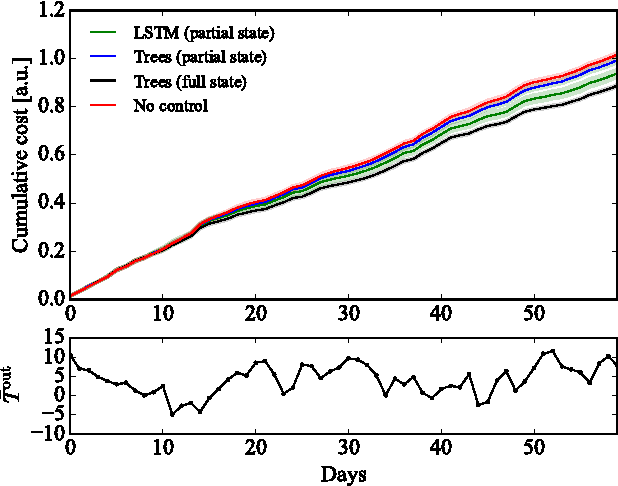

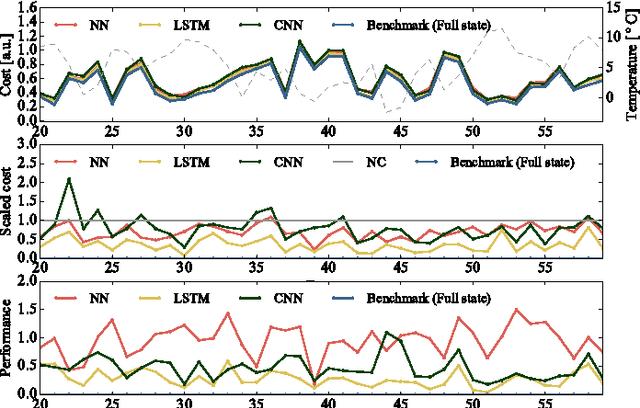

Abstract: This paper considers a demand response agent that must find a near-optimal sequence of decisions based on sparse observations of its environment. Extracting a relevant set of features from these observations is a challenging task and may require substantial domain knowledge. One way to tackle this problem is to store sequences of past observations and actions in the state vector, making it high-dimensional, and to apply techniques from deep learning. This paper investigates the capabilities of different deep learning techniques, such as convolutional neural networks and recurrent neural networks, to extract relevant features for finding near-optimal policies for a residential heating system and an electric water heater that are hindered by sparse observations. Our simulation results indicate that, in this specific scenario, feeding sequences of time series to an LSTM network, which is a specific type of recurrent neural network, achieved higher performance than stacking these time series in the input of a convolutional neural network or deep neural network.

Using Reinforcement Learning for Demand Response of Domestic Hot Water Buffers: a Real-Life Demonstration

Mar 16, 2017

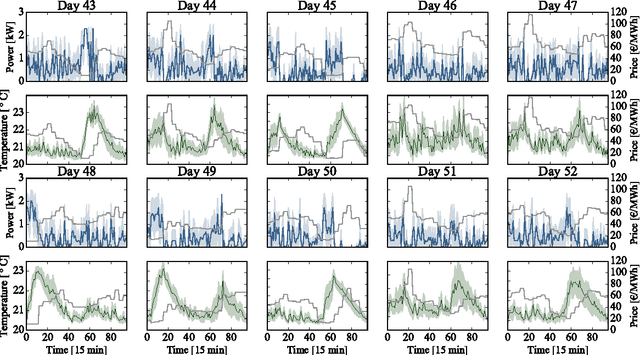

Abstract: This paper demonstrates a data-driven control approach for demand response in real-life residential buildings. The objective is to optimally schedule the heating cycles of the Domestic Hot Water (DHW) buffer to maximize the self-consumption of the local photovoltaic (PV) production. A model-based reinforcement learning technique is used to tackle the underlying sequential decision-making problem. The proposed algorithm learns the stochastic occupant behavior, predicts the PV production and takes into account the dynamics of the system. A real-life experiment with six residential buildings is performed using this algorithm. The results show that the self-consumption of the PV production is significantly increased, compared to the default thermostat control.
