François Waldner

Looking for change? Roll the Dice and demand Attention

Sep 04, 2020

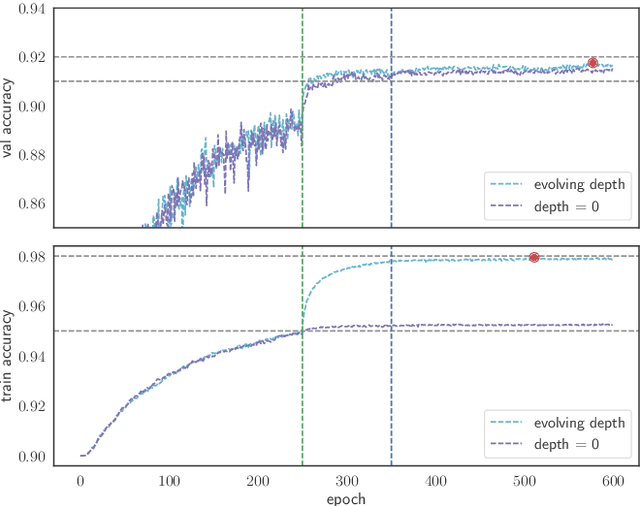

Abstract: Change detection, i.e. the per-pixel identification of changes for some classes of interest from a set of bi-temporal co-registered images, is a fundamental task in the field of remote sensing. It remains challenging due to unrelated forms of change that appear at different times in input images. These are changes due to different environmental conditions or simply changes of objects that are not of interest. Here, we propose a reliable deep learning framework for the task of semantic change detection in very high-resolution aerial images. Our framework consists of a new loss function, new attention modules, new feature extraction building blocks, and a new backbone architecture that is tailored for the task of semantic change detection. Specifically, we define a new form of set similarity that is based on an iterative evaluation of a variant of the Dice coefficient. We use this similarity metric to define a new loss function as well as a new spatial and channel convolution Attention layer (the FracTAL). The new attention layer, designed specifically for vision tasks, is memory efficient and thus suitable for use at all levels of deep convolutional networks. Based on these, we introduce two new efficient self-contained feature extraction convolution units. We term these units CEECNet and FracTAL ResNet units. We validate the performance of these feature extraction building blocks on the CIFAR10 reference data and compare the results with standard ResNet modules. Further, we introduce a new encoder/decoder scheme, a network macro-topology, that is tailored for the task of change detection. We validate our approach by showing excellent performance and achieving state-of-the-art scores (F1 and Intersection over Union, hereafter IoU) on two building change detection datasets, namely the LEVIRCD (F1: 0.918, IoU: 0.848) and the WHU (F1: 0.938, IoU: 0.882) datasets.
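For readers unfamiliar with the two scores quoted above, the following is a minimal sketch of the standard Dice coefficient (which equals F1 for binary masks) and IoU. It is an illustration only: the function name `dice_and_iou` and the flat 0/1 mask representation are assumptions made here, and the code does not reproduce the paper's iterative Dice variant or the FracTAL layer.

```python
def dice_and_iou(pred, truth):
    """Return (dice, iou) for two equal-length sequences of 0/1 labels.

    Hypothetical helper for illustration; masks are flattened to 1-D.
    """
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")

    # Pixels marked as change in both masks (true positives).
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    pred_positive = sum(1 for p in pred if p)
    truth_positive = sum(1 for t in truth if t)
    union = pred_positive + truth_positive - intersection

    # Convention chosen here: two empty masks agree perfectly.
    if union == 0:
        return 1.0, 1.0

    dice = 2 * intersection / (pred_positive + truth_positive)
    iou = intersection / union
    return dice, iou


if __name__ == "__main__":
    predicted = [1, 1, 0, 0, 1, 0]
    reference = [1, 0, 0, 0, 1, 1]
    dice, iou = dice_and_iou(predicted, reference)
    print(f"Dice/F1 = {dice:.3f}, IoU = {iou:.3f}")
```

When both scores are computed on the same set of pixels they are linked by Dice = 2·IoU / (1 + IoU), so either one determines the other; scores averaged over images or classes need not satisfy this identity exactly.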

Deep learning on edge: extracting field boundaries from satellite images with a convolutional neural network

Oct 26, 2019

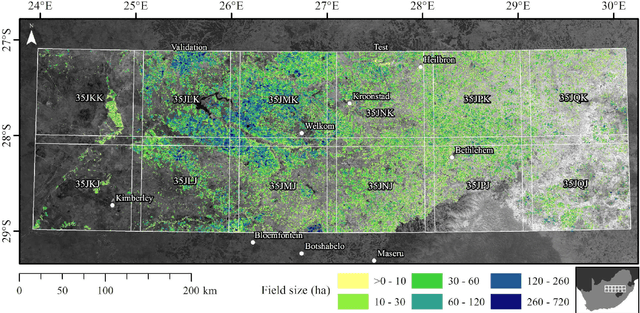

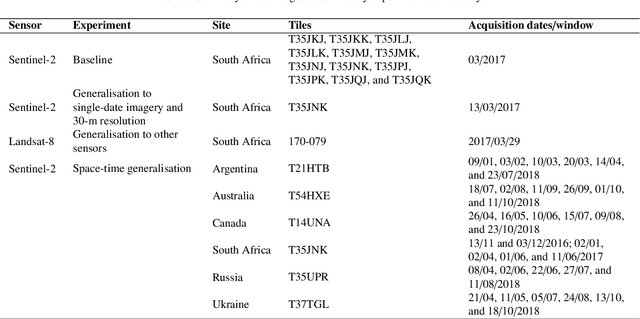

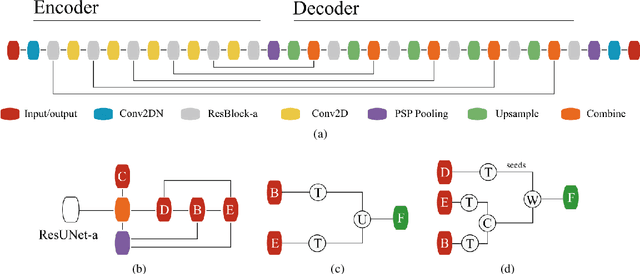

Abstract: Applications of digital agricultural services often require either farmers or their advisers to provide digital records of their field boundaries. Automatic extraction of field boundaries from satellite imagery would reduce the reliance on manual input of these records, which is time-consuming and error-prone, and would underpin the provision of remote products and services. The lack of current field boundary data sets seems to indicate low uptake of existing methods, presumably because of expensive image preprocessing requirements and local, often arbitrary, tuning. In this paper, we address the problem of field boundary extraction from satellite images as a multitask semantic segmentation problem. We used ResUNet-a, a deep convolutional neural network with a fully connected UNet backbone that features dilated convolutions and conditioned inference, to assign three labels to each pixel: 1) the probability of belonging to a field; 2) the probability of being part of a boundary; and 3) the distance to the closest boundary. These labels can then be combined to obtain closed field boundaries. Using a single composite image from Sentinel-2, the model was highly accurate in mapping field extent, field boundaries, and, consequently, individual fields. Replacing the monthly composite with a single-date image close to the compositing period only marginally decreased accuracy. We then showed in a series of experiments that our model generalised well across resolutions, sensors, space and time without recalibration. Building consensus by averaging model predictions from at least four images acquired across the season is the key to coping with the temporal variations of accuracy. By minimising image preprocessing requirements and replacing local arbitrary decisions with data-driven ones, our approach is expected to facilitate the extraction of individual crop fields at scale.
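The consensus step mentioned in the abstract, averaging predictions from several acquisition dates, can be sketched roughly as below. This is a hedged illustration rather than the authors' implementation: the function name `consensus`, the flat list-of-probabilities layout, and the 0.5 decision threshold are all assumptions introduced here.

```python
def consensus(prob_maps, threshold=0.5):
    """Average per-pixel probabilities over dates, then threshold.

    prob_maps: list of equal-length lists, one list per acquisition date.
    threshold: assumed cut-off for labelling a pixel as "field".
    Returns (mean_probabilities, binary_labels).
    """
    if not prob_maps:
        raise ValueError("at least one probability map is required")
    n_pixels = len(prob_maps[0])
    if any(len(m) != n_pixels for m in prob_maps):
        raise ValueError("all maps must cover the same pixels")

    n_dates = len(prob_maps)
    # zip(*prob_maps) groups the predictions for each pixel across dates.
    mean = [sum(values) / n_dates for values in zip(*prob_maps)]
    labels = [m >= threshold for m in mean]
    return mean, labels


if __name__ == "__main__":
    # Four dates, three pixels; the values are made up for the example.
    maps = [
        [0.9, 0.2, 0.6],
        [0.8, 0.1, 0.3],
        [0.7, 0.4, 0.7],
        [1.0, 0.1, 0.2],
    ]
    mean, labels = consensus(maps)
    print(mean, labels)
```

Averaging before thresholding lets a confident prediction on one date offset an uncertain one on another, which is the intuition behind using several images across the season.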

ResUNet-a: a deep learning framework for semantic segmentation of remotely sensed data

Apr 01, 2019

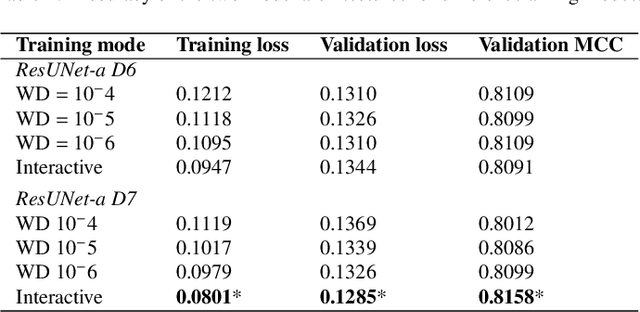

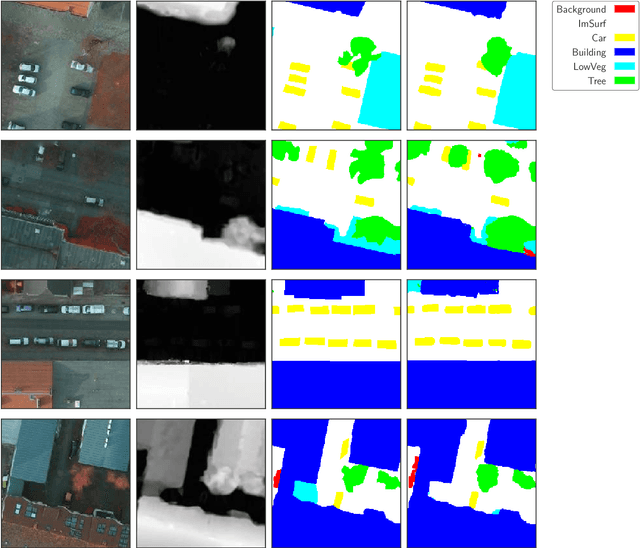

Abstract: Scene understanding of high-resolution aerial images is of great importance for the task of automated monitoring in various remote sensing applications. Due to the large within-class and small between-class variance in pixel values of objects of interest, this remains a challenging task. In recent years, deep convolutional neural networks have started being used in remote sensing applications and demonstrate state-of-the-art performance for pixel-level classification of objects. Here we present a novel deep learning architecture, ResUNet-a, that combines ideas from various state-of-the-art modules used in computer vision for semantic segmentation tasks. We analyse the performance of several flavours of the Generalized Dice loss for semantic segmentation, and we introduce a novel variant loss function for semantic segmentation of objects that has better convergence properties and behaves well even in the presence of highly imbalanced classes. The performance of our modelling framework is evaluated on the ISPRS 2D Potsdam dataset. Results show state-of-the-art performance with an average F1 score of 92.1% over all classes for our best model.
