Florian Kennel-Maushart

Manipulability optimization for multi-arm teleoperation

Feb 10, 2021

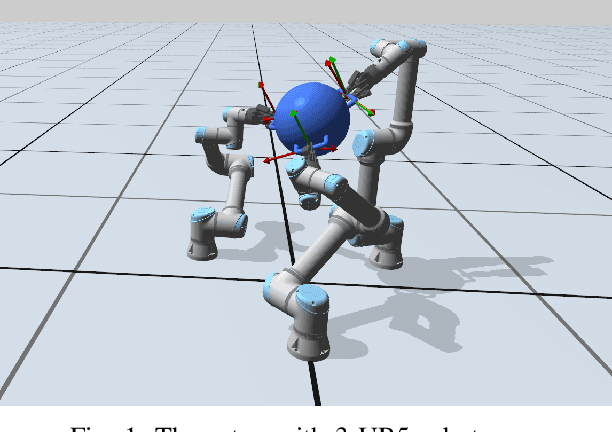

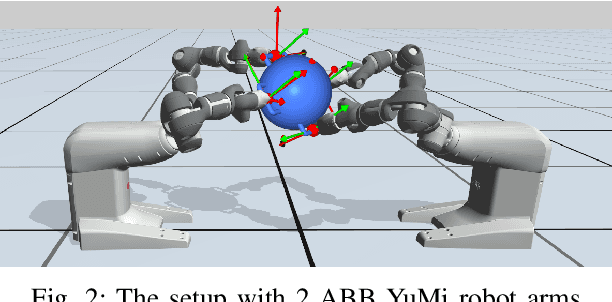

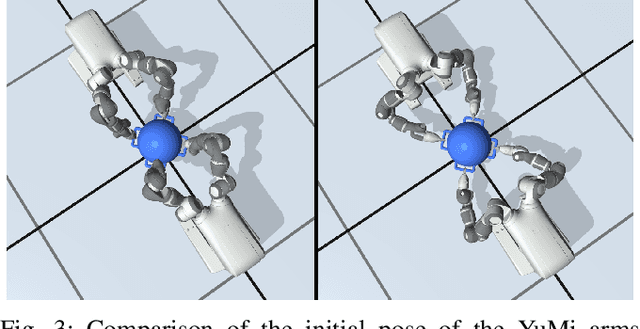

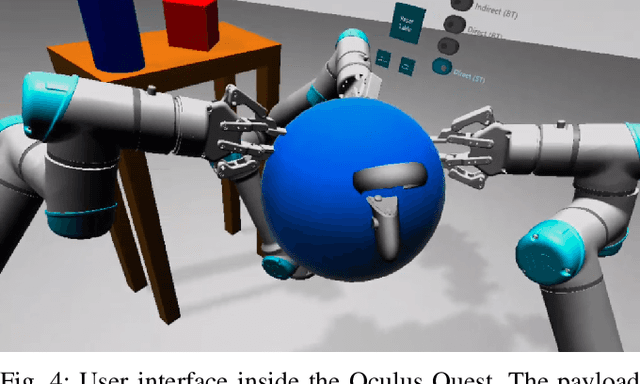

Abstract: Teleoperation provides a way for human operators to guide robots in situations where full autonomy is challenging or where direct human intervention is required. It can also be an important tool for teaching robots so that they can later act autonomously. The increased availability of collaborative robot arms and Virtual Reality (VR) devices provides ample opportunity for the development of novel teleoperation methods. Since robot arms are often kinematically different from human arms, mapping human motions to a robot in real time is not trivial. Additionally, a human operator might steer the robot arm toward singularities or its workspace limits, which can lead to undesirable behaviour. These problems are compounded when multiple robots must be orchestrated. In this paper, we present a VR interface targeted at multi-arm payload manipulation, which can closely match real-time input motion. By allowing the user to manipulate the payload rather than mapping their motions to individual arms, we are able to guide multiple collaborative arms simultaneously. By releasing a single rotational degree of freedom and using a local optimization method, we can improve each arm's manipulability index, which in turn lets us avoid kinematic singularities and workspace limitations. We apply our approach to predefined trajectories as well as real-time teleoperation on different robot arms and compare performance in terms of end-effector position error and relevant joint motion metrics.
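
To make the idea of improving a manipulability index by releasing one rotational degree of freedom more concrete, here is a minimal sketch. It is not the method from the paper. It assumes a hypothetical planar three-joint arm with made-up link lengths, uses Yoshikawa's manipulability measure sqrt(det(J Jᵀ)) as the index, and substitutes a simple finite-difference gradient ascent for the local optimization. The end-effector position is held fixed while its orientation `phi` is treated as the free rotation. All function names and parameters are illustrative assumptions.

```python
import numpy as np

# Hypothetical link lengths in metres (assumption, not taken from the paper).
LINKS = np.array([0.4, 0.3, 0.2])


def jacobian(q):
    """Jacobian of the planar task (x, y, phi) with respect to the three joints."""
    l1, l2, l3 = LINKS
    a1, a2, a3 = q[0], q[0] + q[1], q[0] + q[1] + q[2]
    s1, s2, s3 = np.sin(a1), np.sin(a2), np.sin(a3)
    c1, c2, c3 = np.cos(a1), np.cos(a2), np.cos(a3)
    return np.array([
        [-l1 * s1 - l2 * s2 - l3 * s3, -l2 * s2 - l3 * s3, -l3 * s3],
        [l1 * c1 + l2 * c2 + l3 * c3, l2 * c2 + l3 * c3, l3 * c3],
        [1.0, 1.0, 1.0],
    ])


def manipulability(q):
    """Yoshikawa's measure sqrt(det(J J^T)); it is zero at a kinematic singularity."""
    J = jacobian(q)
    return float(np.sqrt(max(np.linalg.det(J @ J.T), 0.0)))


def inverse_kinematics(x, y, phi):
    """Analytic IK for one elbow branch; returns None if the pose is unreachable."""
    l1, l2, l3 = LINKS
    wx, wy = x - l3 * np.cos(phi), y - l3 * np.sin(phi)  # wrist position
    cos_q2 = (wx**2 + wy**2 - l1**2 - l2**2) / (2.0 * l1 * l2)
    if abs(cos_q2) > 1.0:
        return None
    q2 = np.arccos(cos_q2)
    q1 = np.arctan2(wy, wx) - np.arctan2(l2 * np.sin(q2), l1 + l2 * np.cos(q2))
    return np.array([q1, q2, phi - q1 - q2])


def optimize_free_rotation(x, y, phi0, gain=5.0, iters=200, eps=1e-4):
    """Gradient ascent on the released rotation phi, keeping (x, y) fixed."""

    def score(phi):
        q = inverse_kinematics(x, y, phi)
        return -np.inf if q is None else manipulability(q)

    phi = phi0
    for _ in range(iters):
        grad = (score(phi + eps) - score(phi - eps)) / (2.0 * eps)
        if not np.isfinite(grad):
            break  # left the reachable workspace; keep the last valid phi
        candidate = phi + gain * grad
        if score(candidate) < score(phi):
            gain *= 0.5  # backtrack when a step would reduce manipulability
            continue
        phi = candidate
    return phi, score(phi)


if __name__ == "__main__":
    start = manipulability(inverse_kinematics(0.6, 0.0, 0.3))
    phi_opt, best = optimize_free_rotation(0.6, 0.0, 0.3)
    print(f"manipulability before: {start:.4f}, after: {best:.4f}, phi: {phi_opt:.3f}")
```

For this particular toy arm the measure reduces to l1·l2·|sin q2|, so it vanishes when the first two links are aligned and peaks when the elbow is at a right angle. A real multi-arm system would need a full spatial Jacobian per arm, joint limits, and a coupling between the arms through the shared payload, none of which this sketch models.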
