Florian Heinrichs

Consumer-grade EEG-based Eye Tracking

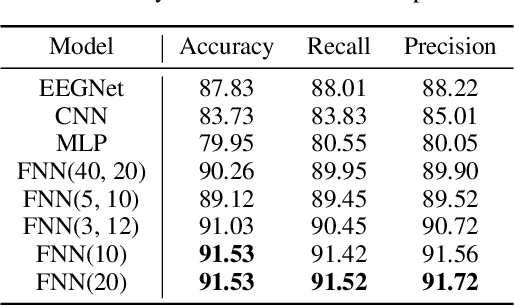

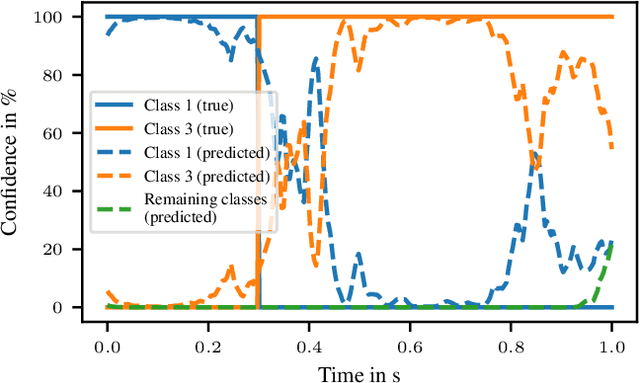

Mar 18, 2025

Abstract:Electroencephalography-based eye tracking (EEG-ET) leverages eye movement artifacts in EEG signals as an alternative to camera-based tracking. While EEG-ET offers advantages such as robustness in low-light conditions and better integration with brain-computer interfaces, its development lags behind traditional methods, particularly in consumer-grade settings. To support research in this area, we present a dataset comprising simultaneous EEG and eye-tracking recordings from 113 participants across 116 sessions, amounting to 11 hours and 45 minutes of recordings. Data was collected using a consumer-grade EEG headset and webcam-based eye tracking, capturing eye movements under four experimental paradigms with varying complexity. The dataset enables the evaluation of EEG-ET methods across different gaze conditions and serves as a benchmark for assessing feasibility with affordable hardware. Data preprocessing includes handling of missing values and filtering to enhance usability. In addition to the dataset, code for data preprocessing and analysis is available to support reproducibility and further research.

OML-AD: Online Machine Learning for Anomaly Detection in Time Series Data

Sep 15, 2024

Abstract:Time series are ubiquitous and occur naturally in a variety of applications, from data recorded by sensors in manufacturing processes to financial data streams and climate data. Different tasks arise, such as regression, classification or segmentation of the time series. However, to reliably solve these tasks, it is important to filter out abnormal observations that deviate from the usual behavior of the time series. While many anomaly detection methods exist for independent data and stationary time series, these methods are not applicable to non-stationary time series. To allow for non-stationarity in the data, while simultaneously detecting anomalies, we propose OML-AD, a novel approach for anomaly detection (AD) based on online machine learning (OML). We provide an implementation of OML-AD within the Python library River and show that it outperforms state-of-the-art baseline methods in terms of accuracy and computational efficiency.
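As a rough illustration of the general idea of anomaly detection with an online model (not the OML-AD implementation from the paper, and not the River API), the sketch below keeps an exponentially weighted running mean and variance, scores each new observation by its standardized deviation from that forecast, and updates the model only on points it considers normal. The class name `OnlineAnomalyDetector` and the parameters `alpha`, `threshold`, and `warmup` are hypothetical choices made for this example.

```python
import math


class OnlineAnomalyDetector:
    """Hypothetical illustration only; not the OML-AD method from the paper."""

    def __init__(self, alpha=0.1, threshold=4.0, warmup=10):
        self.alpha = alpha          # forgetting factor of the running estimates
        self.threshold = threshold  # flag points this many std devs from the mean
        self.warmup = warmup        # observations to see before flagging anything
        self.mean = None
        self.var = 0.0
        self.n = 0

    def score(self, x):
        """Standardized distance of x from the current running mean."""
        if self.mean is None or self.n < self.warmup:
            return 0.0
        std = math.sqrt(self.var)
        return abs(x - self.mean) / std if std > 0.0 else 0.0

    def update(self, x):
        """Incrementally adapt mean and variance, so slow drift is tracked."""
        if self.mean is None:
            self.mean = x
        else:
            diff = x - self.mean
            self.mean += self.alpha * diff
            self.var = (1 - self.alpha) * (self.var + self.alpha * diff * diff)
        self.n += 1

    def process(self, x):
        """Score first, then learn from x only if it was not flagged."""
        is_anomaly = self.score(x) > self.threshold
        if not is_anomaly:
            self.update(x)
        return is_anomaly


def detect(series, **kwargs):
    """Return the indices of observations flagged as anomalous."""
    detector = OnlineAnomalyDetector(**kwargs)
    return [i for i, x in enumerate(series) if detector.process(x)]


# Non-stationary toy series: linear drift plus oscillation, with one spike.
series = [0.01 * i + 0.1 * math.sin(i) for i in range(200)]
series[150] += 5.0
flagged = detect(series)
```

Because the estimates are updated with every normal observation, the slow upward drift in the toy series is absorbed by the model, while the isolated spike stands out against the recent history.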

Detecting 5G Narrowband Jammers with CNN, k-nearest Neighbors, and Support Vector Machines

May 07, 2024

Abstract:5G cellular networks are particularly vulnerable to narrowband jammers that target specific control sub-channels in the radio signal. One mitigation approach is to detect such jamming attacks with an online observation system based on machine learning. We propose to detect jamming at the physical layer with a pre-trained machine learning model that performs binary classification. Based on data from an experimental 5G network, we study the performance of different classification models. A convolutional neural network is compared to support vector machines and k-nearest neighbors, where the last two methods are combined with principal component analysis. The obtained results show substantial differences in terms of classification accuracy and computation time.

GT-PCA: Effective and Interpretable Dimensionality Reduction with General Transform-Invariant Principal Component Analysis

Jan 28, 2024

Abstract:Data analysis often requires methods that are invariant with respect to specific transformations, such as rotations in the case of images or shifts in the case of images and time series. While principal component analysis (PCA) is a widely used dimension reduction technique, it lacks robustness with respect to these transformations. Modern alternatives, such as autoencoders, can be invariant with respect to specific transformations but are generally not interpretable. We introduce General Transform-Invariant Principal Component Analysis (GT-PCA) as an effective and interpretable alternative to PCA and autoencoders. We propose a neural network that efficiently estimates the components and show that GT-PCA significantly outperforms alternative methods in experiments based on synthetic and real data.

Monitoring Machine Learning Models: Online Detection of Relevant Deviations

Sep 26, 2023

Abstract:Machine learning models are essential tools in various domains, but their performance can degrade over time due to changes in data distribution or other factors. On the one hand, detecting and addressing such degradations is crucial for maintaining the models' reliability. On the other hand, given enough data, any arbitrarily small change in quality can be detected. As interventions, such as model re-training or replacement, can be expensive, we argue that they should only be carried out when changes exceed a given threshold. We propose a sequential monitoring scheme to detect these relevant changes. The proposed method reduces unnecessary alerts and overcomes the multiple testing problem by accounting for temporal dependence of the measured model quality. Conditions for consistency and specified asymptotic levels are provided. Empirical validation using simulated and real data demonstrates the superiority of our approach in detecting relevant changes in model quality compared to benchmark methods. Our research contributes a practical solution for distinguishing between minor fluctuations and meaningful degradations in machine learning model performance, ensuring their reliability in dynamic environments.
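The notion of a relevant change can be made concrete with a deliberately simplified sketch: raise an alert only once the windowed mean of a quality metric exceeds a baseline by more than a tolerance `delta`. This is an assumption-laden toy and not the sequential scheme proposed in the paper, which additionally accounts for temporal dependence and controls the asymptotic level. The function name `first_relevant_change` and the parameters `baseline`, `delta`, and `window` are hypothetical.

```python
from collections import deque


def first_relevant_change(losses, baseline, delta, window=50):
    """Return the first index at which the mean loss over the last `window`
    observations exceeds `baseline` by more than `delta`, or None.

    Hypothetical toy rule; not the monitoring scheme from the paper.
    """
    buf = deque(maxlen=window)
    total = 0.0
    for i, loss in enumerate(losses):
        if len(buf) == window:
            total -= buf[0]  # value that the append below will evict
        buf.append(loss)
        total += loss
        if len(buf) == window and total / window - baseline > delta:
            return i
    return None


# A small degradation (0.10 -> 0.12) stays below the tolerance of 0.05 ...
minor = first_relevant_change([0.10] * 100 + [0.12] * 100, baseline=0.10, delta=0.05)
# ... while a large one (0.10 -> 0.30) triggers an alert shortly after it starts.
major = first_relevant_change([0.10] * 100 + [0.30] * 100, baseline=0.10, delta=0.05)
```

In this toy setting the minor shift never triggers an intervention, whereas the major shift is reported a few observations after it begins, which mirrors the distinction between minor fluctuations and meaningful degradations drawn in the abstract.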

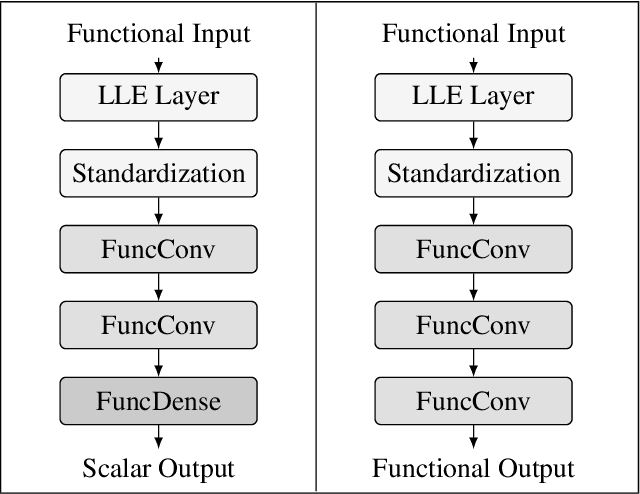

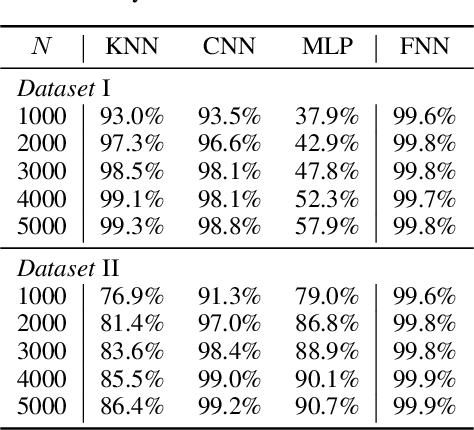

Functional Neural Networks: Shift invariant models for functional data with applications to EEG classification

Jan 14, 2023

Abstract:It is desirable for statistical models to detect signals of interest independently of their position. If the data is generated by some smooth process, this additional structure should be taken into account. We introduce a new class of neural networks that are shift invariant and preserve smoothness of the data: functional neural networks (FNNs). For this, we use methods from functional data analysis (FDA) to extend multi-layer perceptrons and convolutional neural networks to functional data. We propose different model architectures, show that the models outperform a benchmark model from FDA in terms of accuracy and successfully use FNNs to classify electroencephalography (EEG) data.
