Fernanda L. Ribeiro

SMILE-UHURA Challenge -- Small Vessel Segmentation at Mesoscopic Scale from Ultra-High Resolution 7T Magnetic Resonance Angiograms

Nov 14, 2024

Abstract:The human brain receives nutrients and oxygen through an intricate network of blood vessels. Pathology affecting small vessels, at the mesoscopic scale, represents a critical vulnerability within the cerebral blood supply and can lead to severe conditions, such as Cerebral Small Vessel Diseases. The advent of 7 Tesla MRI systems has enabled the acquisition of higher spatial resolution images, making it possible to visualise such vessels in the brain. However, the lack of publicly available annotated datasets has impeded the development of robust, machine learning-driven segmentation algorithms. To address this, the SMILE-UHURA challenge was organised. This challenge, held in conjunction with the ISBI 2023, in Cartagena de Indias, Colombia, aimed to provide a platform for researchers working on related topics. The SMILE-UHURA challenge addresses the gap in publicly available annotated datasets by providing an annotated dataset of Time-of-Flight angiography acquired with 7T MRI. This dataset was created through a combination of automated pre-segmentation and extensive manual refinement. In this manuscript, sixteen submitted methods and two baseline methods are compared both quantitatively and qualitatively on two different datasets: held-out test MRAs from the same dataset as the training data (with labels kept secret) and a separate 7T ToF MRA dataset where both input volumes and labels are kept secret. The results demonstrate that most of the submitted deep learning methods, trained on the provided training dataset, achieved reliable segmentation performance. Dice scores reached up to 0.838 $\pm$ 0.066 and 0.716 $\pm$ 0.125 on the respective datasets, with an average performance of up to 0.804 $\pm$ 0.15.

An explainability framework for cortical surface-based deep learning

Mar 15, 2022

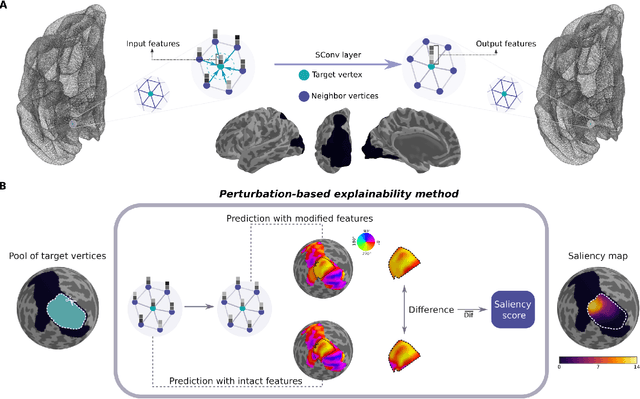

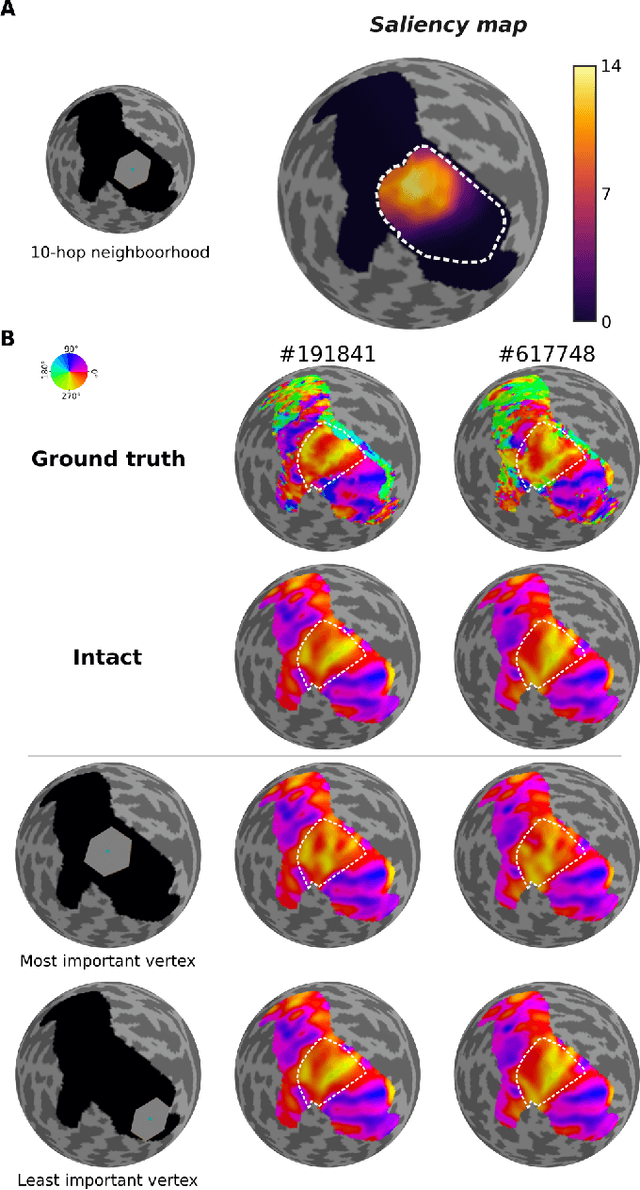

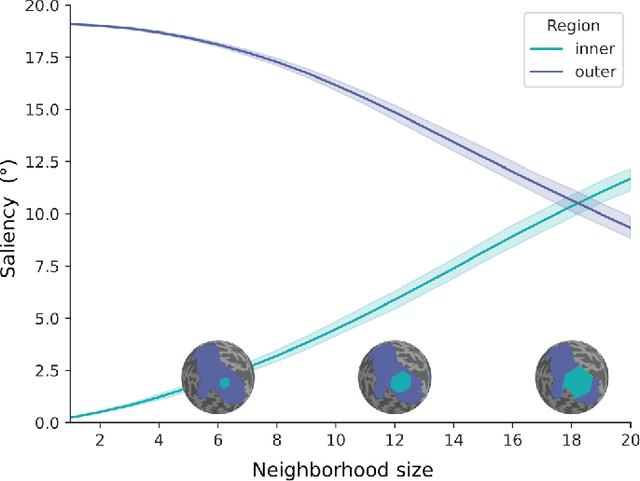

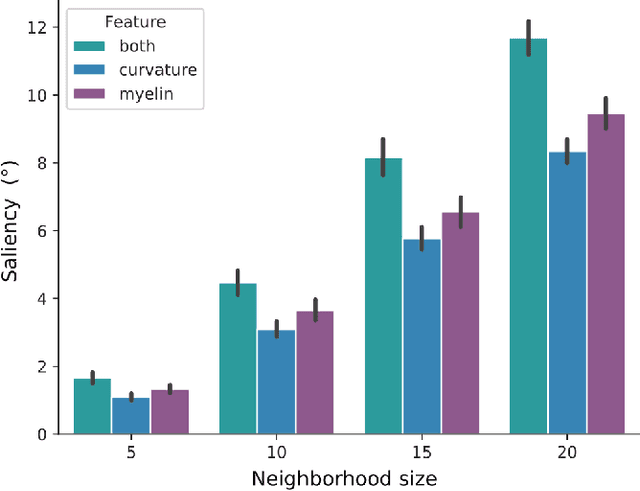

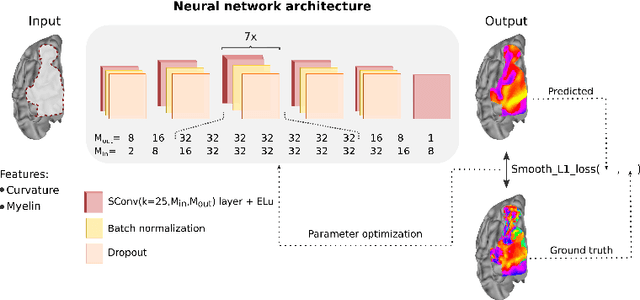

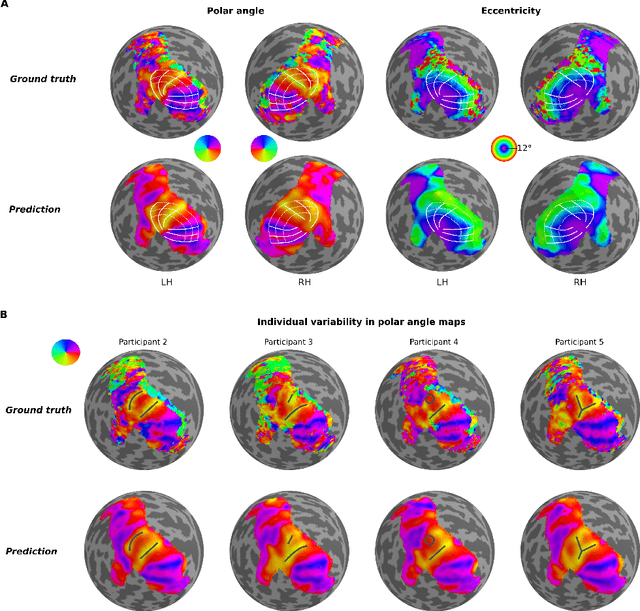

Abstract:The emergence of explainability methods has enabled a better comprehension of how deep neural networks operate through concepts that are easily understood and implemented by the end user. While most explainability methods have been designed for traditional deep learning, some have been further developed for geometric deep learning, in which data are predominantly represented as graphs. These representations are regularly derived from medical imaging data, particularly in the field of neuroimaging, in which graphs are used to represent brain structural and functional wiring patterns (brain connectomes) and cortical surface models are used to represent the anatomical structure of the brain. Although explainability techniques have been developed for identifying important vertices (brain areas) and features for graph classification, these methods are still lacking for more complex tasks, such as surface-based modality transfer (or vertex-wise regression). Here, we address the need for surface-based explainability approaches by developing a framework for cortical surface-based deep learning, providing a transparent system for modality transfer tasks. First, we adapted a perturbation-based approach for use with surface data. Then, we applied our perturbation-based method to investigate the key features and vertices used by a geometric deep learning model developed to predict brain function from anatomy directly on a cortical surface model. We show that our explainability framework is not only able to identify important features and their spatial location but that it is also reliable and valid.

DeepRetinotopy: Predicting the Functional Organization of Human Visual Cortex from Structural MRI Data using Geometric Deep Learning

May 26, 2020

Abstract:Whether it be in a man-made machine or a biological system, form and function are often directly related. In the latter, however, this particular relationship is often unclear due to the intricate nature of biology. Here we developed a geometric deep learning model capable of exploiting the actual structure of the cortex to learn the complex relationship between brain function and anatomy from structural and functional MRI data. Our model was not only able to predict the functional organization of human visual cortex from anatomical properties alone, but it was also able to predict nuanced variations across individuals.
