Felipe Moreno-Vera

Inferring Discussion Topics about Exploitation of Vulnerabilities from Underground Hacking Forums

May 07, 2024

Abstract:The increasing sophistication of cyber threats necessitates proactive measures to identify vulnerabilities and potential exploits. Underground hacking forums serve as breeding grounds for the exchange of hacking techniques and discussions related to exploitation. In this research, we propose an innovative approach using topic modeling to analyze and uncover key themes in vulnerabilities discussed within these forums. The objective of our study is to develop a machine learning-based model that can automatically detect and classify vulnerability-related discussions in underground hacking forums. By monitoring and analyzing the content of these forums, we aim to identify emerging vulnerabilities, exploit techniques, and potential threat actors. To achieve this, we collect a large-scale dataset consisting of posts and threads from multiple underground forums. We preprocess and clean the data to ensure accuracy and reliability. Leveraging topic modeling techniques, specifically Latent Dirichlet Allocation (LDA), we uncover latent topics and their associated keywords within the dataset. This enables us to identify recurring themes and prevalent discussions related to vulnerabilities, exploits, and potential targets.

* 6 pages
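As a rough illustration of how LDA surfaces topics and their keywords from forum posts, the sketch below fits a tiny LDA model with collapsed Gibbs sampling. It is not the authors' pipeline: the abstract does not state which inference method or hyperparameters were used, so the sampler, the function name `lda_gibbs`, the values of `alpha` and `beta`, and the toy `posts` are all assumptions made for the example.

```python
import random
from collections import Counter


def lda_gibbs(docs, n_topics, n_iters=200, alpha=0.1, beta=0.01, top_n=5, seed=0):
    """Fit LDA by collapsed Gibbs sampling; return top keywords per topic.

    docs is a list of token lists. alpha and beta are the symmetric
    Dirichlet priors (illustrative values, not taken from the paper).
    """
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    doc_topic = [[0] * n_topics for _ in docs]          # tokens of doc d in topic k
    topic_word = [Counter() for _ in range(n_topics)]   # count of word w in topic k
    topic_total = [0] * n_topics                        # total tokens in topic k

    # Random initial topic assignment for every token.
    assign = []
    for d, doc in enumerate(docs):
        zs = []
        for w in doc:
            k = rng.randrange(n_topics)
            zs.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assign.append(zs)

    for _ in range(n_iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Remove the token's current assignment from the counts.
                k = assign[d][i]
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                # Resample its topic from the collapsed conditional.
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in range(n_topics)
                ]
                k = rng.choices(range(n_topics), weights=weights)[0]
                assign[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1

    return [
        [w for w, c in counts.most_common(top_n) if c > 0]
        for counts in topic_word
    ]


# Hypothetical, already tokenized posts (not from any real forum dataset).
posts = [
    ["exploit", "rce", "payload", "shell", "exploit"],
    ["payload", "shell", "rce", "bypass"],
    ["sell", "account", "price", "escrow", "sell"],
    ["price", "escrow", "account", "buyer"],
]
topics = lda_gibbs(posts, n_topics=2)
for k, keywords in enumerate(topics):
    print(f"topic {k}: {keywords}")
```

On a real corpus the tokens would come from the preprocessing step the abstract mentions, and the number of topics would need to be tuned rather than fixed at two.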

WSAM: Visual Explanations from Style Augmentation as Adversarial Attacker and Their Influence in Image Classification

Aug 29, 2023

Abstract:Currently, style augmentation is capturing attention because convolutional neural networks (CNNs) are strongly biased toward recognizing textures rather than shapes. Most existing styling methods either perform a low-fidelity style transfer or yield a weak style representation in the embedding vector. This paper outlines a style augmentation algorithm that uses stochastic sampling with added noise to improve randomization in a general linear transformation for style transfer. With our augmentation strategy, all models not only show strong robustness to image stylization but also outperform previous methods and surpass state-of-the-art performance on the STL-10 dataset. In addition, we present an analysis of the model interpretations under different style variations. We also report comprehensive experiments comparing performance across deep neural architectures in training settings.

Cream Skimming the Underground: Identifying Relevant Information Points from Online Forums

Aug 03, 2023

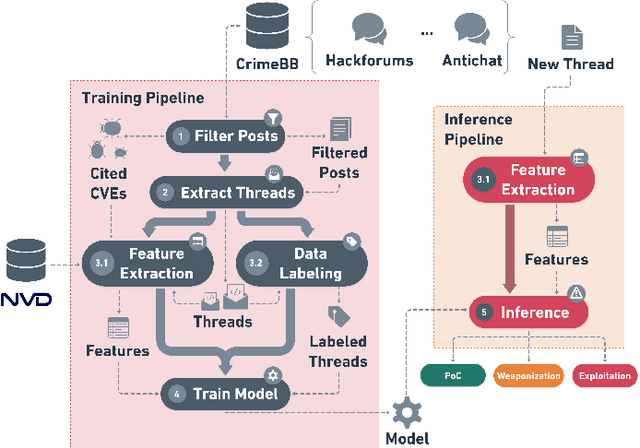

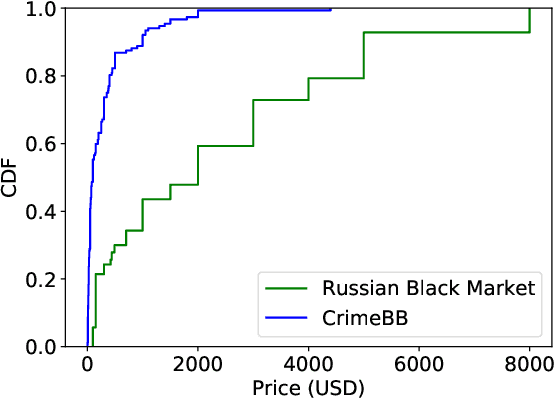

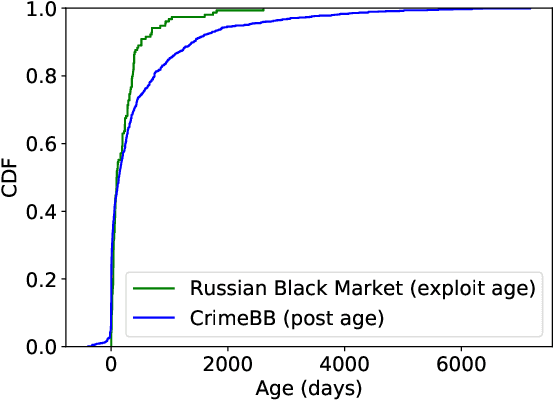

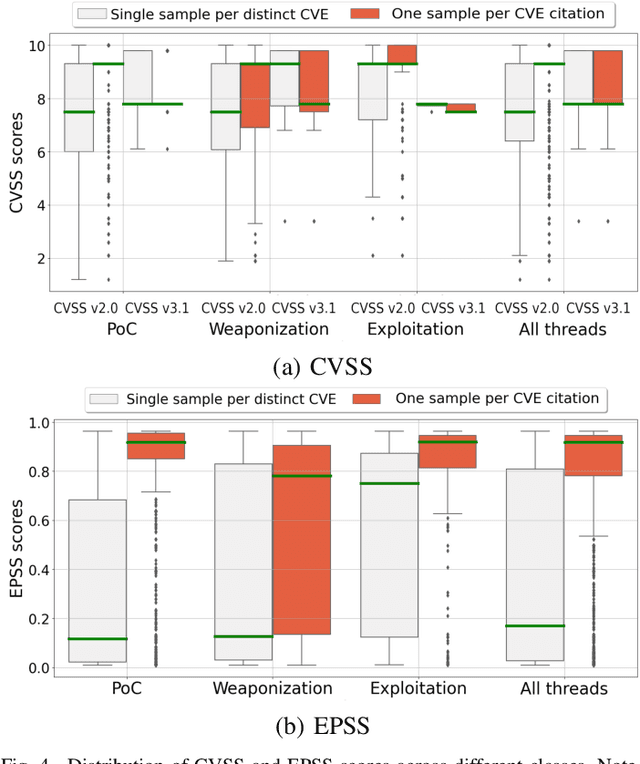

Abstract:This paper proposes a machine learning-based approach for detecting the exploitation of vulnerabilities in the wild by monitoring underground hacking forums. The increasing volume of posts discussing exploitation in the wild calls for an automatic approach to process threads and posts that will eventually trigger alarms depending on their content. To illustrate the proposed system, we use the CrimeBB dataset, which contains data scraped from multiple underground forums, and develop a supervised machine learning model that can filter threads citing CVEs and label them as Proof-of-Concept, Weaponization, or Exploitation. Leveraging random forests, we show that accuracy, precision, and recall above 0.99 are attainable for the classification task. Additionally, we provide insights into the difference in nature between weaponization and exploitation, e.g., by interpreting the output of a decision tree, and analyze the profits and other aspects related to the hacking communities. Overall, our work sheds light on the exploitation of vulnerabilities in the wild and can be used to provide additional ground truth to models such as EPSS and Expected Exploitability.
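The first stage described above, filtering threads that cite CVEs, can be sketched with a regular expression over the standard CVE identifier format (`CVE-` followed by a four-digit year and at least four digits). This is only an illustration of the filtering idea, not the authors' code: the function name `threads_citing_cves` and the sample threads are hypothetical, and the subsequent random forest labeling step is not shown.

```python
import re

# CVE identifiers: "CVE-", a four-digit year, then four or more digits.
CVE_PATTERN = re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE)


def threads_citing_cves(threads):
    """Map thread id -> sorted list of distinct CVE ids cited in its text.

    Threads that cite no CVE are left out of the result.
    """
    cited = {}
    for thread_id, text in threads.items():
        ids = sorted({match.upper() for match in CVE_PATTERN.findall(text)})
        if ids:
            cited[thread_id] = ids
    return cited


# Hypothetical thread texts, invented for the example.
sample_threads = {
    "t1": "Working PoC for CVE-2017-0144, tested on a lab VM.",
    "t2": "Selling accounts, escrow accepted.",
    "t3": "Anyone seen cve-2021-44228 used in the wild? Also CVE-2021-44228 scanners.",
}
print(threads_citing_cves(sample_threads))
```

Only the threads that survive this filter would then be passed to a supervised classifier for the Proof-of-Concept, Weaponization, or Exploitation label.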

Performing Deep Recurrent Double Q-Learning for Atari Games

Aug 16, 2019

Abstract:Currently, many applications in machine learning are based on defining new models to extract more information from data. In this setting, Deep Reinforcement Learning, whose most common application is video games such as Atari and Mario, has had an impact on how computers can learn by themselves using only the information, called rewards, obtained from their actions. Many algorithms have been modeled and implemented based on Deep Recurrent Q-Learning, proposed by DeepMind and used in AlphaZero and Go. In this document, we propose Deep Recurrent Double Q-Learning, an implementation of Deep Reinforcement Learning using Double Q-Learning algorithms and recurrent networks such as LSTM and DRQN.
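For readers unfamiliar with the Double Q-Learning idea, the sketch below computes a bootstrapped target in the commonly used Double DQN form: one set of value estimates selects the next action and a second set evaluates it, which reduces the overestimation that comes from taking a plain maximum. Whether the paper uses exactly this form is an assumption; the function names and the example Q-values are hypothetical, and the recurrent (LSTM) part of the method is not shown.

```python
def double_q_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Target r + gamma * Q_target(s', argmax_a Q_online(s', a)).

    next_q_online and next_q_target are lists of per-action value estimates
    for the next state, from the online and target estimators respectively.
    """
    if done:
        return reward  # no bootstrapping past a terminal state
    # Action selection uses the online estimates...
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    # ...while action evaluation uses the target estimates.
    return reward + gamma * next_q_target[best_action]


def standard_q_target(reward, next_q_target, gamma=0.99, done=False):
    """Standard Q-learning target, shown only for comparison."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


# Hypothetical value estimates for three actions in the next state.
online = [0.2, 0.9, 0.5]
target = [0.3, 0.4, 0.8]
print(double_q_target(1.0, online, target, gamma=0.5))
print(standard_q_target(1.0, target, gamma=0.5))
```

In this example the online estimates pick action 1, so the double target bootstraps from the target estimate 0.4, whereas the standard target bootstraps from the maximum, 0.8.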
