Fehmi Kahraman

Generalized Mask-aware IoU for Anchor Assignment for Real-time Instance Segmentation

Dec 28, 2023

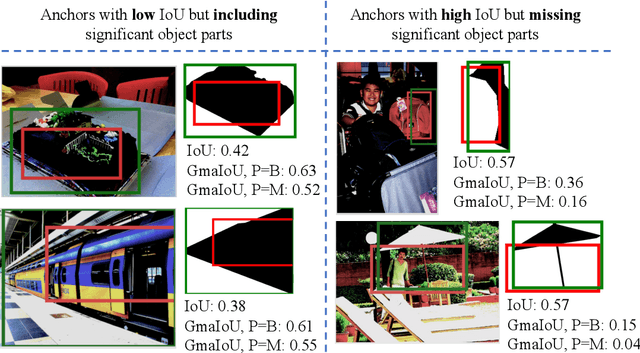

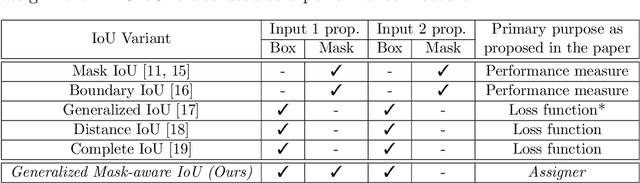

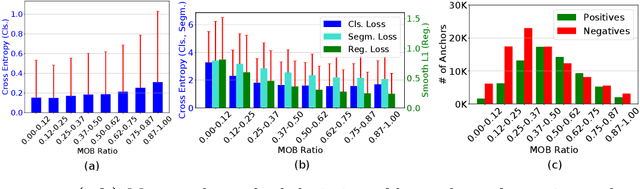

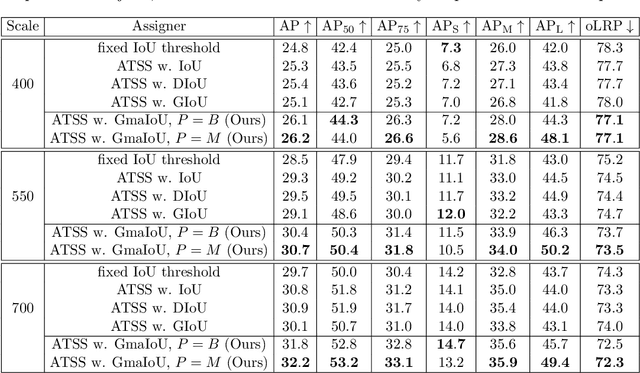

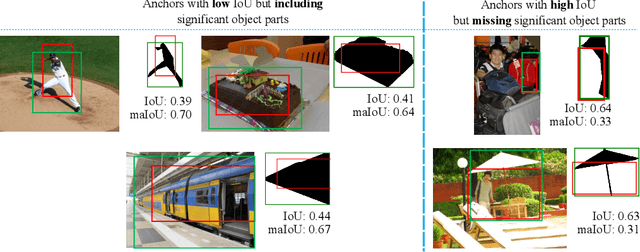

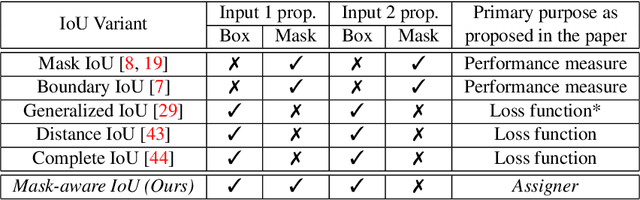

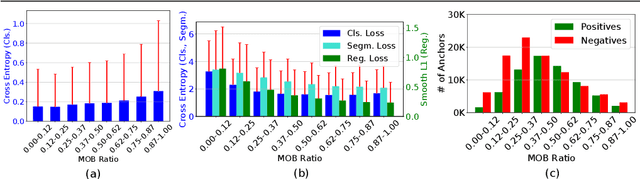

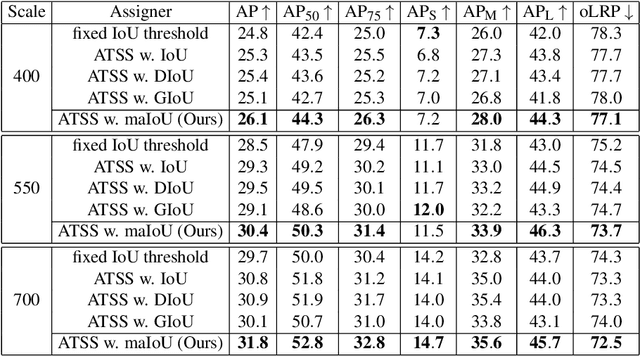

Abstract: This paper introduces Generalized Mask-aware Intersection-over-Union (GmaIoU) as a new measure for positive-negative assignment of anchor boxes during training of instance segmentation methods. Unlike the conventional IoU measure and its variants, which consider only the proximity of the anchor and ground-truth boxes, GmaIoU additionally takes into account the segmentation mask. This enables GmaIoU to provide more accurate supervision during training. We demonstrate the effectiveness of GmaIoU by replacing IoU with GmaIoU in ATSS, a state-of-the-art (SOTA) assigner. Then, we train YOLACT, a real-time instance segmentation method, using our GmaIoU-based ATSS assigner. The resulting YOLACT based on the GmaIoU assigner (i) outperforms ATSS with IoU by $\sim 1.0-1.5$ mask AP, (ii) outperforms YOLACT with a fixed IoU threshold assigner by $\sim 1.5-2$ mask AP over different image sizes, and (iii) decreases the inference time by $25 \%$ owing to using fewer anchors. Taking advantage of this efficiency, we further devise GmaYOLACT, a detector that is faster than YOLACT and $+7$ mask AP points more accurate. Our best model achieves $38.7$ mask AP at $26$ fps on COCO test-dev, establishing a new state-of-the-art for real-time instance segmentation.
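The abstract describes swapping the proximity measure inside an ATSS-style assigner. As a rough illustration of where such a measure plugs in, the sketch below applies an adaptive threshold (mean plus standard deviation of the candidate scores) with a replaceable `measure` argument. The function names, the use of the population standard deviation, and the omission of ATSS's candidate pre-selection and center checks are all simplifying assumptions made here, not details taken from the paper.

```python
from statistics import mean, pstdev


def box_iou(a, b):
    """Plain IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def adaptive_assign(candidates, gt_box, measure=box_iou):
    """Label candidate anchors as positive/negative for one ground truth.

    Hypothetical helper: the threshold is mean + std of the candidate
    scores, in the spirit of ATSS. `measure` is the pluggable proximity
    function (IoU here; a mask-aware measure could be passed instead).
    """
    scores = [measure(c, gt_box) for c in candidates]
    threshold = mean(scores) + pstdev(scores)
    positives = [s >= threshold for s in scores]
    return positives, threshold


if __name__ == "__main__":
    gt = (0, 0, 10, 10)
    anchors = [(0, 0, 10, 10), (5, 5, 15, 15), (20, 20, 30, 30)]
    print(adaptive_assign(anchors, gt))
```

Keeping the measure as a parameter mirrors the abstract's framing: the assigner logic stays the same and only the notion of proximity changes.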

Correlation Loss: Enforcing Correlation between Classification and Localization

Jan 03, 2023

Abstract: Object detectors are conventionally trained by a weighted sum of classification and localization losses. Recent studies (e.g., predicting IoU with an auxiliary head, Generalized Focal Loss, Rank & Sort Loss) have shown that forcing these two loss terms to interact with each other in non-conventional ways creates a useful inductive bias and improves performance. Inspired by these works, we focus on the correlation between classification and localization and make two main contributions: (i) We provide an analysis of the effects of correlation between classification and localization tasks in object detectors. We identify why correlation affects the performance of various NMS-based and NMS-free detectors, and we devise measures to evaluate the effect of correlation and use them to analyze common detectors. (ii) Motivated by our observations, e.g., that NMS-free detectors can also benefit from correlation, we propose Correlation Loss, a novel plug-in loss function that improves the performance of various object detectors by directly optimizing correlation coefficients. For example, Correlation Loss on Sparse R-CNN, an NMS-free method, yields a 1.6 AP gain on COCO and a 1.8 AP gain on the Cityscapes dataset. Our best model on Sparse R-CNN reaches 51.0 AP without test-time augmentation on COCO test-dev, reaching state-of-the-art. Code is available at https://github.com/fehmikahraman/CorrLoss
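To make the idea of "directly optimizing correlation coefficients" concrete, here is a minimal sketch that turns a Pearson correlation between classification scores and localization quality (IoU) of positive detections into a loss value of the form `1 - correlation`. The choice of Pearson's coefficient, the `1 - rho` form, and the function names are assumptions made for illustration; the abstract does not specify the coefficient or the exact loss, and a real training loss would be written in an autograd framework rather than plain Python.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # correlation is undefined for constant inputs; treat as 0
    return cov / (sx * sy)


def correlation_loss(scores, ious):
    """Hypothetical loss: 0 when scores and IoUs are perfectly correlated,
    2 when they are perfectly anti-correlated."""
    return 1.0 - pearson(scores, ious)


if __name__ == "__main__":
    # Confident detections that are also well localized give a low loss.
    print(correlation_loss([0.1, 0.5, 0.9], [0.2, 0.6, 1.0]))
    # Confident detections that are poorly localized give a high loss.
    print(correlation_loss([0.1, 0.5, 0.9], [1.0, 0.6, 0.2]))
```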

Mask-aware IoU for Anchor Assignment in Real-time Instance Segmentation

Oct 19, 2021

Abstract: This paper presents Mask-aware Intersection-over-Union (maIoU) for assigning anchor boxes as positives and negatives during training of instance segmentation methods. Unlike conventional IoU and its variants, which consider only the proximity of two boxes, maIoU consistently measures the proximity of an anchor box to not only a ground-truth box but also its associated ground-truth mask. By additionally considering the mask, which represents the shape of the object, maIoU enables more accurate supervision during training. We demonstrate the effectiveness of maIoU on a state-of-the-art (SOTA) assigner, ATSS, by replacing the IoU operation with our maIoU and training YOLACT, a SOTA real-time instance segmentation method. Using ATSS with maIoU consistently (i) outperforms ATSS with IoU by $\sim 1$ mask AP, (ii) outperforms the baseline YOLACT with a fixed IoU threshold assigner by $\sim 2$ mask AP over different image sizes, and (iii) decreases the inference time by $25 \%$ owing to using fewer anchors. Then, exploiting this efficiency, we devise maYOLACT, a detector that is faster than YOLACT and $+6$ AP more accurate. Our best model achieves $37.7$ mask AP at $25$ fps on COCO test-dev, establishing a new state-of-the-art for real-time instance segmentation. Code is available at https://github.com/kemaloksuz/Mask-aware-IoU
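The difference between a box-only measure and a mask-aware one can be illustrated with a toy example. The sketch below is not the paper's maIoU definition (see the linked repository for that); it is one simple, assumed way to fold a mask into the overlap: the ground-truth box area is redistributed over the on-mask pixels, so overlap with the object's shape counts and overlap with empty background inside the box does not. Boxes are integer pixel rectangles `(x1, y1, x2, y2)` with exclusive upper bounds, and all names are hypothetical.

```python
def box_iou(a, b):
    """Plain IoU of two integer pixel boxes (x1, y1, x2, y2)."""
    iw = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mask_weighted_iou(anchor, gt_box, mask):
    """Illustrative mask-weighted overlap (an assumption, not the paper's formula).

    Each on-mask pixel inside the ground-truth box carries weight
    |gt_box| / |mask|; off-mask pixels carry zero weight. The denominator
    is the ordinary box union, which keeps the result in [0, 1].
    """
    gx1, gy1, gx2, gy2 = gt_box
    gt_area = (gx2 - gx1) * (gy2 - gy1)
    anchor_area = (anchor[2] - anchor[0]) * (anchor[3] - anchor[1])
    mask_pixels = sum(mask[y][x] for y in range(gy1, gy2) for x in range(gx1, gx2))
    if mask_pixels == 0:
        return 0.0
    weight = gt_area / mask_pixels
    # Overlap region between the anchor and the ground-truth box.
    ox1, oy1 = max(anchor[0], gx1), max(anchor[1], gy1)
    ox2, oy2 = min(anchor[2], gx2), min(anchor[3], gy2)
    covered = sum(mask[y][x] for y in range(oy1, oy2) for x in range(ox1, ox2))
    box_inter = max(0, ox2 - ox1) * max(0, oy2 - oy1)
    union = anchor_area + gt_area - box_inter
    return weight * covered / union


if __name__ == "__main__":
    # 4x4 ground-truth box whose object occupies only the left half.
    mask = [[1, 1, 0, 0] for _ in range(4)]
    gt = (0, 0, 4, 4)
    left, right = (0, 0, 2, 4), (2, 0, 4, 4)
    print(box_iou(left, gt), box_iou(right, gt))
    print(mask_weighted_iou(left, gt, mask), mask_weighted_iou(right, gt, mask))
```

In this toy setup the two anchors have identical plain IoU with the ground-truth box, yet only the one lying on the object receives a nonzero mask-weighted score, which is the kind of distinction the abstract attributes to considering the mask.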
