Fatemah Almeman

GEAR: A Simple GENERATE, EMBED, AVERAGE AND RANK Approach for Unsupervised Reverse Dictionary

Dec 09, 2024

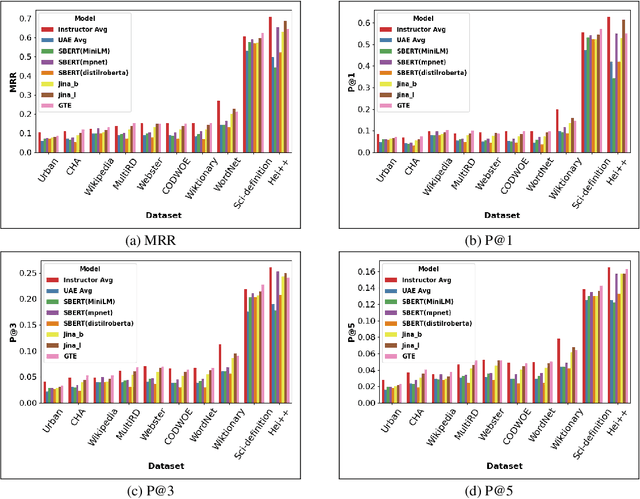

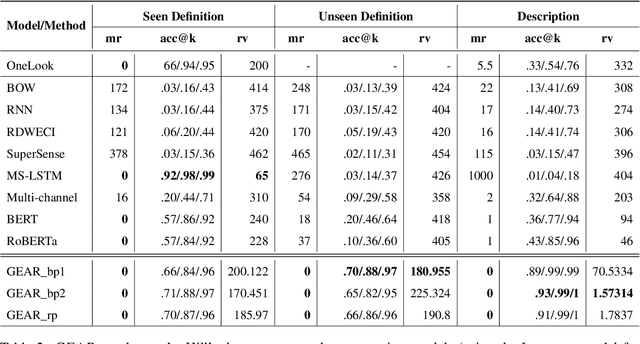

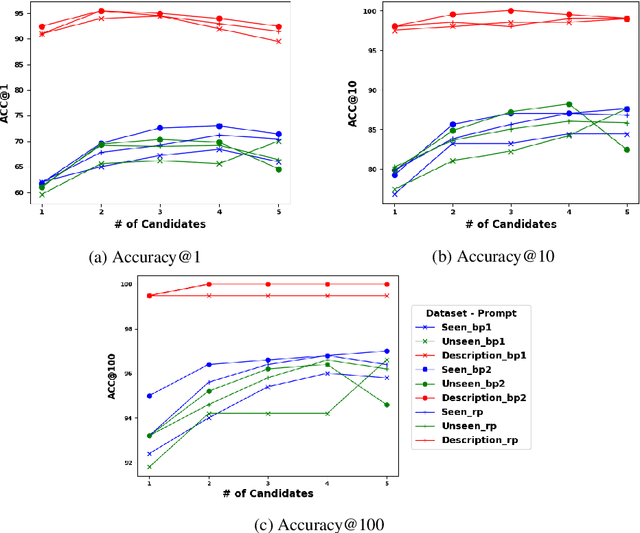

Abstract: Reverse Dictionary (RD) is the task of obtaining the most relevant word or set of words given a textual description or dictionary definition. Effective RD methods have applications in accessibility, translation and writing support systems. Moreover, in NLP research, RD is used to benchmark text encoders at various granularities, as it often requires word, definition and sentence embeddings. In this paper, we propose a simple approach to RD that leverages LLMs in combination with embedding models. Despite its simplicity, this approach outperforms supervised baselines on well-studied RD datasets, while also showing less over-fitting. We also conduct a number of experiments on different dictionaries and analyze how different styles, registers and target audiences impact the quality of RD systems. We conclude that, on average, untuned embeddings alone fare well below an LLM-only baseline (although they are competitive in highly technical dictionaries), but are crucial for boosting performance in combined methods.

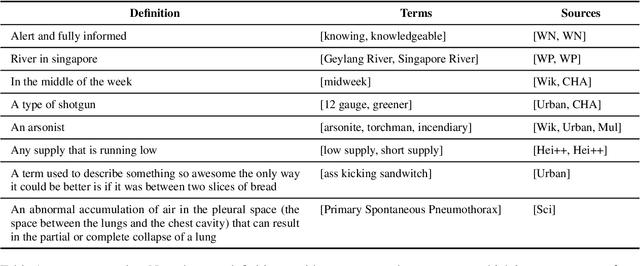

3D-EX: A Unified Dataset of Definitions and Dictionary Examples

Aug 11, 2023

Abstract: Definitions are a fundamental building block in lexicography, linguistics and computational semantics. In NLP, they have been used for retrofitting word embeddings or augmenting contextual representations in language models. However, lexical resources containing definitions exhibit a wide range of properties, which has implications for the behaviour of models trained and evaluated on them. In this paper, we introduce 3D-EX, a dataset that aims to fill this gap by combining well-known English resources into one centralized knowledge repository in the form of <term, definition, example> triples. 3D-EX is a unified evaluation framework with carefully pre-computed train/validation/test splits to prevent memorization. We report experimental results that suggest that this dataset could be effectively leveraged in downstream NLP tasks. Code and data are available at https://github.com/F-Almeman/3D-EX.
